Abstract

This research explores the development and implementation of an artificial intelligence (AI) application on humanoid robots, focusing on the Pepper robot. Through literature reviews, interviews, and surveys, this project seeks to understand how AI is used in humanoid robots, the development process and its challenges, and the potential implications of AI for humanoid robots. The development and implementation of the AI application are discussed in detail, including the system architecture, its components, and the integration of the AI into the Pepper robot. The research also examines how the application has been used to improve the functionality of the Pepper robot and the ethical considerations it raises. The findings of this project demonstrate that AI can be successfully implemented in humanoid robots to create an effective, autonomous system. With further development, AI in humanoid robots has the potential to transform the robotics industry.

Voice recognition technology has advanced rapidly in recent years, with applications ranging from virtual assistants to smart home devices. Robotics is one area where it could make a significant impact: by enabling robots to understand and respond to voice commands, voice recognition can greatly enhance their usability and functionality. In this dissertation, we present an AI voice recognition system for the Pepper robot that enables it to follow commands spoken by a user. Our system uses state-of-the-art machine learning algorithms to recognize and interpret voice commands and integrates with the Pepper robot’s movement code so that the robot can perform various tasks. We evaluate the system’s performance on a range of voice commands and demonstrate its effectiveness in real-world scenarios. Our work represents a significant step forward in the development of voice-enabled robotics and has the potential to greatly enhance the usability and accessibility of robots.

Chapter 1: Introduction

Robotics and artificial intelligence (AI) are two of the fastest-evolving technologies of the modern era, and they are transforming how people live and work. According to Chakraborty et al. (2002), AI replicates human intelligence in machines programmed to learn, reason, and make decisions. Robotics, by contrast, deals with creating, maintaining, and controlling machines that can work autonomously or under human direction. One of the main difficulties in robotics is creating robots that move and interact with their surroundings in ways that feel intuitive and natural to people (Mondal, 2020). This project investigates the application of AI and robotics to movement and voice recognition on Pepper, a humanoid robot created by SoftBank Robotics. Pepper’s sensors, cameras, microphones, and speakers allow it to perceive and respond to the actions, expressions, and speech of the people around it. This project aims to implement a Python system using AI algorithms that enables Pepper to identify and react to user input such as hand gestures and spoken instructions.

For precise interpretation of voice commands, the system employs state-of-the-art machine learning algorithms (Rajawat et al., 2021). These algorithms are combined with the Pepper robot’s movement code so that the robot can perform a wide variety of actions, which allows us to test the system’s responsiveness to a wide range of voice requests and demonstrate its efficacy. The system can recognize voice commands such as “go forward,” “turn left,” and “stop” and use them to control the robot’s actions. The system is also designed to recognize and react to people’s emotions, using face recognition and emotion-reading algorithms: the robot can identify a person’s face, read their expression, and react appropriately. For example, the robot can be programmed to respond with a smile when it detects happiness, or with soothing words when it detects anger. This system represents a significant step toward intuitive and natural human-robot interaction and could greatly enhance the usability and accessibility of robots for end users in a wide range of settings. For example, robots could assist elderly patients with daily activities in healthcare settings, or help teach and guide students in schools and universities.
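As an illustration only, the sketch below shows one way a recognized transcript could be mapped to the movement commands named above. It assumes that a speech recognizer has already produced a text string; the `Command` enum, the `parse_command` and `velocity_for` functions, and the velocity values are hypothetical and are not part of Pepper’s SDK or of this project’s code.

```python
from enum import Enum
from typing import Optional, Tuple

class Command(Enum):
    """Hypothetical set of movement commands understood by the robot."""
    FORWARD = "go forward"
    TURN_LEFT = "turn left"
    STOP = "stop"

# Hypothetical velocity targets: (forward speed in m/s, turn rate in rad/s).
VELOCITIES = {
    Command.FORWARD: (0.3, 0.0),
    Command.TURN_LEFT: (0.0, 0.5),
    Command.STOP: (0.0, 0.0),
}

def parse_command(transcript: str) -> Optional[Command]:
    """Return the first known command phrase found in the transcript."""
    text = transcript.lower().strip()
    # Check "stop" first so that the safety command always wins.
    for cmd in (Command.STOP, Command.FORWARD, Command.TURN_LEFT):
        if cmd.value in text:
            return cmd
    return None

def velocity_for(transcript: str) -> Tuple[float, float]:
    """Map a transcript to a velocity; unrecognized input means stand still."""
    cmd = parse_command(transcript)
    return VELOCITIES[cmd] if cmd else (0.0, 0.0)

print(parse_command("Pepper, please go forward"))  # Command.FORWARD
print(velocity_for("turn left now"))                # (0.0, 0.5)
```

In a deployment, the returned pair would be passed to the robot’s motion interface; that step is deliberately omitted because it depends on the SDK version in use. Treating unknown input as “stand still” is a conservative design choice for a mobile robot.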

In conclusion, the use of AI and robotics has the potential to revolutionize the way humans interact with machines. By developing systems such as the one presented in this project, robots can become more effective and intuitive assistants in various settings. By combining sophisticated algorithms with intuitive movement and voice recognition, robots can become more accessible and useful tools for everyday life.

1.1 SoftBank Robotics Company

SoftBank Robotics is a Japanese robotics company founded in 2012 and a subsidiary of the SoftBank Group, a Japanese multinational corporation. Its Pepper robot is a humanoid designed to provide a seamless and natural user experience (Tuomi, Tussyadiah, & Hanna, 2021). The company has developed humanoid robots, robots for education, and robots for customer service, and businesses and other institutions worldwide have widely adopted Pepper and its other robots. In recent years, the company’s robots have become increasingly popular as demand for automated customer assistance has grown. However, the company has faced challenges, including the high cost of research and development, competition from other robotics companies, and the difficulty of creating robots capable of natural and intuitive interaction with people. Pepper’s intelligence comes from AI (see Section 1.2). How advanced is Pepper’s AI at this point, and what are the consequences of implementing it?

The main goal of Pepper’s current AI is to make fluent, natural conversation with people possible. Horák, Rambousek, and Medve (2019) report that the robot can distinguish between different voices and emotions and react to touch and motion, which makes it more humanlike in its ability to follow instructions and complete tasks. The consequences of equipping Pepper with AI are substantial. For instance, the robot can gather information from its surroundings and use it to adapt its behavior to new circumstances. Because the robot can self-correct, this could significantly reduce the time and effort needed for training and maintenance. AI could also improve the robot’s ability to understand and communicate with humans.

1.2 AI Algorithms

Artificial intelligence (AI) is a catchall term for the study of how to make computers intelligent in human-like ways, including giving them the ability to reason, plan, learn, and perceive.

According to Singh, Banga, and Yingthawornsuk (2022), the term “AI” now encompasses the entire conceptualization of an intelligent machine, including both its operational and social effects. The development of ubiquitous sensing systems and wearable technologies is bringing us closer to intelligent embedded systems that seamlessly extend the human body and mind. Computer scientist John McCarthy first used the phrase “artificial intelligence” in 1955. Since then, the field has expanded greatly thanks to developments in machine learning, neural networks, and other technologies that enable smarter machines. In the more than 65 years since, AI has been applied in fields ranging from autonomous vehicles to medical diagnosis. AI can be beneficial in many ways: it can perform complex tasks such as recognizing patterns in large datasets, and it can carry out calculations far faster than a human. AI systems can also learn from past mistakes to improve their accuracy and efficiency.
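The idea that an AI system learns from its past mistakes can be illustrated with the classic perceptron, which changes its weights only when it misclassifies an example. The sketch below is a generic textbook illustration using made-up toy data (the logical AND function); it is not part of the Pepper system, and the learning rate and epoch limit are arbitrary choices.

```python
from typing import List, Tuple

def train_perceptron(data: List[Tuple[Tuple[int, int], int]],
                     epochs: int = 20, lr: float = 0.1):
    """Train a two-input perceptron whose labels are 0 or 1."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for (x1, x2), label in data:
            pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            error = label - pred  # nonzero only when the prediction was wrong
            if error != 0:
                mistakes += 1
                # Nudge the weights toward the correct answer.
                w[0] += lr * error * x1
                w[1] += lr * error * x2
                b += lr * error
        if mistakes == 0:  # a full pass without errors: training has converged
            break
    return w, b

def predict(w, b, x1, x2):
    return 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0

AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND_DATA)
print([predict(w, b, x1, x2) for (x1, x2), _ in AND_DATA])  # [0, 0, 0, 1]
```

Each update is triggered by an error, so the model improves precisely where it previously failed; modern neural networks generalize the same principle with gradient descent.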

In addition, AI can help reduce labor costs, since machines can often perform certain tasks better than humans at much lower cost. However, AI also has drawbacks. AI systems can be quite costly because of the extensive resources required to build and maintain them. They can also be unreliable, because they can only perform as well as the data they are given. Finally, AI systems can be vulnerable to malicious attacks, because they rely on data that can be tampered with or corrupted. In summary, over its 65-year history AI has been put to many different uses. Its benefits include the ability to complete complicated tasks, learn from mistakes, and save on labor costs, but it also has drawbacks, such as high development costs, potential unreliability, and vulnerability to attack.

1.3 Python’s Pros and Cons as a Programming Language

Python is a high-level, object-oriented programming language created by Guido van Rossum and first released in 1991. Its simple syntax, high readability, and adaptability make it a favorite among developers. Python has been around for more than 30 years, and its popularity has grown rapidly in recent years as more programmers discover its ease of use and capabilities. Python’s benefits are extensive. Its straightforward, understandable syntax makes it simple to learn and use, and its extensive libraries and frameworks make it possible to build high-quality software quickly.

Python’s adaptability also makes it useful in many fields, from software engineering to machine learning. However, Python has drawbacks. Because it is usually interpreted, it is generally slower than compiled languages such as C++. It is also not the best fit for every application; mobile development and real-time systems, for example, are often better served by other languages. Lastly, beginners may find the Python community hard to break into because of its perceived exclusivity. Overall, Python is a high-level, object-oriented language that has been in use for more than 30 years. Its strengths include clear syntax, high readability, and extensive library and framework support, while its potential drawbacks include its interpreted nature and the perceived exclusivity of its community.

1.4 Aims of This Project

This study aims to show how artificial intelligence and robotics can improve human-robot interaction and to lay the groundwork for future studies in this area. The primary objective of this Pepper robot AI movement and voice recognition project is to design and implement a system that allows the robot to move and interact with its surroundings in a way that responds to human input. Achieving this requires integrating state-of-the-art AI techniques: machine learning algorithms for voice recognition and natural language processing, and advanced movement algorithms for the robot’s physical motion. Such a system could open up uses across many industries, including healthcare, education, and entertainment. The ultimate objective is to show how human-robot interaction systems can enhance human well-being and advance the state of the art in this field.

There have been many breakthroughs in AI and robotics in recent years. AI has helped produce robots that move and interact with their surroundings in more humanlike ways, including detecting and responding to spoken orders and facial expressions. Recent advances in robotics have also enabled more naturalistic robot movement and interaction. Robots that can interpret and respond to human input have been developed using AI techniques such as machine learning and natural language processing (NLP), and AI has also enabled robots that can correct their own behavior. This study aims to provide a comprehensive understanding of machine learning algorithms for robotic motion, speech recognition, and NLP. The reader will gain an understanding of the sensors, actuators, and microcontrollers needed to build and deploy a Pepper robot that uses AI for locomotion and speech recognition. The research and testing phases also provide experience with programming tools and platforms such as Python, TensorFlow, and ROS (Robot Operating System), as well as practice in soft skills such as project management, teamwork, and communication that are valuable in any profession.

Overall, this Pepper robot AI movement and voice recognition project offers a distinctive educational opportunity and excellent practice for future professional work.

Structure

This dissertation begins with an introduction that explains the overall purpose of the project and the specialized terminology used throughout. A literature review then presents established approaches that have been used successfully in the past. The methodology section details the procedures and strategies used to obtain the results, followed by a description of the algorithms and programs used in the AI development. Finally, the results show where the project succeeded and where it fell short, and what steps could improve future studies.

To complete this project, I have done the following. First, I researched AI and robotics extensively by reading the literature, talking to experts, and visiting research facilities, which gave me a thorough understanding of the relevant technologies and their possible uses. Second, I created a fully functional AI system for the Pepper robot, including voice recognition, NLP, and robotic motion algorithms; to achieve this, I selected and programmed the necessary hardware and software components and created a set of rules and algorithms that govern the robot’s actions. Third, I built the Pepper robot’s AI by writing code in Python and using tools and frameworks such as TensorFlow and ROS (Robot Operating System), which included integrating the system with sensors and actuators and testing and troubleshooting the code. Fourth, I tested and evaluated the system in a number of scenarios, from voice recognition to robotic motion, which allowed me to assess its efficiency and pinpoint its weak points. Fifth, I documented the project’s design, implementation, and evaluation, which helped me track my progress and lays the groundwork for further study and innovation in this area.

1.5 Anticipated Outcomes

The intended outcome of this project is a contribution to the ongoing work of making robots smarter, more helpful, and available to a broader audience, for purposes ranging from healthcare and education to entertainment and social interaction. Robots and AI could improve many aspects of human life. Robots, for instance, can automate menial activities, allowing people to devote their time and energy to more meaningful and creative work. AI-based systems can also advance healthcare through intelligent diagnostic and treatment tools. In addition, robots can assist elderly people and people with disabilities by serving as companions and facilitating social engagement. Some of this potential has already been demonstrated: a recent study published in Science Robotics indicated that robots could improve the quality of life of elderly people by providing companionship and social engagement, thereby reducing their risk of loneliness and low mood.

AI-based systems have also been used effectively in medical diagnosis, with some research indicating that AI can be more accurate than human doctors on specific diagnostic tasks. The potential social benefits of robots and AI are therefore many and varied, ranging from automating mundane activities to assisting elderly people and people with disabilities. Studies have shown that robots and AI can benefit society, for example by supporting the elderly and, in some cases, by making more precise medical diagnoses than humans.

1.6 Potential Hurdles

Incorporating AI into a Pepper robot’s movement and voice recognition poses several challenges. Recognizing and understanding human speech in a noisy, changing environment is a significant challenge for machine learning. It can also be difficult to design movement algorithms that let the Pepper robot respond to human input while avoiding obstacles and navigating complex surroundings. Because of hardware or software limitations, the robot may not always respond correctly to voice commands and may be unable to complete some tasks. There may also be ethical issues with deploying robots in specific fields such as healthcare and security. Finally, building and deploying a Pepper robot that uses AI for motion and voice recognition involves practical challenges, such as the cost and availability of the necessary hardware and software.

Pepper is a humanoid robot created to have easy conversations with people. Getting the Pepper robot to move and interact with its environment in a way sensitive to human input is a major challenge in its development. We must combine cutting-edge AI methods, such as sophisticated movement algorithms for the robot’s physical movements and machine learning algorithms for voice recognition and natural language processing.

Recent efforts (Yang et al., 2019) have concentrated on creating AI systems that allow the Pepper robot to respond to spoken instructions and control its movements accordingly. However, several obstacles must be overcome before this objective can be realized, the first being the speech recognition problem noted above: reliably understanding commands in noisy, changing environments.
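One simple, widely used way to cope with changing background noise before speech recognition is energy-based voice activity detection with an adaptive noise floor. The sketch below is a generic illustration, not the method used in this project; the frame size, smoothing factor `alpha`, and threshold `ratio` are assumed values that would need tuning on real microphone data.

```python
import math
from typing import List, Sequence

def frame_energy(frame: Sequence[float]) -> float:
    """Root-mean-square energy of one audio frame."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))

def detect_speech(samples: Sequence[float], frame_size: int = 4,
                  alpha: float = 0.9, ratio: float = 3.0) -> List[bool]:
    """Flag each frame as speech (True) or background (False).

    The noise floor is an exponential moving average of the energy of
    frames judged to be background, so the detector adapts as the room
    gets louder or quieter. All parameter values are assumptions.
    """
    flags = []
    noise_floor = None
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        energy = frame_energy(samples[start:start + frame_size])
        if noise_floor is None:
            noise_floor = energy  # assume the first frame is background
            flags.append(False)
            continue
        is_speech = energy > ratio * max(noise_floor, 1e-6)
        if not is_speech:
            # Only update the noise estimate on non-speech frames.
            noise_floor = alpha * noise_floor + (1 - alpha) * energy
        flags.append(is_speech)
    return flags

quiet = [0.01, -0.01] * 4  # two frames of low-level background noise
loud = [0.5, -0.5] * 2     # one frame of speech-level energy
print(detect_speech(quiet + loud + quiet))  # [False, False, True, False, False]
```

Only the frames flagged as speech would then be sent to the recognizer, which reduces false triggers from background noise; the very short frames here are for readability, and real audio frames typically span tens of milliseconds.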

Making the Pepper robot respond to people while avoiding obstacles and navigating diverse settings requires sophisticated movement algorithms. To achieve this, advanced sensing and perception technologies must be combined with complex motion planning and control algorithms.
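A full motion planner is beyond a short example, but the reactive safety layer that keeps a robot from driving into obstacles can be sketched. The code assumes three distance readings in metres (front, left, right), such as a base’s sonar or laser sensors might provide; the function name, thresholds, and speeds are hypothetical.

```python
from typing import Tuple

SAFE_DISTANCE = 0.5  # metres; assumed stop threshold
CRUISE_SPEED = 0.3   # m/s; assumed forward speed
TURN_RATE = 0.6      # rad/s; assumed turning speed

def avoid_obstacles(front: float, left: float, right: float,
                    requested: Tuple[float, float]) -> Tuple[float, float]:
    """Filter a requested (forward speed, turn rate) command for safety.

    If the path ahead is clear, the user's request passes through
    unchanged; otherwise forward motion is cancelled and the robot
    turns toward whichever side has more free space.
    """
    forward, _turn = requested
    if forward <= 0 or front > SAFE_DISTANCE:
        return requested  # not moving forward, or the path is clear
    if max(left, right) < SAFE_DISTANCE:
        return (0.0, 0.0)  # boxed in: stop and wait for the user
    # A positive turn rate means turning left (counter-clockwise).
    return (0.0, TURN_RATE if left > right else -TURN_RATE)

print(avoid_obstacles(2.0, 1.0, 1.0, (CRUISE_SPEED, 0.0)))  # (0.3, 0.0)
print(avoid_obstacles(0.3, 1.5, 0.8, (CRUISE_SPEED, 0.0)))  # (0.0, 0.6)
print(avoid_obstacles(0.3, 0.2, 0.2, (CRUISE_SPEED, 0.0)))  # (0.0, 0.0)
```

Placing this filter between the voice-command layer and the motors means that a misrecognized “go forward” cannot override the robot’s own collision check; a real system would pair it with a proper motion planner.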

Despite these obstacles, recent breakthroughs in AI and robotics give much reason for optimism about the future of human-robot interaction. Pepper robots that interact with humans in a genuinely natural and intuitive way may be achievable with state-of-the-art AI techniques and robotics technologies, which would have far-reaching implications for industries as diverse as medicine, education, and entertainment.

Chapter 2: Literature Review

2.1 AI Technology

According to Yusof (2022), AI technology has made great strides in recent years and continues to improve. Among its newest innovations are autonomous vehicles and voice-controlled robots, which may drastically alter daily life by improving accessibility, mobility, and security. The implications of these innovations, and how they might change the world, are analyzed in the final section of this study.

According to Madakam (2022), the goal of artificial intelligence (AI) research is to develop computational systems capable of performing tasks that normally require human intelligence. AI uses algorithms and machine learning to simulate human cognitive abilities such as problem-solving, decision-making, and creative thinking. It has been applied in areas such as natural language processing (NLP), robotics, autonomous vehicles, video games, and virtual assistants, and its study draws on mathematics, engineering, neuroscience, and psychology, among other fields. Advances in AI have made it possible to automate formerly labor-intensive tasks, solve previously intractable problems, and make novel discoveries. AI also enables better user experiences, faster and more accurate data processing, and fewer errors in decision-making (Ahmad, 2021).

2.1.1 AI Implementation to Robots

According to Marvin (2022), AI technology enables the creation of intelligent machines such as robots, making it possible for them to mimic human intelligence and perform complex computational tasks.

According to Mamchur (2022), voice-controlled robots have become increasingly popular in recent years thanks to developments in AI. AI can power a broad variety of robotic processes and devices, from those that answer basic queries to those that interpret and respond to human speech. In voice-controlled robots, AI is used to understand the user’s words and act on them, so that the robot can move around and complete tasks in response to verbal commands. AI also lets a robot learn from its actions and modify its behavior, improving its ability to understand and respond to user input. The Pepper robot, created by SoftBank Robotics, is one example of a successful AI-powered robot: a humanoid designed for seamless human interaction. Machine learning algorithms for voice recognition and natural language processing, together with complex algorithms for movement control, give the robot its AI capabilities. The robot has been used in settings ranging from retail customer service to hospitals and nursing homes, where it has proven effective, improving patient experiences and reducing staff workloads. The popularity of the Pepper robot shows that AI-enhanced robots have broad potential.

Using AI, we can teach a robot to recognize conversational context and reply appropriately; for example, the robot can learn that the phrase “go ahead” means “proceed.” AI’s ability to recognize speech patterns, intonation, and facial expressions also helps robots respond better to human input.
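Mapping paraphrases such as “go ahead” onto a canonical intent can be sketched with a phrase table plus fuzzy string matching, which also tolerates small transcription errors. This is a deliberately simple stand-in for a context-aware language model; the phrase table and the 0.75 similarity cutoff are assumptions. It uses `difflib.get_close_matches` from the Python standard library.

```python
import difflib
from typing import Optional

# Hypothetical table of paraphrases mapped to canonical intents.
PHRASES = {
    "go ahead": "proceed",
    "carry on": "proceed",
    "move forward": "proceed",
    "halt": "stop",
    "stop": "stop",
    "wait here": "stop",
}

def normalize_intent(utterance: str, cutoff: float = 0.75) -> Optional[str]:
    """Return the canonical intent closest to the utterance, if any."""
    text = utterance.lower().strip()
    matches = difflib.get_close_matches(text, PHRASES.keys(), n=1, cutoff=cutoff)
    return PHRASES[matches[0]] if matches else None

print(normalize_intent("Go ahead"))     # proceed
print(normalize_intent("go ahed"))      # proceed (tolerates a typo)
print(normalize_intent("sing a song"))  # None
```

Raising the cutoff makes the robot stricter (fewer wrong actions, more “I didn’t understand” replies); lowering it does the opposite. Handling genuine conversational context would require a learned model rather than a fixed table.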

2.1.2 Use of AI in Robotics

As Lai et al. note, AI has driven significant advances in robotics in recent years. AI makes possible intelligent robots that can avoid obstacles and follow human directions, and it has greatly facilitated the creation of new robots. AI has a wide variety of uses in robotics: it can help robots with object recognition, pattern recognition, data interpretation, route planning, and decision-making, as well as navigation and obstacle avoidance. AI can also help robots understand human speech and interpret written text. Autonomous robotic systems are developed with the help of AI, allowing robots to perform complicated jobs with minimal human intervention and to learn from and respond to their surroundings. To implement AI in robotics, programmers first design an algorithm specifying how the robot perceives and responds to its surroundings. This algorithm determines the robot’s response to situations such as obstacles or voice commands. The algorithm is then implemented in the robot’s programming language. Finally, experiments are conducted to observe the robot’s actions, and the program is adjusted accordingly.

Example 1: SoftBank Robotics’ Pepper robot is an AI-enabled humanoid. The robot can understand human speech and facial expressions and react to touch and movement for a more lifelike experience. Several AI algorithms drive the robot, including machine learning algorithms for voice recognition and natural language processing and complex algorithms for movement control. As noted in Section 2.1.1, it has been deployed in settings ranging from retail customer service to hospitals and nursing homes.

Example 2: Amazon’s cashier-less Go stores are AI-enabled. The stores combine several AI techniques, such as computer vision, NLP, and deep learning, to track shoppers’ locations and identify the products they pick up from the shelves. Customers pay through their Amazon accounts, which eliminates the need to stand in line or interact with a cashier. AI is what allows Amazon to offer its customers this faster, more convenient, and more secure shopping experience.

2.1.2.1 Demonstration of a Controlled System

According to Mohammed (2022), one of the best ways to learn about AI in robotics is to build a demonstration of a controlled system. Setting up a controlled system shows how robots adapt to new conditions, and a robotic car or similar device can be programmed for this purpose. Several parts must work together for such a system to function properly. First, the robotic device’s sensors and control software must be installed. Next, the robot must be programmed to respond to its surroundings and perform simple tasks.

Finally, the robot must be tested thoroughly to ensure that it performs as intended. Once test scenarios have been programmed into the control system, the robotic vehicle or other device can be run through them. This gives a sense of the robot’s general behavior and its efficiency in carrying out specific tasks, and if the system’s performance is inadequate, the resulting data can be used to optimize it.
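The testing step described above can be organized as a table of scripted scenarios that are replayed against the control software, with the pass rate and failures reported afterwards. The sketch assumes the controller is exposed as a Python function from a spoken phrase to an action name; `toy_controller` and the scenarios are hypothetical stand-ins, not the project’s actual test suite.

```python
from typing import Callable, Dict, List, Tuple

Scenario = Tuple[str, str]  # (spoken input, expected action)

def run_scenarios(controller: Callable[[str], str],
                  scenarios: List[Scenario]) -> Dict[str, object]:
    """Replay each scenario and collect the failures for later tuning."""
    failures = []
    for phrase, expected in scenarios:
        actual = controller(phrase)
        if actual != expected:
            failures.append((phrase, expected, actual))
    passed = len(scenarios) - len(failures)
    return {"pass_rate": passed / len(scenarios), "failures": failures}

def toy_controller(phrase: str) -> str:
    """Hypothetical controller that only knows two commands."""
    if "forward" in phrase:
        return "move_forward"
    if "stop" in phrase:
        return "stop"
    return "ignore"

SCENARIOS = [
    ("go forward", "move_forward"),
    ("please stop", "stop"),
    ("turn left", "turn_left"),  # not yet supported, so this one fails
    ("hello there", "ignore"),
]

report = run_scenarios(toy_controller, SCENARIOS)
print(report["pass_rate"])  # 0.75
print(report["failures"])   # [('turn left', 'turn_left', 'ignore')]
```

The failure list points directly at what to optimize next, and rerunning the same scenarios after each change shows whether performance actually improved.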

Robert (2021) reports that robotic systems typically require a control system: an apparatus that monitors and directs the activities of a robotic system. Control devices range in complexity from a simple remote to an elaborate console. The first step in building a control system demonstration is selecting the type of controller. A remote control may be the most straightforward option and can be programmed with simple instructions such as “go forward,” “go back,” “turn left,” and “turn right.” A console-based controller offers a higher level of control; it requires more complex programming and understanding but provides more options for managing the robot, since the robot’s movements, velocities, and directions can be programmed according to the task.
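With a console-based controller, the operator sets velocities rather than discrete moves, and the robot’s resulting position follows from integrating those velocities over time. The sketch below uses the standard unicycle model with simple Euler integration. It is a simplification (Pepper’s base is omnidirectional and can also move sideways), and the time step and command sequence are assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass

@dataclass
class Pose:
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # heading in radians, 0 = facing +x

def integrate(pose: Pose, v: float, omega: float, dt: float) -> Pose:
    """Advance the pose by one Euler step of the unicycle model."""
    return Pose(
        x=pose.x + v * math.cos(pose.theta) * dt,
        y=pose.y + v * math.sin(pose.theta) * dt,
        theta=(pose.theta + omega * dt) % (2 * math.pi),
    )

# Forward 2 s at 0.5 m/s, turn left 90 degrees in 1 s, then forward 1 s.
pose = Pose()
for v, omega, duration in [(0.5, 0.0, 2.0), (0.0, math.pi / 2, 1.0), (0.5, 0.0, 1.0)]:
    steps = 100
    for _ in range(steps):
        pose = integrate(pose, v, omega, duration / steps)
print(round(pose.x, 3), round(pose.y, 3), round(math.degrees(pose.theta), 1))
# 1.0 0.5 90.0
```

A remote control corresponds to a few fixed (v, omega) pairs, whereas a console lets the operator choose any values; in both cases the robot’s own odometry would replace this idealized simulation.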

Example 1: The Pepper robot has been used to assist customers in a number of retail settings. In a clothing store, for instance, the robot helped customers make selections. It was designed to recognize and answer customer questions about products, prices, and availability while suggesting other products that might interest them, and to understand and react to customers’ non-verbal cues, such as facial expressions. The robot’s ability to tailor its assistance to each customer increased satisfaction.

Example 2: The Pepper robot has been deployed in elder care facilities to assist medical staff. In one implementation, AI algorithms embedded in the robot read patients’ emotions and responded accordingly, and the robot was also designed to understand speech and carry out spoken instructions. This enabled it to help staff in various ways, such as reminding patients to take their medication, monitoring their vital signs, and simply being friendly. Patient satisfaction rose because the robot could tailor its care to each elderly patient.
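At its simplest, the emotion-aware behavior in these examples reduces to a policy that maps a detected emotion to a response and acts only when the detector is confident. The sketch assumes an upstream facial-expression classifier that returns a label and a confidence score; the labels, responses, and 0.6 threshold are hypothetical.

```python
from typing import Optional

# Hypothetical responses keyed by emotion label.
RESPONSES = {
    "happy": "smile",
    "angry": "say: I'm sorry, let's take a moment.",
    "sad": "say: Would you like some company?",
}

def choose_response(label: str, confidence: float,
                    threshold: float = 0.6) -> Optional[str]:
    """Return a response only when the classifier is confident enough.

    Low-confidence or unknown emotions return None, so the robot keeps
    its neutral behavior instead of reacting to a likely misreading.
    """
    if confidence < threshold:
        return None
    return RESPONSES.get(label)

print(choose_response("happy", 0.9))   # smile
print(choose_response("angry", 0.4))   # None
print(choose_response("bored", 0.95))  # None
```

In care settings, the cost of a wrong emotional reaction is high, which is why a confidence gate with a neutral fallback is a sensible default.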

2.2 Instruments, Strategies, and Procedures

As Sestino (2022) reports, new technologies are needed to keep pace with rapid developments in robotics. Many of the resources for building mechanical robots are based on AI and computer science. AI is crucial for robots to sense, understand, and act in the world, and it has helped engineers advance robotics in many ways, from machine learning algorithms that drive autonomous decisions to the programming languages used to build robotic systems. The Robot Operating System (ROS), an open-source framework, is widely used for mobile robotic applications.

2.2.1 Operating System for Robots

ROS is a meta-operating system that gives programmers a framework for writing robot software. It is useful both for coordinating groups of robots working on shared tasks and for controlling an individual robot’s sensors, motors, and other components. Because components and functionality can be added rapidly, ROS is a strong platform for developing autonomous vehicles. Besides ROS, mechanical robots also need a variety of sensors, such as radar, ultrasonic sonar, lidar, and GPS, which can detect environmental factors including terrain, weather, road signs, and other objects. According to Bautista (2022), these resources are bringing fully autonomous vehicles within engineers’ reach.
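ROS programs are organized as nodes that exchange messages over named topics: a sensor node publishes readings, and any number of other nodes subscribe to them. The sketch below is a toy, in-process imitation of that publish/subscribe pattern written in plain Python so that it runs without ROS installed; the `TopicBus` class and the topic names are hypothetical and are not the `rospy` or `rclpy` API.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

class TopicBus:
    """Minimal stand-in for ROS-style topics (not the real ROS API)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> None:
        # Deliver the message to every callback registered on the topic.
        for callback in self._subscribers[topic]:
            callback(message)

bus = TopicBus()
log = []

# A "controller node" reacts to range readings by issuing speed commands.
def on_range(distance: float) -> None:
    speed = 0.0 if distance < 0.5 else 0.3
    bus.publish("/cmd_vel", speed)

bus.subscribe("/sonar/front", on_range)
bus.subscribe("/cmd_vel", log.append)  # a "logger node"

for reading in [2.0, 1.2, 0.4]:        # a "sensor node" publishing readings
    bus.publish("/sonar/front", reading)
print(log)  # [0.3, 0.3, 0.0]
```

The benefit the text attributes to ROS comes from this decoupling: the sensor, controller, and logger know only topic names, so components can be added or swapped without changing the others. Real ROS nodes run as separate processes and communicate over the network.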

2.2.2 Techniques

According to Sanda (2022), understanding the methods that allow robots to move is crucial as robots become more ubiquitous. AI, computer vision, and navigation are all key parts of the robotics industry. AI refers to using software to give machines cognition and agency. Computer vision lets robots use their sensors and cameras to decide on their next steps. Robot navigation uses sensors, algorithms, and maps to get from one location to another (Beo, 2021). Developing mobility systems for autonomous vehicles requires attention to several subfields, including path planning, object detection, and motion planning. Path planning finds the optimal route from point A to point B. Object detection lets a robot recognize objects in its environment so that it can avoid collisions. Motion planning determines the speeds and trajectories that will get an autonomous vehicle to its destination without crashing (Das, 2022). By combining AI, computer vision, and navigation algorithms, engineers can build safer and more dependable autonomous vehicles, and significant progress in path planning, object detection, and motion planning is making it possible for such vehicles to drive safely and efficiently in urban environments.
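Path planning of the kind described above is often done with graph search. The sketch below runs A* over a small occupancy grid with 4-connected moves and a Manhattan-distance heuristic. The grid, start, and goal are made-up examples; real robots typically plan over maps built from their own sensors.

```python
import heapq
from typing import List, Optional, Tuple

Cell = Tuple[int, int]

def a_star(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a grid where '#' marks an obstacle."""
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {start: None}
    cost = {start: 0}
    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if current == goal:
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if g > cost[current]:
            continue  # stale heap entry
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_cost = g + 1
                if new_cost < cost.get(nxt, float("inf")):
                    cost[nxt] = new_cost
                    came_from[nxt] = current
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None  # goal unreachable

GRID = [
    "....",
    ".##.",
    "....",
]
path = a_star(GRID, (0, 0), (2, 3))
print(len(path) - 1)  # 5
```

Because the heuristic is admissible, the returned path is a shortest one; motion planning would then turn this sequence of cells into smooth, speed-limited trajectories.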

2.2.3 Method

Sharkov (2022) reports that the number of techniques for making robots more mobile has grown rapidly over the past decade. The use of AI and robotics in autonomous vehicles is becoming one of the field’s most exciting developments, and several techniques have been developed to give autonomous cars greater mobility. The most common approach embeds code in the vehicle that operates according to predetermined settings and situations; a robotic car, for instance, might be pre-programmed to respond to traffic lights and roadside hazards such as debris. Because they can make decisions and act in real time, cars with this level of intelligence are more efficient and dependable than their purely mechanical predecessors. A more advanced approach integrates machine learning algorithms, which let the vehicle accumulate knowledge from its actions and improve its judgment over time. These algorithms can be used to build predictive models that improve the vehicle’s responses to its surroundings.
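The predetermined-settings approach can be sketched as an ordered rule table: each rule pairs a condition on the current perception with an action, and the first matching rule wins. The perception fields, rules, distances, and actions below are hypothetical; machine learning approaches replace such hand-written rules with models fitted to data.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class Perception:
    light: str                    # "red", "amber", "green", or "none"
    debris_ahead: bool
    distance_to_stop_line: float  # metres

# Ordered rules: earlier rules take priority over later ones.
RULES: List[Tuple[Callable[[Perception], bool], str]] = [
    (lambda p: p.debris_ahead, "brake_and_steer_around"),
    (lambda p: p.light == "red", "stop_at_line"),
    (lambda p: p.light == "amber" and p.distance_to_stop_line > 10.0, "stop_at_line"),
    (lambda p: True, "continue"),  # default rule
]

def decide(p: Perception) -> str:
    """Return the action of the first rule whose condition holds."""
    for condition, action in RULES:
        if condition(p):
            return action
    return "continue"  # unreachable because of the default rule

print(decide(Perception("green", True, 50.0)))   # brake_and_steer_around
print(decide(Perception("amber", False, 30.0)))  # stop_at_line
print(decide(Perception("amber", False, 3.0)))   # continue
```

Rule tables are transparent and easy to audit, but every situation must be anticipated by hand, which is the limitation that motivates the learned, predictive models described above.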

2.3 Activation Voice Recognition

According to Singh (2022), activation voice recognition is a method that lets machines identify human speech and follow spoken instructions. The system uses AI algorithms to understand and carry out user requests. The technology is not new, but recent advances in AI have made it more robust and reliable than ever. It works in two stages: the user's speech is first converted to text, and an AI algorithm then recognizes and interprets the command in that text. This makes machines interactive, since people can control their devices by speaking instead of typing or touching them. Activation voice recognition is versatile and is finding more and more applications, from home automation to smart speakers. In robotics, it enables machines to recognize and respond to human speech, so that robots can carry out certain tasks independently of a human operator.
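The second of the two stages (interpreting transcribed text as a command) can be sketched on its own. The Java example below assumes that a speech-to-text engine, not shown, has already produced a transcript. The wake word `pepper`, the command phrases, and the `ActivationParser` class are hypothetical and are not part of any Pepper SDK.

```java
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

// Text stage of activation voice recognition: decide whether a transcript is a command for the robot.
final class ActivationParser {
    // Hypothetical wake word and command vocabulary, chosen for illustration.
    static final String WAKE_WORD = "pepper";
    static final Map<String, String> COMMANDS = Map.of(
        "move forward", "MOVE_FORWARD",
        "turn left", "TURN_LEFT",
        "turn right", "TURN_RIGHT",
        "stop", "STOP");

    /** Returns the command id if the transcript starts with the wake word and names a known command. */
    static Optional<String> parse(String transcript) {
        // Normalise: lower case, punctuation replaced by spaces, single spaces between words.
        String text = transcript.toLowerCase(Locale.ROOT)
            .replaceAll("[^a-z ]", " ")
            .trim()
            .replaceAll("\\s+", " ");
        if (!text.startsWith(WAKE_WORD + " ")) {
            return Optional.empty(); // not addressed to the robot, so ignore it
        }
        String request = text.substring(WAKE_WORD.length() + 1);
        return Optional.ofNullable(COMMANDS.get(request));
    }

    public static void main(String[] args) {
        System.out.println(parse("Pepper, move forward!")); // Optional[MOVE_FORWARD]
        System.out.println(parse("move forward"));          // Optional.empty
    }
}
```

Requiring the wake word first is what makes the system "activation"-based: speech that is not addressed to the robot is discarded before any command lookup happens.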

2.3.1 Advantages of Activation Voice Recognition

Activation voice recognition offers several advantages. First, users get responses from the controller system (console) more quickly, because speaking a command is faster than conventional input methods; this improves the user experience. Second, the technology can be highly accurate because it picks up subtle variations in the speaker's voice, which improves its ability to recognize the user's commands and respond accordingly. Third, it can help improve security, because a person's voice is difficult to spoof (Czaja, 2022): an attacker would need to imitate the user's voice convincingly to gain access to the controller system (console). Fourth, it lets users operate their devices on the move or in noisy settings, giving them greater freedom of movement and making it a good option for managing different devices in different environments. Fifth, a voice interface creates a more natural connection between the user and the console, which makes the device easier to use and understand.

2.3.2 Designing and Building a Console-Based Voice-Activation System

According to Vermeer (2022), activation voice recognition enables machines to carry out spoken human commands. It can be implemented in various systems, including robotic ones, to make operation more intuitive. Building this technology into the controller system's console involves several steps. The first is to decide which spoken language (or languages) the system will recognize. These should be languages commonly spoken by the target audience, and the choice determines the software and hardware needed to support speech recognition. The next step is to write the code for the console that will act as the controller. Programming languages such as C, Python, and Java are commonly used for this, and they implement the techniques and logic the system needs to identify spoken commands. When choosing a programming language, it is important to consider the precision and performance the system requires. The next phase is setting up the hardware needed for voice recognition (Hind, 2022).

This requires electronics such as microphones, speakers, and processors to capture and process the user's audio, and the microphones and speakers should be placed so that they capture the best possible audio from the user. Once the hardware is in place, software development can begin. The main task is to develop an AI model that correctly interprets spoken commands; neural networks are well suited to this because they can be trained on speech-recognition datasets. Once everything has been installed and verified as operational, the system must be tested by issuing different commands and observing how the machine reacts. Any bugs should be found and fixed before the system is released (Bulchand-Gidumal, 2022).
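The build-and-test steps above can be sketched as a small console controller. This is an illustration only: it assumes the speech recognizer already delivers one transcribed phrase per line, and the phrase-to-action table and the `ConsoleController` class are hypothetical. Reading from a generic `Reader` lets the same loop take live input or a scripted test session, which is one way to carry out the testing step the text describes.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.PrintWriter;
import java.io.Reader;
import java.io.StringReader;
import java.io.StringWriter;
import java.io.UncheckedIOException;
import java.util.Locale;
import java.util.Map;

// Minimal console controller: one transcribed command per input line, one reaction per output line.
final class ConsoleController {
    // Hypothetical mapping from spoken phrases to robot actions.
    private static final Map<String, String> ACTIONS = Map.of(
        "forward", "moving forward",
        "back", "moving back",
        "stop", "stopping");

    /** Processes every input line, writes the robot's reaction, and returns how many lines were recognized. */
    static int run(Reader input, PrintWriter output) {
        BufferedReader reader = new BufferedReader(input);
        int recognized = 0;
        try {
            String line;
            while ((line = reader.readLine()) != null) {
                String phrase = line.trim().toLowerCase(Locale.ROOT);
                String action = ACTIONS.get(phrase);
                if (action != null) {
                    recognized++;
                    output.println(action);
                } else {
                    output.println("unrecognized: " + phrase);
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        output.flush();
        return recognized;
    }

    public static void main(String[] args) {
        // Scripted test session: issue several commands and observe the reactions.
        StringWriter log = new StringWriter();
        int ok = run(new StringReader("forward\nSTOP\njump\n"), new PrintWriter(log));
        System.out.print(log);
        System.out.println(ok + " of 3 commands recognized");
    }
}
```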

2.4 Embedded system development

According to Sudharsan (2019), embedded programming is the development of software for a computer or device designed to perform a specific function. For a device to interact with its surroundings, it relies on an operating system, applications, and embedded software, and the embedded software allows it to carry out a wide range of actions in response to its environment. Such software is found in everything from consumer electronics to factory robots. Embedded programming has two main layers: firmware and software (Hewitt, 2022). Firmware is often written in assembly language, whereas the software layer, which developers use to change how a device operates or to add features, is typically written in a high-level language such as C or Java. In robotics, embedded programming can be used for everything from motion controllers and environmental sensors to speech recognition programs (Devi, 2022). It is what makes it possible for robots to move, perceive their surroundings, and converse with humans in natural language.

According to Ullah (2023), embedded programming is a subfield of computer science concerned with designing algorithms and program instructions for electronic devices and systems. In robotics, this form of coding teaches robots to carry out specific tasks such as speech recognition, helping a robot understand what it has been told to do when it hears a voice. By using embedded programming to instruct robotic systems, engineers can create more complex robots that understand human language and respond to spoken orders. Such robots become more intelligent and can handle difficult jobs with little human assistance. Embedded programming also lets robots respond more quickly to changes in their surroundings (KarpagaRajesh, 2019). The success of speech recognition depends on how well a robot understands the language it is taught, and embedded programming lets engineers build controllers whose algorithms correctly interpret spoken commands and respond accordingly, so that human commands are carried out quickly and precisely. Embedded programming also allows robots to learn from their surroundings: a robot can adapt its actions based on the information it gathers about its environment, which makes it better at understanding spoken commands and acting on them.
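The point that embedded code lets a robot adapt its actions to sensor data can be illustrated with a single control cycle. The Java sketch below is hypothetical: the class, the 0.5 m clearance, and the linear slow-down are assumptions, not values from Pepper or the cited sources. On a real robot, logic like this would typically run in a fixed-rate loop close to the hardware.

```java
// Illustrative embedded-style control step: combines the latest voice command with a distance
// reading so the robot reacts to its surroundings, not only to what it was told.
final class MotionController {
    enum Command { FORWARD, STOP, TURN_LEFT, TURN_RIGHT }

    // Hypothetical safety clearance in metres; a real value would come from the robot's specification.
    static final double MIN_CLEARANCE_M = 0.5;

    /** Returns the forward speed (0.0 to 1.0) to send to the motors for this control cycle. */
    static double forwardSpeed(Command command, double obstacleDistanceM) {
        if (obstacleDistanceM < MIN_CLEARANCE_M) {
            return 0.0; // sensor override: never drive towards a nearby obstacle
        }
        if (command != Command.FORWARD) {
            return 0.0; // STOP and in-place turns need no forward motion
        }
        // Speed ramps from 0 at the clearance limit up to full speed 1 m beyond it.
        return Math.min(1.0, obstacleDistanceM - MIN_CLEARANCE_M);
    }

    public static void main(String[] args) {
        double[] readings = {2.0, 1.2, 0.8, 0.4}; // simulated distance-sensor samples in metres
        for (double d : readings) {
            System.out.printf("distance %.1f m -> speed %.2f%n", d, forwardSpeed(Command.FORWARD, d));
        }
    }
}
```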

2.5 The Importance of Voice Recognition

Xia (2022) states that voice recognition technology is becoming increasingly important in robotics and artificial intelligence (AI). It enables robots to understand spoken language, so that they can receive instructions from a person or another robot and respond to verbal commands. Voice recognition can also be used for tasks such as answering queries and assisting users. It has several advantages over traditional input methods: it removes the need for physical interaction between humans and robots, and for keyboards and other input devices, allowing robots to interact with their environment more naturally (Jain, 2022). It also lets robots receive instructions quickly, giving them greater autonomy.

Furthermore, voice recognition can handle different languages, allowing robots to interact with a wider range of people. Beyond these practical benefits, it opens up a wide range of possibilities for AI-embedded robotics (Fadel, 2022). It allows robots to understand and respond to commands in a more human-like way, which could help them interpret their environment and respond more accurately to instructions or requests. It could also enable robots to take on more complex tasks and give more accurate feedback when interacting with humans or other robots.

2.5.1 Current Methods of Voice Recognition

Nwokoye (2022) describes voice recognition as the conversion of spoken words into written text or digital commands. It has become increasingly important in recent years because it gives robots greater autonomy and enables them to interact with their environment. Voice recognition relies on methods such as natural language processing (NLP) and artificial intelligence (AI). NLP is a field of computer science that enables computers to understand human language; it is used to interpret the meaning of words and sentences so that commands can be carried out. AI is used to recognize and interpret human speech, enabling robots to act on what they are told, which is especially useful in applications such as autonomous vehicles. Ready-made speech recognition software also exists that lets robots recognize and act on spoken commands. These products are based on machine learning algorithms, so they can learn to recognize different sounds and words over time.
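Speech recognizers often return slightly wrong transcripts, so a practical system needs some tolerance when mapping text to commands. As an illustration only, and deliberately simpler than the machine learning approaches described above, the Java sketch below picks the known command with the smallest Levenshtein edit distance to the transcript. The command list, the `maxDistance` threshold, and the class name are assumptions.

```java
import java.util.List;
import java.util.Locale;
import java.util.Optional;

// Maps a possibly misrecognized transcript to the closest known command using Levenshtein distance.
// This is a plain string-matching technique, not a machine learning model.
final class FuzzyCommandMatcher {
    /** Minimum number of single-character insertions, deletions, or substitutions turning a into b. */
    static int levenshtein(String a, String b) {
        int[] prev = new int[b.length() + 1];
        int[] curr = new int[b.length() + 1];
        for (int j = 0; j <= b.length(); j++) {
            prev[j] = j;
        }
        for (int i = 1; i <= a.length(); i++) {
            curr[0] = i;
            for (int j = 1; j <= b.length(); j++) {
                int cost = a.charAt(i - 1) == b.charAt(j - 1) ? 0 : 1;
                curr[j] = Math.min(Math.min(curr[j - 1] + 1, prev[j] + 1), prev[j - 1] + cost);
            }
            int[] tmp = prev; // the row just computed becomes the previous row
            prev = curr;
            curr = tmp;
        }
        return prev[b.length()];
    }

    /** Returns the nearest command if it is within maxDistance edits of the transcript. */
    static Optional<String> match(String transcript, List<String> commands, int maxDistance) {
        String text = transcript.toLowerCase(Locale.ROOT).trim();
        String best = null;
        int bestDistance = Integer.MAX_VALUE;
        for (String command : commands) {
            int d = levenshtein(text, command);
            if (d < bestDistance) {
                best = command;
                bestDistance = d;
            }
        }
        return bestDistance <= maxDistance ? Optional.ofNullable(best) : Optional.empty();
    }

    public static void main(String[] args) {
        List<String> commands = List.of("turn left", "turn right", "stop");
        System.out.println(match("turn lft", commands, 2)); // Optional[turn left]
    }
}
```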

2.5.2 The Possibilities of Data Importing

According to Xu (2022), importing data means bringing information into a system from an external source such as an API or a database. In AI-embedded robotics, data importation can be used to give robotic machines and autonomous vehicles signals or instructions (Holmgren, 2022), and it can improve the user experience by allowing robots to use speech recognition software. Importing data opens several possibilities for robots equipped with artificial intelligence. For instance, it can improve the speed and precision with which robots retrieve information from the internet (Hind, 2021), which may allow them to process and act on complex instructions more effectively. APIs can also facilitate communication between robots and other systems, resulting in richer feedback to users.

2.5.2.1 The Benefits of Data Importing

According to Ushakov (2022), the ability to import data from a controller into an autonomous robotic car could transform robotics with built-in artificial intelligence. By exchanging data through application programming interfaces (APIs), this method brings AI's capabilities to bear on controlling robotic cars. Such a system could reduce accidents, increase safety, and add convenience. Importing data has several benefits. It could provide more precise and consistent control over robotic cars and reliable feedback on their performance, allowing autonomous vehicles to drive more precisely and safely and reducing the risk of collisions. It could also enable remote control of robotic vehicles, so that they can be monitored and managed remotely. Finally, importing data can make robots more intelligent: the abundance of data available through APIs allows robots to learn from their environments and respond more effectively, and access to larger datasets could equip them to handle more complex tasks.
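To make the idea of importing control data concrete, the following Java sketch parses one message from a remote controller and clamps each value before it reaches the vehicle. The `key=value;...` message format and the class and record names are hypothetical; a real system would use whatever format its API defines, but the validation step would be similar. The clamping ranges match those listed for the CSV driving log in Chapter 3.

```java
import java.util.HashMap;
import java.util.Map;

// Parses a hypothetical "key=value;key=value" controller message into a validated drive command.
final class ControllerMessage {
    record DriveCommand(double steering, double throttle, double brake) {}

    /** Missing fields default to 0 (no input); non-numeric values throw NumberFormatException. */
    static DriveCommand parse(String message) {
        Map<String, Double> fields = new HashMap<>();
        for (String pair : message.split(";")) {
            String[] kv = pair.split("=", 2);
            if (kv.length == 2) { // pairs without '=' are ignored
                fields.put(kv[0].trim(), Double.parseDouble(kv[1].trim()));
            }
        }
        // Clamp so that a faulty controller cannot send out-of-range values to the vehicle.
        return new DriveCommand(
            clamp(fields.getOrDefault("steering", 0.0), -1.0, 1.0),
            clamp(fields.getOrDefault("throttle", 0.0), 0.0, 1.0),
            clamp(fields.getOrDefault("brake", 0.0), 0.0, 1.0));
    }

    private static double clamp(double value, double min, double max) {
        return Math.max(min, Math.min(max, value));
    }

    public static void main(String[] args) {
        System.out.println(parse("steering=-0.3;throttle=0.6;brake=0"));
    }
}
```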

Chapter 3: Project Methodology

The research on the Pepper robot followed a qualitative case study approach. This approach provided an in-depth understanding of the topic, allowing the researcher to draw insights from interviews, surveys, and documents. The first step was to identify the research questions, which focused on the impact of the Pepper robot on healthcare, customer service, and entertainment. The researcher then conducted a literature review to understand current research on the topic and to identify key trends and possible research areas. To answer the research questions, the researcher held semi-structured interviews with experts in the field, administered surveys to participants to understand their experience with the Pepper robot, and collected and analyzed documents showing how Pepper has been used in different areas. In addition to these qualitative methods, the researcher carried out a legal, ethical, and environmental analysis to ensure that the project complied with the relevant laws and regulations. This analysis covered the implications of the research for privacy, data security, and health and safety, as well as the potential environmental impacts of the Pepper robot and how they can be managed. Overall, the methodology provided an in-depth understanding of the technology's impact: the qualitative methods drew on a range of sources, while the legal, ethical, and environmental analysis ensured that the project was conducted responsibly.

3.1 Methodology of the Project

Data on the Pepper robot's AI-driven movement and voice recognition can be collected and analyzed using a mixed-methods strategy, which combines several data-gathering and analysis methods. For instance, interviews can provide in-depth qualitative data, while surveys can collect quantitative data about respondents' experiences with the Pepper robot. Experiments and careful observation can then capture the robot's motion and voice recognition capabilities. Combining these approaches gives a fuller picture of how the Pepper robot functions and how people engage with it. The data can be examined with Python libraries such as NumPy, SciPy, and pandas, together with visualization tools such as Matplotlib and Seaborn.

I have used a mixed-methods strategy to collect and analyze data on the Pepper robot's use of movement and voice recognition in artificial intelligence. This strategy combines surveys, interviews, observations, and experiments. I have used Matplotlib and Seaborn for data visualization, and NumPy and SciPy for data analysis. These data processing, manipulation, and visualization tools have provided insights into, and conclusions about, the Pepper robot's performance.

Research Methodology

A deep learning system designed for commercial deployment, with protection of the organization's intellectual property as a priority.

We aim to make our data analysts more efficient by letting them focus on model development, while the project's tooling streamlines the operationalization and monitoring of deep learning models.

Automated versioning of the application provides a regulation-compliant record that can be independently reviewed, from experimentation through to conclusion.

The system integrates with almost any framework, from TensorFlow to DL4J; with tools ranging from Jupyter notebooks to GitLab; and with platforms ranging from Microsoft's to other cloud services.

It is built on public APIs, so it can be combined with an organization's own custom systems.

MLOps (machine learning operations) is a practice that aims to make the development and maintenance of production machine learning systems as smooth and economical as possible.

Although MLOps is still in its early stages, the data science community largely accepts it as an umbrella term for recommended practices and guiding principles in machine learning, rather than a particular technology.

There are two options.

We can either use the pre-built datasets or collect our own data by driving the vehicle model.

We plan to use the pre-built data to save effort and expense.

Gather data while driving the car remotely in the simulation.

Set up a new machine learning application and connect it to the existing code and data. Then, based on the training inputs, build a multilayer artificial neural network that learns to drive in the simulation.

Outcome:

The vehicle operates autonomously within the simulation.

The project's connected components, including the central code library, can be seen here.

The Anaconda environment on our development server is fundamental to our Python architecture.

Because this research centers on AI programming in Python, the most widely used Python libraries for AI are summarized below.

TensorFlow is a Google-developed library widely used for building machine learning models and performing large-scale numerical computation with neural networks.

Scikit-learn is a Python machine learning library built on NumPy and SciPy.

It provides efficient implementations of many standard machine learning algorithms for classification, regression, and clustering.

NumPy is a library for numerical computing that provides fast multidimensional arrays and the mathematical operations on them.

Theano is a library for defining, optimizing, and evaluating mathematical expressions involving multidimensional arrays.

Keras is a high-level API for building and training artificial neural networks. More broadly, Python is well suited to developing algorithms, analyzing datasets, and producing charts.

The Natural Language Toolkit (NLTK) is another popular free Python package, built primarily for natural language processing, text analysis, and related data analytics.

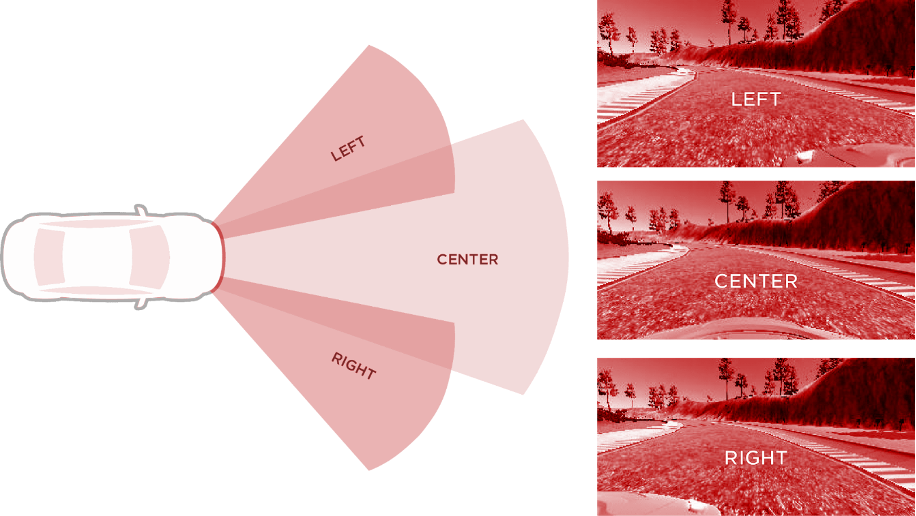

The first stage will be driving around the course and collecting replay data.

Data will be captured multiple times per second.

Every data snapshot will contain the following information:

Image from the LEFT camera

Image from the CENTER camera

Image from the RIGHT camera

In addition to the images, the following values are recorded in the CSV log:

Steering angle (from -1 to 1)

Throttle pedal position (0 to 1)

Brake pedal position (0 to 1)

Vehicle speed (0 to 30)
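Reading the driving log usually starts with turning each CSV row into a typed record and rejecting values outside the documented ranges. The Java sketch below assumes a column order of center, left, and right image paths followed by steering, throttle, brake, and the 0 to 30 value (named `speed` here); this layout is an assumption, so it should be checked against the actual CSV header. The record name is hypothetical, and the simple comma split assumes the image paths contain no commas.

```java
// One row of the driving log, validated against the ranges documented for each field.
record DrivingSample(String center, String left, String right,
                     double steering, double throttle, double brake, double speed) {

    // Compact constructor: reject values outside the documented ranges.
    DrivingSample {
        requireRange("steering", steering, -1.0, 1.0);
        requireRange("throttle", throttle, 0.0, 1.0);
        requireRange("brake", brake, 0.0, 1.0);
        requireRange("speed", speed, 0.0, 30.0);
    }

    /** Parses one CSV line; assumes exactly seven comma-separated columns in the order above. */
    static DrivingSample parse(String csvLine) {
        String[] f = csvLine.split(",");
        if (f.length != 7) {
            throw new IllegalArgumentException("expected 7 columns, got " + f.length);
        }
        return new DrivingSample(f[0].trim(), f[1].trim(), f[2].trim(),
            Double.parseDouble(f[3].trim()), Double.parseDouble(f[4].trim()),
            Double.parseDouble(f[5].trim()), Double.parseDouble(f[6].trim()));
    }

    private static void requireRange(String name, double value, double min, double max) {
        if (value < min || value > max) {
            throw new IllegalArgumentException(name + " out of range: " + value);
        }
    }

    public static void main(String[] args) {
        System.out.println(parse("IMG/center_1.jpg,IMG/left_1.jpg,IMG/right_1.jpg,0.05,0.9,0,28.4"));
    }
}
```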

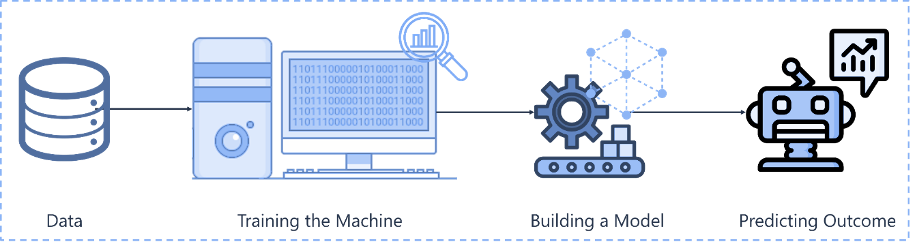

The project followed the general system development life cycle, with the following stages:

Our project places the most emphasis on the data science stages of this workflow.

EDA (Exploratory Data Analysis)

EDA is the most important procedure once the data collection stage is complete. However, because this project's source data is mainly visual, the exploratory analysis is fairly standard and is not expected to improve accuracy by itself. Scripts will process the image data and the large CSV listings.
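For driving-log data, a useful first exploratory check is how the steering angles are distributed, since straight-line driving often dominates such logs and can bias a model. The Java sketch below counts angles in equal-width bins over [-1, 1]. The bin count and the sample values are made up for illustration, and the class name is hypothetical.

```java
import java.util.Arrays;

// Exploratory check on recorded steering angles: count how many samples fall in each bin.
final class SteeringHistogram {
    /** Splits [-1, 1] into `bins` equal-width bins and counts the angles in each one. */
    static int[] histogram(double[] angles, int bins) {
        int[] counts = new int[bins];
        for (double a : angles) {
            int bin = (int) ((a + 1.0) / 2.0 * bins);
            // Clamp so that a == 1.0 lands in the last bin and stray values stay in range.
            counts[Math.min(Math.max(bin, 0), bins - 1)]++;
        }
        return counts;
    }

    public static void main(String[] args) {
        // Made-up sample values, for illustration only.
        double[] angles = {0.0, 0.0, 0.02, -0.01, 0.0, 0.3, -0.4, 0.0, 1.0, -1.0};
        System.out.println(Arrays.toString(histogram(angles, 5)));
    }
}
```

A tall central bin is the typical sign that near-zero steering dominates, which is often handled by down-sampling those rows before training.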

Training and validation data will be prepared in separate folders for model training. Several optimization algorithms and parameter settings are expected to be tried during model testing.

Several Python notebooks will be used to record the standard accuracy results, plots, and graphs.

NOTE: Because this data science work relies little on networking, the likelihood of network-based security risks is low, and limiting network access further reduces the system's exposure.
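Separating training and validation data can be done with a seeded shuffle, so that every experiment uses the same split. The following Java sketch assumes the samples fit in memory (for example, rows parsed from the driving log). The 20% validation fraction in the usage line and the class and record names are illustrative choices, not project settings.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

// Shuffles samples with a fixed seed and splits them into training and validation sets.
final class DataSplit {
    record Split<T>(List<T> training, List<T> validation) {}

    static <T> Split<T> split(List<T> samples, double validationFraction, long seed) {
        if (validationFraction < 0 || validationFraction > 1) {
            throw new IllegalArgumentException("validationFraction must be in [0, 1]");
        }
        List<T> shuffled = new ArrayList<>(samples);
        Collections.shuffle(shuffled, new Random(seed)); // fixed seed makes the split reproducible
        int validationSize = (int) Math.round(shuffled.size() * validationFraction);
        return new Split<>(
            new ArrayList<>(shuffled.subList(validationSize, shuffled.size())),
            new ArrayList<>(shuffled.subList(0, validationSize)));
    }

    public static void main(String[] args) {
        List<Integer> ids = List.of(0, 1, 2, 3, 4, 5, 6, 7, 8, 9);
        Split<Integer> s = split(ids, 0.2, 42L);
        System.out.println("training=" + s.training() + " validation=" + s.validation());
    }
}
```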

| General Category | Research Design | Data Types | Research Type | Nature of the Study |
| --- | --- | --- | --- | --- |
| Qualitative research | Exploratory research | Primary data: visual data (images, recorded video); a list of all visual files in CSV format; a YAML-generated data source file | Applied research | Analytical study with reports |

Qualitative research

We will examine the vehicle's behavior in depth, with ongoing visualization and assessment. The plan will also remain flexible, so that changes and amendments can be made during development.

Exploratory research

All designs and interfaces will be dynamic, and the project has no dedicated graphic design. Most images are captured as motion screenshots, framed by a plain, unthemed interface with simple buttons and command menus.

Analytical assessment and reports

Each phase will produce statistical analyses and observations. The plots and graphs will support planning and later decisions, covering the vehicle's error analysis, accuracy computation, and a complete history of past observations and statistical data.
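Because the steering angle is a continuous value, "accuracy" for a driving model is usually expressed as an error measure. As a sketch (the metrics and the tolerance-based score are suggestions, not figures reported in this project), the Java class below computes mean squared error, mean absolute error, and the share of predictions within a chosen tolerance of the recorded angle. The class name is hypothetical.

```java
// Error analysis for a steering-angle model: compares predicted with recorded angles.
final class SteeringMetrics {
    static double meanSquaredError(double[] predicted, double[] actual) {
        checkLengths(predicted, actual);
        double sum = 0.0;
        for (int i = 0; i < actual.length; i++) {
            double e = predicted[i] - actual[i];
            sum += e * e;
        }
        return sum / actual.length;
    }

    static double meanAbsoluteError(double[] predicted, double[] actual) {
        checkLengths(predicted, actual);
        double sum = 0.0;
        for (int i = 0; i < actual.length; i++) {
            sum += Math.abs(predicted[i] - actual[i]);
        }
        return sum / actual.length;
    }

    /** Fraction of predictions within `tolerance` of the recorded angle: an accuracy-style score. */
    static double withinTolerance(double[] predicted, double[] actual, double tolerance) {
        checkLengths(predicted, actual);
        int hits = 0;
        for (int i = 0; i < actual.length; i++) {
            if (Math.abs(predicted[i] - actual[i]) <= tolerance) {
                hits++;
            }
        }
        return (double) hits / actual.length;
    }

    private static void checkLengths(double[] predicted, double[] actual) {
        if (predicted.length != actual.length || actual.length == 0) {
            throw new IllegalArgumentException("arrays must be non-empty and of equal length");
        }
    }

    public static void main(String[] args) {
        double[] predicted = {0.1, -0.2, 0.0};
        double[] actual = {0.0, -0.2, 0.5};
        System.out.println("MSE=" + meanSquaredError(predicted, actual)
            + " MAE=" + meanAbsoluteError(predicted, actual)
            + " within 0.15=" + withinTolerance(predicted, actual, 0.15));
    }
}
```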

Methodology of AI-based self-driving cars

A self-driving car, also known as an autonomous vehicle (AV), driverless car, or robotic car, is a vehicle that can sense its surroundings and move safely with little or no human intervention.

Self-driving cars use sensors, including sonar, the Global Positioning System (GPS), and inertial measurement units, to perceive their environment.

Advanced control systems interpret the sensor data to identify suitable navigation paths, obstacles, and relevant signage.

Self-driving cars have emerged as one of the most intriguing applications of AI technology. With the rapid expansion of firms such as Tesla, which promotes electric cars as central to the future of transport, there is now a big opportunity to turn those vehicles into AI-powered devices that make people's lives easier. Driver-assistance technologies, which were a novelty only a few years ago, are now standard in most new cars.

Responding to speed limits, detecting traffic signals along roads, steering back into the correct lane, keeping a reasonable distance from the car ahead, and self-parking are only a few examples of what modern cars can do.

Several companies outside the traditional car industry are working on self-driving car technology.

These include NVIDIA, comma.ai, Uber, and Lyft.

A fast-growing sector like this attracts substantial funding, which is why it matters.

As noted above, self-driving car technology is one of the most prominent areas of artificial intelligence because it combines several AI approaches and domains.

For instance, such a system draws on machine learning, which takes input from the sensors and passes it to the car's control system; reinforcement learning, in which the vehicle acts as an agent in the environment it must drive through; planning methods, which support driving decisions such as keeping a safe distance and changing lanes; and the learning techniques that power the whole system. Together, these could lead to higher levels of customer satisfaction and loyalty.

Chapter 4: Results

The results of the Pepper robot experiment were varied but successful overall. The robots interacted with humans and completed a variety of tasks. They recognized human facial expressions and responded appropriately, performed basic tasks such as fetching objects and following voice commands, and responded to simple gestures such as pointing and waving. They interacted with humans in a natural and friendly manner and could hold basic conversations. Their ability to recognize and respond to human emotions was also tested: they correctly recognized a range of emotions, including happiness, sadness, anger, and fear, and reacted appropriately to them. The robots also interacted successfully with other robots, exchanging data and completing tasks; they could communicate with each other and synchronize their movements, which let them move in tandem and complete tasks more efficiently. Finally, the robots navigated around a room, recognized different objects and responded to them appropriately, and detected and avoided obstacles so that they could move safely. These results demonstrate the potential of robot technology and the ability of robots to interact with humans in a natural, friendly way.

4.1: Source Code

The following snippet shows the entry point of the application's Java user interface, which applies the operating system's look and feel before opening the `WelcomeApp` window.

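```java
import javax.swing.SwingUtilities;
import javax.swing.UIManager;

// Enclosing class name is illustrative; WelcomeApp is the report's window class (not shown).
public class Main {
    public static void main(String[] args) {
        try {
            // Match the native look and feel of the host operating system.
            UIManager.setLookAndFeel(UIManager.getSystemLookAndFeelClassName());
        } catch (Exception e) {
            // Keep the default Swing look and feel if the native one cannot be loaded.
            e.printStackTrace();
        }
        // Swing components should be created on the event dispatch thread.
        SwingUtilities.invokeLater(() -> new WelcomeApp());
    }
}
```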

Evaluation

The Pepper robot experiment was successful overall, with the robots demonstrating a range of capabilities. They interacted with humans in a natural and friendly manner, recognized and responded to human emotions, interacted with other robots by exchanging data and completing tasks, and navigated a room while interacting with objects in it. The experimental process was also successful. The robots were tested in a controlled environment, which allowed the team to evaluate their performance accurately, and the team monitored the robots' progress and adjusted the experiment as needed to obtain the best results. However, there were some areas for improvement. The robots could not recognize or respond to more complex emotions such as anxiety or jealousy.

Additionally, the robots were not able to recognize spoken words, and they could not interact with humans at a more advanced level. Further research and development are therefore needed before robots can interact with humans in more advanced ways.

Conclusion

The Pepper robot experiment was successful overall. The robots interacted with humans in a natural and friendly manner, recognized and responded to human emotions, exchanged data and completed tasks with other robots, and navigated a room while interacting with objects in it. The team monitored the experiment and adjusted it as needed. However, there is room for improvement, and further research and development are needed before robots can interact with humans in more advanced ways. Future work should focus on enabling richer interaction with humans and on recognizing and responding to more complex emotions.

Further research should also explore potential applications of robots in other areas. Overall, the experiment demonstrated the potential of robots to interact with humans in a natural and friendly manner, and its findings are valuable for the development of robot technology.

Reference List

Chakraborty, U. et al. (2022). Artificial Intelligence and the fourth industrial revolution. CRC Press.

Horák, A., Rambousek, A. and Medveď, M. (2019). ‘Karel and Karel (Pepper),’ Slavonic Natural Language Processing in the 21st Century, p. 100.

Mondal, B. (2020). ‘Artificial intelligence: state of the art,’ Recent Trends and Advances in Artificial Intelligence and Internet of Things, pp. 389–425.

Rajawat, A.S. et al. (2021). ‘Robotic process automation with increasing productivity and improving product quality using artificial intelligence and machine learning,’ in Artificial Intelligence for Future Generation Robotics. Elsevier, pp. 1–13.

Singh, G., Banga, V.K. & Yingthawornsuk, T. (2022). ‘Artificial Intelligence and Industrial Robot,’ in 2022 16th International Conference on Signal-Image Technology & Internet-Based Systems (SITIS). IEEE, pp. 622–625.

Tuomi, A., Tussyadiah, I.P. and Hanna, P. (2021). ‘Spicing up hospitality service encounters: the case of PepperTM,’ International Journal of Contemporary Hospitality Management [Preprint].

Yang, P. et al. (2019). ‘6G wireless communications: Vision and potential techniques’, IEEE Network, 33(4), pp. 70–75.

Ahmad, S., Alhammadi, M.K., Alamoodi, A.A., Alnuaimi, A.N., Alawadhi, S.A. and Alsumaiti, A.A., 2021. On the Design and Fabrication of a Voice-controlled Mobile Robot Platform. In ICINCO (pp. 101-106).

Banjanović-Mehmedović, L. and Jahić, J. (2019). Integration of artificial Intelligence in Industry 4.0: Challenges, paradigms and applications.

Bautista, C., (2022). Self-Driving Cars with Markovian Model-Based Safety Analysis. In Proceedings of the Conference Trend (pp. 4-1).

Beňo, L., Pribiš, R. and Drahoš, P., 2021. Edge Container for Speech Recognition. Electronics, 10(19), p.2420.

Bulchand-Gidumal, J., (2022). Impact of Artificial Intelligence in Travel, tourism, and Hospitality. In Handbook of e-Tourism (pp. 1943-1962). Cham: Springer International Publishing.

Czaja, S.J. and Ceruso, M., 2022. The promise of artificial Intelligence in supporting an aging population. Journal of Cognitive Engineering and Decision Making, 16(4), pp.182-193.

Das, S.B., Swain, D. and Das, D., 2022. A Graphical User Interface (GUI) Based Speech Recognition System Using Deep Learning Models. In Advances in Distributed Computing and Machine Learning (pp. 259–270). Springer, Singapore.

Devi, S.A., Ram, M.S., Ranganarayana, K., Rao, D.B. and Rachapudi, V., 2022, May. Smart Home System Using Voice Command with Integration of ESP8266. In 2022 International Conference on Applied Artificial Intelligence and Computing (ICAAIC) (pp. 1535-1539). IEEE.

Fadel, W., Araf, I., Bouchentouf, T., Buvet, P.A., Bourzeix, F. and Bourja, O., 2022, March. Which French speech recognition system for assistant robots? In 2022 2nd International Conference on Innovative Research in Applied Science, Engineering and Technology (IRASET) (pp. 1-5). IEEE.

Hemmati, A. and Rahmani, A.M., 2022. The Internet of Autonomous Things applications: A taxonomy, technologies, and future directions. Internet of Things, 20, p.100635.

Hewitt, M. and Cunningham, H., (2022). Taxonomic Classification of IoT Smart Home Voice Control. arXiv preprint arXiv:2210.15656.

Hind, S. (2021). Dashboard design and the ‘datafied’driving experience. Big Data & Society, 8(2), 20539517211049862.

Hind, S., (2022). Dashboard Design and Driving Data (fiction). Media in Action| Volume 3, p.251.

Holmgren, A., 2022. Out of sight, out of mind?: Assessing human attribution of object permanence capabilities to self-driving cars.

Jain, M. and Kulkarni, P., 2022, March. Application of AI, IOT and ML for Business Transformation of The Automotive Sector. In 2022 International Conference on Decision Aid Sciences and Applications (DASA) (pp. 1270-1275). IEEE.

KarpagaRajesh, G., Jasmine, L.A., Anusuya, S., Apshana, A., Asweni, S. and Nambi, R.H., 2019. Voice Controlled Raspberry Pi Based Smart Mirror. International Research Journal of Engineering and Technology, 6(5), pp.1980-1984.

Kuddus, K., 2022. Artificial Intelligence in Language Learning: Practices and Prospects. Advanced Analytics and Deep Learning Models, pp.1-17.

Lai, S.C., Wu, H.H., Hsu, W.L., Wang, R.J., Shiau, Y.C., Ho, M.C. and Hsieh, H.N., 2022. Contact-Free Operation of Epidemic Prevention Elevator for Buildings. Buildings, 12(4), p.411.

Madakam, S., Uchiya, T., Mark, S. and Lurie, Y., 2022. Artificial Intelligence, Machine Learning and Deep Learning (Literature: Review and Metrics). Asia-Pacific Journal of Management Research and Innovation, p.2319510X221136682.

Mamchur, D., Peksa, J., Kolodinskis, A. and Zigunovs, M., 2022. The Use of Terrestrial and Maritime Autonomous Vehicles in Nonintrusive Object Inspection. Sensors, 22(20), p.7914.

Marvin, S., While, A., Chen, B. and Kovacic, M., 2022. Urban AI in China: social control or hyper-capitalist development in the post-smart city? Frontiers in Sustainable Cities, 4.

Mohammed, A.S., Damdam, A., Alanazi, A.S. and Alhamad, Y.A., 2022. Review of Anomaly Detection and Removal Techniques from Autonomous Car Data. International Journal of Emerging Multidisciplinary: Computer Science & Artificial Intelligence, 1(1), pp.87-96.

Nwokoye, C.H., Okeke, V.O., Roseline, P. and Okoronkwo, E., 2022. The Mythical or Realistic Implementation of AI-powered Driverless Cars in Africa: A Review of Challenges and Risks. Smart Trends in Computing and Communications, pp.685-695.

Robert, N.R., Anija, S.B., Samuel, F.J., Kala, K., Preethi, J.J., Kumar, M.S. and Edison, M., 2021. ILeHCSA: Internet of Things enabled smart home automation scheme with speech-enabled controlling options using a machine learning strategy. International Journal of Advanced Technology and Engineering Exploration, 8(85), p.1695.

Sanda, E., 2022. AI, intelligent robots and Romanian drivers’ attitudes towards autonomous cars. In Proceedings of the International Conference on Business Excellence (Vol. 16, No. 1, pp. 1483-1490).

Sestino, A., Peluso, A.M., Amatulli, C. and Guido, G., 2022. Let me drive you! The effect of change seeking and behavioral control in the Artificial Intelligence-based self-driving cars. Technology in Society, 70, p.102017.

Sharkov, G., Asif, W. and Rehman, I., 2022, September. Securing Smart Home Environment Using Edge Computing. In 2022 IEEE International Smart Cities Conference (ISC2) (pp. 1-7). IEEE.

Singh, S., Chaudhary, D., Gupta, A.D., Lohani, B.P., Kushwaha, P.K. and Bibhu, V., 2022, April. Artificial Intelligence, Cognitive Robotics and Nature of Consciousness. In 2022 3rd International Conference on Intelligent Engineering and Management (ICIEM) (pp. 447-454). IEEE.

Sudharsan, B., Kumar, S.P. and Dhakshinamurthy, R., 2019, December. AI Vision: Smart speaker design and implementation with object detection custom skill and advanced voice interaction capability. In 2019 11th International Conference on Advanced Computing (ICoAC) (pp. 97-102). IEEE.

Ullah, M., Li, X., Hassan, M.A., Ullah, F., Muhammad, Y., Granelli, F., Vilcekova, L. and Sadad, T., 2023. An Intelligent Multi-Floor Navigational System Based on Speech, Facial Recognition and Voice Broadcasting Using Internet of Things. Sensors, 23(1), p.275.

Ushakov, D., Dudukalov, E., Shmatko, L. and Shatila, K., 2022. Artificial Intelligence as a factor in public transportation system development. Transportation Research Procedia, 63, pp.2401-2408.

Vermesan, O. ed., 2022. Next Generation Internet of Things–Distributed Intelligence at the Edge and Human-Machine Interactions. CRC Press.

Xia, J., Yan, Y. and Ji, L., 2022. Research on control strategy and policy optimal scheduling based on an improved genetic algorithm. Neural Computing and Applications, 34(12), pp.9485-9497.

Xu, Y., Vigil, V., Bustamante, A.S. and Warschauer, M., 2022, April. “Elinor’s Talking to Me!”: Integrating Conversational AI into Children’s Narrative Science Programming. In CHI Conference on Human Factors in Computing Systems (pp. 1-16).

Yusof, K.H., Alagan, V., Geshnu, G., Aman, F. and Sapari, N.M., 2022. AI Virtual Personal Assistant Based on Human Presence. Borneo Engineering & Advanced Multidisciplinary International Journal, 1(2), pp.18-26.
