1.0 Knowledge Building

The COVID-19 outbreak in the United Kingdom has had substantial socioeconomic effects on the community, and these must be acknowledged to motivate rapid government action. School closures, movement restrictions, bans on social gatherings, and limits on economic activity have all affected socioeconomic life in the United Kingdom, causing stresses such as lost income and higher food insecurity. Understanding the financial implications of the COVID-19 epidemic and identifying the most vulnerable populations is crucial, because evidence-based policies and programs can be built on this understanding (Gabry et al., 2019). However, a lack of up-to-date macroeconomic data hampers efforts to allocate resources wisely, for example when launching social assistance programs. Timely and frequent macroeconomic data are therefore necessary to design evidence-based interventions and to help minimize the impacts of the COVID-19 crisis.

The purpose of the COVID-19 Response Team Phone Survey is to fill macroeconomic data gaps while providing early evidence to motivate targeted policy and institutional initiatives. Face-to-face surveys are no longer practical during the COVID-19 pandemic because of the risk of transmission and the restrictions on mobility. Telephone surveys, on the other hand, are well suited to collecting data quickly and regularly (Goddard et al., 2018). The World Economic Forum, in collaboration with the U.K. statistical office, the United Nations Special Coordinator for Refugees, and investigators from the University of Southern California, San Francisco, is surveying U.K. households, internally displaced households, microenterprises run by entrepreneurs, and formal businesses to assess the effects of COVID-19 and better guide national policy in the U.K. This note reports the results and policy proposals from the first of three data collection rounds of the COVID-19 survey of UK households, combined with preliminary results for refugee households and microenterprises.

According to theory, lockdown measures may lead to both a drop and a rise in crime, particularly violent crime, with some offenses more likely to increase and others considerably more likely to decrease. The empirical findings presented in this research brief support this variable impact: different patterns emerged across countries and crime types in the short term after pandemic-related measures were introduced. The processes that lowered or raised crime, or that offset one another so that no change was noticeable, were most likely driven by the range of policies adopted and by pre-existing conditions. This section examines two sorts of criminal activity:

Data from 21 countries show a wide range of patterns in the number of homicide victims recorded when lockdown measures were implemented. While restrictions were in place, the decline in homicides in April 2020 in several nations was more than 25% greater than the average recorded for the same months over the 2015–2019 period. Once the measures were lifted, however, the pre-lockdown pattern reappeared. Developments were homogeneous in Europe and in other regions where data were available, but patterns in Latin America were highly diverse. As a result, it is difficult to draw broad generalizations about the pandemic’s influence on the level of lethal violence. Several factors could explain this heterogeneity: the different levels of restrictive measures imposed by governments, pre-existing economic conditions, and the overall prevalence of particular types of homicide, which in North America is often linked to criminal organizations and gangs, whereas in Europe it is more strongly connected to interpersonal and family violence.

In today’s corporate environment, where large amounts of data are acquired through data analysis, we need ways to visualize that data so that we can understand it. Visualization tools help by presenting data in graphical form, such as charts or diagrams. This makes trends, correlations, and outliers in large data sets easier to spot, because the data is presented in a form that is more natural for the human mind to comprehend.

By communicating information as effectively as possible, data visualization can help in any business or job. Because it takes raw data, analyzes it, and presents it so that conclusions can be drawn, data visualization is an important part of the business analytics process. Computer scientists working in advanced statistics are developing learning algorithms to better arrange vital data into representations that are simpler to consume and assess. Visualization software, in particular, uses visual data to communicate information in a general, quick, and effective way (Goddard et al., 2018). This technique may help firms figure out which areas need improvement, which variables influence customer satisfaction and dissatisfaction, and what to do about specific products. Stakeholders, business owners, and decision-makers may use graphical data to better estimate sales, earnings, and future growth.

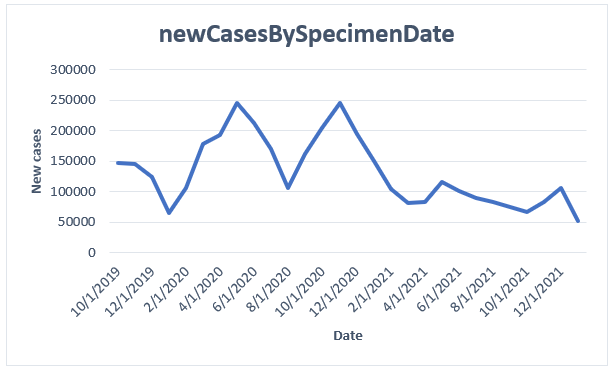

Trend of new COVID-19 cases

plot(datasetuk$date, datasetuk$newCasesBySpecimenDate, type = "l", main = "New cases trend", xlab = "Date", ylab = "New cases", col = "chocolate")

Figure 1: New cases trend

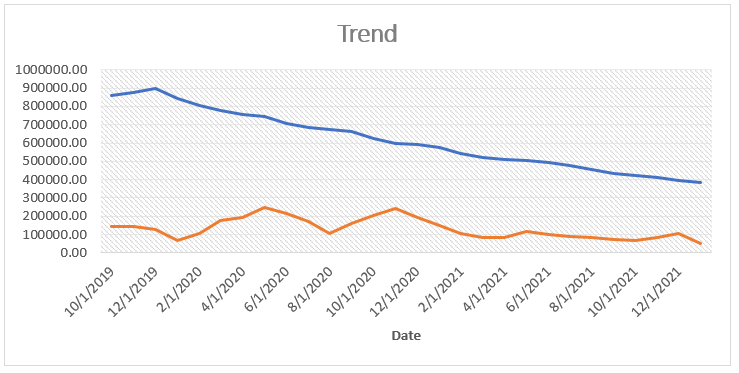

Trend of GDP during the COVID-19 pandemic

plot(datasetuk$date, datasetuk$GDP, type = "l", main = "GDP trend", xlab = "Date", ylab = "GDP", col = "chocolate")

Figure 2: GDP trend

2.0 Theoretical thinking

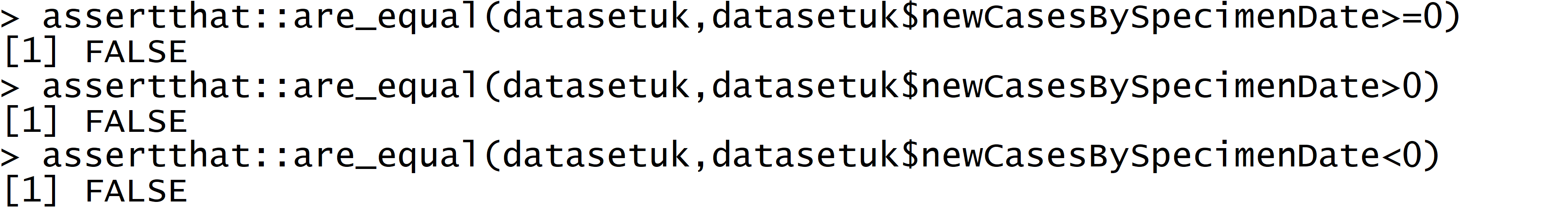

Machine learning relies heavily on the quality of the data. Bad data introduces errors that harm both the interpretation of the data and model performance. These mistakes are often difficult to spot and surface late in the analytical process. Worse, they may go unnoticed altogether and lead to erroneous conclusions. Validating the data is the answer to this problem. Assertions are debugging tools that explicitly examine data by testing a condition. This section shows how to use assertions in R to verify data, using the assertr package in particular, which provides several functions for checking assumptions about the data early in an analysis.

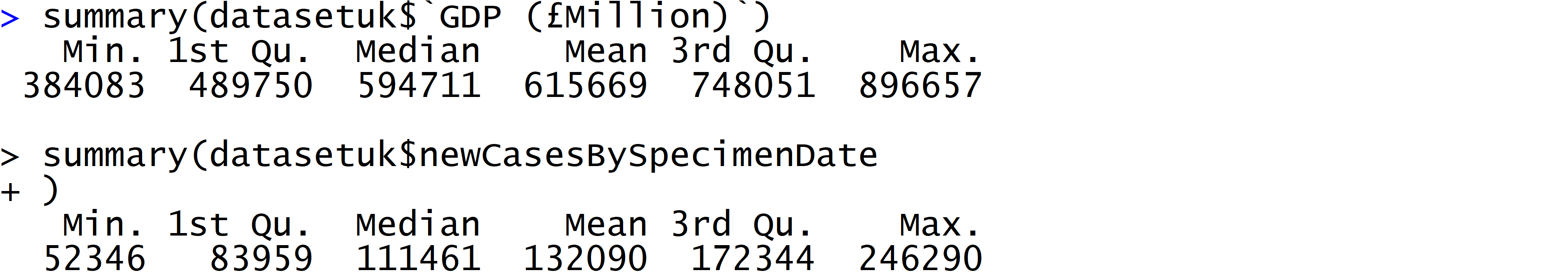

A function such as summary() makes it simple to produce descriptive statistics for the data sample. Descriptive statistics and grouped summaries, however, will not flag an impossible value on their own, for example a negative GDP figure. These kinds of mistakes can be caught with the assertr verify() function. verify() takes two arguments: a data frame (data) and a logical expression (GDP >= 0). The expression is evaluated against the data. If it is false for any row, verify() raises an error and halts the pipeline. The following was performed on the dataset from the U.K.
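The behavior of verify() described above can be sketched as follows. This is a minimal illustration and not the analysis that was run: the data frame is a hypothetical stand-in for the U.K. dataset, and the value -1 is planted deliberately so that the check has something to catch.

```r
library(assertr)

# Hypothetical stand-in for the U.K. dataset; the values are illustrative only.
datasetuk <- data.frame(
  date = as.Date("2021-01-01") + 0:4,
  newCasesBySpecimenDate = c(52346, 61000, 75500, 98000, 120400),
  GDP = c(384083, 402500, 415000, -1, 450200)  # -1 is a deliberately bad value
)

# When the condition holds for every row, verify() returns the data unchanged.
clean <- verify(datasetuk[datasetuk$GDP >= 0, ], GDP >= 0)

# With the bad row left in, verify() raises an error and the pipeline stops.
# tryCatch() is used here only so that the script can continue past the failure.
result <- tryCatch(
  verify(datasetuk, GDP >= 0),
  error = function(e) "verification failed"
)
print(result)
```

Because verify() returns its input when the check passes, it can sit in the middle of a pipeline without changing what later steps receive.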

Descriptive statistics

The statistics above indicate that GDP has a mean of M = 615669, with a minimum of min = 384083 and a maximum of max = 896657. For new cases, the mean is M = 132090, with a minimum of min = 52346 and a maximum of max = 246290.
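As a rough sketch of how such a summary can be computed in base R, the block below calculates the mean, minimum, and maximum of each column. The four rows are hypothetical values chosen only so that the summary matches the figures reported above; they are not the real observations.

```r
# Hypothetical rows chosen to reproduce the reported summary; not the real data.
datasetuk <- data.frame(
  newCasesBySpecimenDate = c(52346, 98000, 131724, 246290),
  GDP = c(384083, 560000, 621936, 896657)
)

# Mean, minimum, and maximum for each numeric column, returned as a matrix
# with one row per statistic and one column per variable.
stats <- sapply(datasetuk, function(x) c(mean = mean(x), min = min(x), max = max(x)))
print(stats)
```

Calling summary(datasetuk) would give the same three figures together with the quartiles.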

Validating data elements one at a time with individual assertions can be time-consuming and wasteful. A more efficient method is to use the assert family of functions and chain the checks together into a single verification pipeline. Let’s say we want to make sure our data meets the following criteria.

- The data contains all 10 variables listed in the guide’s first part. The verify(has_all_names()) statement does this.

- There are more than 120 observations in the dataset, which accounts for 20% of the total data. The verify(nrow(.) > 120) command does this.

- The GDP variable accepts only positive values. The verify(GDP > 0) command does this.

- The GDP variable should be within three standard deviations of its mean. The insist(within_n_sds(3), GDP) command does this.

- The approval_status target variable only takes the binary values zero and one. The assert(in_set(0, 1), approval_status) command does this.

- Each data row has a maximum of six missing entries. The assert_rows(num_row_NAs, within_bounds(0, 6), everything()) command does this.

- The Date, newCasesBySpecimenDate, cumCases, and GDP (£Million) variables combine to make each row unique. The assert_rows(col_concat, is_uniq, ...) command does this.
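The criteria above could be chained into one pipeline along the following lines. This is a sketch under stated assumptions: the data frame is synthetic (150 rows with made-up values), the column names follow those mentioned in the text, and the approval_status check is left out because the synthetic data has no such column. The magrittr pipe is used so that `.` inside nrow(.) refers to the piped data.

```r
library(assertr)
library(magrittr)  # provides %>% so that `.` refers to the piped data

# Synthetic stand-in for the U.K. dataset (150 rows, values illustrative only).
n <- 150
new_cases <- round(seq(52346, 246290, length.out = n))
datasetuk <- data.frame(
  date = as.Date("2020-03-01") + seq_len(n) - 1,
  newCasesBySpecimenDate = new_cases,
  cumCases = cumsum(new_cases),
  GDP = seq(384083, 896657, length.out = n)
)

# Each step returns the data unchanged if its check passes and stops otherwise.
checked <- datasetuk %>%
  verify(has_all_names("date", "newCasesBySpecimenDate", "cumCases", "GDP")) %>%
  verify(nrow(.) > 120) %>%                      # enough observations
  verify(GDP > 0) %>%                            # GDP must be positive
  insist(within_n_sds(3), GDP) %>%               # no GDP value beyond 3 SDs of the mean
  assert_rows(num_row_NAs, within_bounds(0, 6), dplyr::everything()) %>%
  assert_rows(col_concat, is_uniq, date, newCasesBySpecimenDate, cumCases, GDP)
```

Ordering the cheap structural checks (column names, row count) first means a malformed file fails early, before the value-level checks run.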

Several well-known experts have shaped the evolution of data visualization over time. In statistics, William Playfair is credited with inventing graphical methods. He devised the line chart, the bar graph of economic statistics, the pie graph, and the circle graph. Joseph Priestley is credited with creating the earliest timeline charts, which displayed a person’s lifespan as a series of bars. Timelines have been around for more than 100 years; Facebook did not create them.

Charles Minard’s illustration of Napoleon’s march is a well-known example of an early data visualization. In addition to time scales, an important part of the visualization is the information on the role that temperature played during Napoleon’s invasion of Russia. Six types of data are shown in two dimensions in the image: the number of Napoleon’s soldiers, distance, temperature, longitude and latitude, direction of movement, and location on particular dates.

Florence Nightingale was one of the first people to be heavily involved in data visualization. She devised coxcomb charts to demonstrate the impact of disease on troop deaths (1858). The first person to use maps in infographics or spatial analytics was John Snow (not the Game of Thrones character). He traced London’s 1854 cholera outbreak to a single public water pump using a chart that mapped cholera deaths against public water pump sites.

3.0 Accessibility

The colors used in a visualized figure may sometimes harm the accessibility of a visualization. Figure 4 illustrates a cluster analysis plot for the study factors: rate of death, fertility rate, youth dependency, population growth, and female life expectancy. The points have been divided into geographical groups using a wide variety of colors from the main palette. The color scheme should be chosen carefully so that the data is as visible as possible, particularly for color-blind readers, for whom several of the colors in the figure are indistinguishable, making the depiction worthless. When choosing colors, make sure they can be told apart by all groups of readers. The chart’s title and axis labels should state the chart’s subject and variables, and rotating the x-axis text keeps the labels from overlapping, resulting in a complete and readable chart. To reduce visual clutter, I removed the minor grid lines but kept gray dashed lines as the major grid lines, from which readers can read off the position of a point, as indicated in Figure 3 below.
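The styling choices described above might be expressed in ggplot2 roughly as follows. This is an illustrative sketch: the data frame, its column names, and the four groups are hypothetical, and the Okabe-Ito colors are one commonly used color-blind-friendly palette rather than the palette used for the figures in this report.

```r
library(ggplot2)

# Hypothetical data: four groups that must remain distinguishable.
df <- data.frame(
  new_cases = c(52, 80, 110, 140, 170, 200, 230, 246),
  gdp = c(384, 450, 520, 600, 660, 730, 820, 896),
  group = rep(c("A", "B", "C", "D"), each = 2)
)

# Four colors from the Okabe-Ito palette, which was designed to stay
# distinct under the common forms of color-vision deficiency.
okabe_ito <- c("#E69F00", "#56B4E9", "#009E73", "#D55E00")

p <- ggplot(df, aes(x = new_cases, y = gdp, colour = group, shape = group)) +
  geom_point(size = 3) +                       # shape repeats the color encoding
  scale_colour_manual(values = okabe_ito) +
  labs(title = "GDP against new cases", x = "New cases", y = "GDP") +
  theme_minimal() +
  theme(
    panel.grid.minor = element_blank(),        # drop minor grid lines
    panel.grid.major = element_line(colour = "grey70", linetype = "dashed"),
    axis.text.x = element_text(angle = 45, hjust = 1)  # rotate x labels
  )
```

Mapping group to both color and shape means the groups can still be separated when the colors cannot be.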

library(ggplot2)

ggplot(datasetuk, aes(x = newCasesBySpecimenDate, y = GDP)) + geom_point(size = 2, shape = 23)

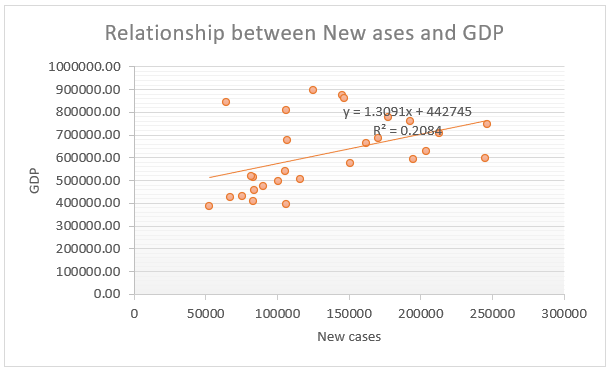

Figure 3: Scatterplot

Figure 3 above shows the relationship between new COVID-19 cases and GDP. The results indicate a positive relationship between the two variables (new cases and GDP), with a slope coefficient (beta) of 1.3091. The fitted equation has an R-squared of 0.2084, meaning that new cases account for 20.84% of the variation in GDP in the United Kingdom.
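For readers who want to see where a slope and an R-squared of this kind come from, the sketch below fits a simple linear regression in base R. The six observations are hypothetical, so the resulting numbers will not match the 1.3091 and 0.2084 reported above.

```r
# Hypothetical observations; not the U.K. data.
datasetuk <- data.frame(
  newCasesBySpecimenDate = c(52346, 80000, 110000, 150000, 200000, 246290),
  GDP = c(400000, 520000, 480000, 700000, 610000, 850000)
)

# Simple linear regression of GDP on new cases.
fit <- lm(GDP ~ newCasesBySpecimenDate, data = datasetuk)

beta <- unname(coef(fit)["newCasesBySpecimenDate"])  # slope of the fitted line
r_squared <- summary(fit)$r.squared                  # share of GDP variance explained

# With a single predictor, R-squared equals the squared Pearson correlation.
r <- cor(datasetuk$newCasesBySpecimenDate, datasetuk$GDP)
```

R-squared describes how much of the variation in GDP the fitted line captures; it does not by itself show that one variable causes the other.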

4.0 Visualization choice

There is usually more than one way to depict the data appropriately. In that situation, consider what you are attempting to accomplish, the message you are sending, and who you are trying to reach. Remove everything that is not relevant to the plot. The beauty of visualization techniques is that they let the designer do the hard work of enhancing and communicating the narrative. Create a design that is easy to understand. When you have finished your visualization, take a step back and analyze what basic features may be added, altered, or eliminated to make the data simpler for the reader to grasp. You might want to add a linear trend to a line graph, or you might notice that your pie chart has too many slices. These small modifications have a significant impact.
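One of the small modifications mentioned above, adding a linear trend to a line graph, could look like this in ggplot2. The weekly series is hypothetical and the column names are illustrative.

```r
library(ggplot2)

# Hypothetical weekly series of new cases.
df <- data.frame(
  week = 1:10,
  new_cases = c(52, 60, 58, 75, 90, 88, 110, 130, 125, 150)
)

p <- ggplot(df, aes(x = week, y = new_cases)) +
  geom_line() +
  # Least-squares straight line drawn over the series, without a confidence band.
  geom_smooth(method = "lm", formula = y ~ x, se = FALSE, linetype = "dashed") +
  labs(x = "Week", y = "New cases")
```

The dashed line gives the reader the overall direction at a glance while the solid line keeps the week-to-week detail.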

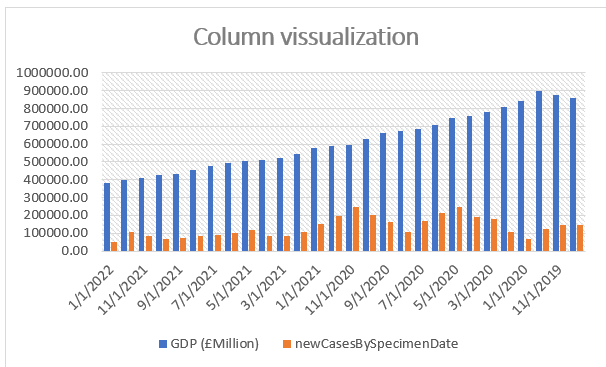

Figure 5: Column visualization

A single bar chart showing how gross domestic product (GDP) per capita has changed from 2019 to 2022 across income levels would be difficult to read and inaccessible, since users would not be able to see the changes within each income level directly and quickly. Faceting divides the original data into subsets and quickly produces a set of closely comparable charts (Wickham, 2020), so I preferred to facet the GDP data collected in the United Kingdom by income level, alongside the recorded new cases of COVID-19. Users can quickly see from the first panel that GDP in the high-income group has been expanding at a rapid pace, but that there is a sharp drop between 2019 and 2022.
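A faceted column chart of the kind described above might be built as follows. This is a sketch only: the income levels, years, and GDP per capita values are hypothetical and are not the figures behind Figure 5.

```r
library(ggplot2)

# Hypothetical GDP per capita by year and income level; values are illustrative.
# expand.grid() varies the first argument fastest, so rows run 2019..2022
# within each income level.
gdp_by_income <- expand.grid(
  year = 2019:2022,
  income_level = c("Low", "Middle", "High"),
  stringsAsFactors = FALSE
)
gdp_by_income$gdp_per_capita <- c(
  1.0, 0.9, 1.0, 1.1,      # Low
  5.0, 4.6, 4.9, 5.2,      # Middle
  40.0, 36.5, 38.8, 41.0   # High
)

p <- ggplot(gdp_by_income, aes(x = factor(year), y = gdp_per_capita)) +
  geom_col() +
  # One panel per income level; free y scales keep small values readable.
  facet_wrap(~ income_level, scales = "free_y") +
  labs(x = "Year", y = "GDP per capita (illustrative units)")
```

Free y scales make the change within each panel easy to see, at the cost of making bar heights incomparable across panels; fixed scales are the better choice when the comparison between income levels matters more.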

5.0 Implications and Improvements

When doing research involving human subjects, consent is a legal obligation. The Tri-Council Policy Statement: Ethical Conduct for Research Involving Humans requires that an individual’s consent be given voluntarily and that it may be withdrawn at any moment, along with their information or biological material, if they choose to end their involvement. Instead of relying on age, guidelines such as the Tri-Council Policy Statement recommend that consent be determined by an individual’s decision-making capacity, that is, their ability to understand the relevant material presented and to appreciate the possible consequences of any choice they make based on this information (König & Winkler, 2020). Autonomy, or the ability to perceive and comprehend the pertinent facts about a study well enough to make an informed, voluntary choice, is a prerequisite for consent. When children are involved in research, permission may be obtained in one of two ways: the researchers may obtain consent from the minor if it has been determined that the minor has the capacity to make such an independent choice.

In today’s environment, the software business strives to provide consumers facing real-world problems with relevant, usable, and efficient solutions. To enhance an existing product or build a new one, application systems and software-integrated hardware systems often undergo development changes and gain numerous new functionalities. To demonstrate these, businesses use a variety of data visualization techniques to help stakeholders, users, and other specialists make informed choices. It is therefore essential to determine which visualization technique produces the best and most accurate results. Through graphical representation, data visualization has evolved into a technique for transforming raw data into meaningful information and knowledge (König & Winkler, 2020). Since a company’s main aim is frequently to meet customer expectations with a high degree of satisfaction, it is not surprising that an efficient visualization tool, framework, or approach helps convey a precise grasp of data structures or patterns. This mostly affects a user’s decision-making and the ability to persuade stakeholders (König & Winkler, 2020). A small quantity of data in spoken or written form, for example, can be useful as it is. When a vast volume of data is being created, however, interpreting it is challenging, and it becomes even more difficult at a wider scale.

In general, a graphical representation must enable people to comprehend data in difficult settings just as well as in ideal conditions. Visualization is a powerful tool that illustrates complicated and challenging situations in a straightforward manner (Hastie et al., 2020). Data is information, in either structured or unstructured form, that is used to make choices or take actions depending on the needs of a specific situation. Having an abundance of data but no appropriate means of presenting it is wasteful and may contribute to a product’s failure.

Many sectors demand visually engaging and easily comprehensible charts, graphs, or other graphical representations to give meaning to their data. Over the last decade, many industries have produced numerous products that surround us in our everyday activities, and every system, piece of software, and machine is assessed on verifiable findings. Many real-time applications rely on reliability and understandability to establish the system’s correctness and consistency. Assessing dependability helps ensure that such applications are efficient, function well, and are accurate. Furthermore, when results are presented, choosing an appropriate visualization plays a critical part in persuading corporate stakeholders and demonstrating the system’s desired outcome, which is improved recognition (Moraga, 2019). In-depth research into which data visualization techniques are used, and what results they produce in terms of system relevance, should be conducted.

Improvement

To improve the visualizations used in this research, it should be recognized that there is more than one way to depict the data appropriately. The choice depends on what the study is attempting to accomplish, the message being sent, and the audience being reached. Everything that is not relevant to the plot will be removed. Proper use of visualization techniques lets the design do the hard work of enhancing and communicating the narrative, so the design should be easy to understand. Once a visualization is finished, the most important step is to stand back and analyze what basic features may be added, altered, or eliminated to make the data simpler for the reader to grasp (Kuo et al., 2020). For example, a linear trend could be added to a line graph, or a pie chart with too many slices could be simplified. These small modifications have a significant impact.

References

Gabry, J., Simpson, D., Vehtari, A., Betancourt, M., & Gelman, A. (2019). Visualization in Bayesian workflow. Journal of the Royal Statistical Society: Series A (Statistics in Society), 182(2), 389-402.

Goddard, T. D., Huang, C. C., Meng, E. C., Pettersen, E. F., Couch, G. S., Morris, J. H., & Ferrin, T. E. (2018). UCSF ChimeraX: Meeting modern challenges in visualization and analysis. Protein Science, 27(1), 14-25.

Hastie, C. E., Mackay, D. F., Ho, F., Celis-Morales, C. A., Katikireddi, S. V., Niedzwiedz, C. L., … & Pell, J. P. (2020). Vitamin D concentrations and COVID-19 infection in the UK Biobank. Diabetes & Metabolic Syndrome: Clinical Research & Reviews, 14(4), 561-565.

König, M., & Winkler, A. (2020). Monitoring in real-time: Cross-country evidence on the COVID-19 impact on GDP growth in the first half of 2020. Covid Economics, 57, 132-153.

Kuo, C. L., Pilling, L. C., Atkins, J. L., Masoli, J. A., Delgado, J., Kuchel, G. A., & Melzer, D. (2020). ApoE e4e4 genotype and mortality with COVID-19 in UK Biobank. The Journals of Gerontology: Series A, 75(9), 1801-1803.

Moraga, P. (2019). Geospatial health data: Modeling and visualization with R-INLA and shiny. Chapman and Hall/CRC.
