ABSTRACT

Assessment literacy should form a core part of the undergraduate biomedical science curriculum if students are to negotiate a range of evaluation methodologies adequately. This study aimed to further understand undergraduate assessment literacy within the biomedical science degree and considered the following: students’ comfort during assessments, their confidence in applying their knowledge to real-life scenarios, their understanding of the criteria set for evaluation, the impact of feedback, the relevance of assessments, the development of skills, peer assessment, and the use of resources for critical assessment and for building confidence in applying knowledge for academic success. Forty-one undergraduates from different schools agreed to participate by completing a cross-sectional survey. The results were broadly positive, as shown by favourable self-assessments of understanding assessment criteria and of confidence in applying what has been learned. However, responses concerning the review of graded assignments and the perceived importance of assessments signalled a need for development in the use of feedback and in the alignment of assessment with learning goals. The findings align with other sources on the importance of evaluation, learning objectives, feedback, and peer assessment in enhancing students’ assessment literacy. This knowledge provides a basis for teachers and curriculum developers to introduce better assessment approaches and to strengthen the learning success of their students. The findings also contribute to the ongoing discourse on assessment literacy in biomedical science education.

CHAPTER ONE

INTRODUCTION

1.1 Background of Assessment Literacy in Biomedical Sciences

In biomedical science, assessment literacy refers to a group of competencies that are important for success in the field, including the ability to critically evaluate scientific evidence so that students can report results and make sound judgments on research outcomes. Understanding the challenges and requirements that undergraduate biomedical science students face in developing assessment literacy has implications for the specific characteristics that biomedical science degrees require of their students. In addition, basic demographic factors such as gender, age, and ethnicity may affect students’ experiences of assessment and the outcomes they receive from it.

1.2 Definition and Significance of Assessment Literacy in Biomedical Science

Assessment literacy is a set of competencies required of educators and students in biomedical education. These include knowledge of the assessment strategies and methods used to measure successful learning and practice in biomedical disciplines. Assessment literacy is the ability to understand and work proficiently with the various modes of assessment: standardized tests, laboratory-based assessments, and research projects. It is not just proficiency in assessment techniques; it is also the capability to reflect, to self-evaluate critically, and to apply assessment feedback to improve learning outcomes.

Assessment literacy is, therefore, a component of education that should be nurtured to produce proficient health practitioners (Academy of Science of South Africa, 2018). For the biomedical scientist or healthcare practitioner, assessment literacy underpins the evidence-based appraisal of diagnostic findings, research information, and treatment results. In the fast-moving and complex healthcare industry, knowledge-based decisions and high-quality patient care require the critical evaluation of assessment results. Assessment literacy also supports lifelong learning and professional development, helping individuals adapt to new technologies, research methods, and healthcare perspectives.

1.3 Gap in Current Literature Regarding Factors Driving Assessment Literacy

Although assessment literacy is one of the most important aspects of biomedical science education, more research is needed on what exactly motivates students to become good, if not excellent, test-takers. As Regmi and Jones (2020) pointed out, whereas a sizeable literature has explored assessment literacy in general educational settings, only a few studies have sought to highlight the determinants specific to the domain of biological science. Findings on these determinants can inform the development of targeted interventions and pedagogical approaches for enhancing the assessment literacy of such students.

1.4 Purpose of the Study and Its Relevance to the Field of Biomedical Science

This study addresses the gap in the literature by investigating what underpins assessment literacy in biomedical science students. The analysis aims to identify the main contributors to, and factors affecting, assessment literacy. The findings will provide information with which educational practices can be fine-tuned to enhance students’ performance in this crucial area of learning. The research thereby aims to further the improvement of biomedical science education by identifying and addressing the challenges teachers face in this field (Mate & Weidenhofer, 2022).

The importance of this study to biomedical science lies in the wide changes it may prompt in teaching methods and course content. Teachers, curriculum developers, and policymakers need extensive knowledge of the factors contributing to assessment literacy in order to provide relevant interventions and instructional practices that equip students to perform effectively in this area and in other areas to which the competence may be transferred. With assessment literacy, students can confidently and effectively develop their competence in biomedicine and healthcare (Socha-Dietrich, 2021).

1.5 Research Questions

In pursuit of understanding the dynamics of assessment literacy in biomedical science education, this study addresses the following research questions:

- How can assessment literacy be optimally integrated into life science curricula to facilitate students’ future professional success?

- What factors motivate students in biomedical science to develop assessment literacy skills?

1.6 Hypothesis

Following the literature reviewed and the arguments made about the significance of assessment literacy for educational practice within biomedical science, the following hypotheses are proposed:

- High levels of assessment literacy in biomedical science students are postulated to result in better and more critical evaluation and reflection, enhancing self-regulated learning toward professional development.

- Effective implementation of assessment literacy in life science curricula would benefit students’ academic performance and contribute positively to their future careers.

1.7 Rationale for the Study

It is widely argued that the level of assessment literacy is a significant determinant of improved student achievement in biomedical science education. However, the body of research in this area remains minimal, with existing studies focusing on assessment literacy in general education and paying little attention to the context and issues confronting biomedical science students (Kormos & Smith, 2023). This study addresses this gap by examining in depth the factors that drive assessment literacy among biomedical science students and their implications for teaching and learning practices in this field. As educators and policymakers learn how to adjust assessments to these needs and challenges, assessment literacy can be increased through appropriate pedagogical interventions, improving student outcomes in this critical area of study.

1.8 Relevant Studies on Assessment Literacy in Higher Education

Assessment literacy in higher education has long engendered extensive research into its nature and implications. Zhu and Evans (2022) investigated the psychometric properties of an assessment literacy instrument for faculty and students. Joachim et al. (2020) examined assessment literacy in science education, concentrating on the assessment of students’ conceptual understanding and scientific language use. Similarly, Tanaka et al. (2020) conclude that assessment requires undergraduate nursing students to develop skills in searching for and evaluating online health resources.

1.9 Summary of Key Findings and Identification of Gaps or Limitations in Existing Research

Assessment literacy research has yielded numerous insights, yet gaps and limitations remain. One problem is the lack of attention to biomedical science, where assessment practices may differ from those in other disciplines (Biwer, 2020). Although several studies have examined students’ perceptions of assessment processes, few have examined the elements that drive assessment literacy in biomedical science programs. This gap in the literature leaves room for more research on assessment literacy in biomedical science education and its unique difficulties and potential.

CHAPTER TWO

2.0 METHODOLOGY

2.1 Experimental Design

The study used a cross-sectional survey to gather data from the specified population. This technique offers a snapshot of how students in biomedical science, human bioscience, and pharmacology currently perceive and use assessment. A cross-sectional design observes assessment literacy at a single point in time and requires no follow-up of participants, which would be necessary only for questions about change over time. The method is efficient for gaining insight into assessment literacy within the indicated academic subjects, as it allows information to be gathered quickly without the resources that longitudinal studies demand.

2.2 Sample Population

The sample comprised 41 undergraduate students pursuing courses in Biomedical Science, Pharmacology, and Human Bioscience at the universities covered by the study. This range of programmes makes the study inclusive, accommodating learners with various approaches to learning and skills in the biomedical field. The study also included students from all years of study (first, second, and third), giving a rounded representation of how assessment literacy develops across the undergraduate program.

Deliberately involving students from different years of the undergraduate journey helps to reveal comprehensive trends in assessment literacy and the barriers to it (Hensel, 2023). This approach can also reduce the bias that would arise from including only certain student subgroups, and it contributes to the generalizability of the results. Following Johnson et al. (2020), an inclusive sampling approach of this kind supports a fair representation of undergraduate students’ assessment literacy in biomedical science, pharmacology, and human bioscience programs.

2.3 Survey Development

The survey tool was designed carefully to assess students’ proficiency across different domains. It includes multiple-choice questions (MCQs) that test understanding of assessment techniques, technical terms, and industry standards. It also incorporates closed- and open-ended questions, including Likert-scale items, which assess less tangible aspects of assessment literacy such as self-confidence and perceived competence. This is supported by Webb-Williams (2018), who posits that self-assessment complements the assessment of objective knowledge because it helps learners recognise their own evaluative competencies.

2.4 Data Collection

Data collection was facilitated by the educational portal Aula, for which most undergraduate students have an account. Participants were briefed on the aims of the study before the survey was administered to maintain transparency and clear expectations (Campbell et al., 2018). To protect participants’ privacy, personally identifying information was excluded from the responses. To keep the data safe from unauthorised access, survey results were stored in an encrypted system accessible only to a few selected members of the research group.

2.5 Data Analysis

The data were represented by bar and pie charts showing participants’ survey responses. These covered levels of understanding of assessment criteria, confidence in applying skills and knowledge, and perceived influence on skill development. The sample’s gender distribution and ethnic makeup were also presented using pie charts. Statistical tests were then used to examine differences, for example differences between demographic groups in confidence or in the influence of feedback on skill development. These tests indicate whether the differences were significant and contribute to the robustness and validity of the analysis.

2.6 Health and Safety Concerns

Although the online nature of the survey reduces the physical health and safety risks to participants compared with traditional means of data collection, respondents still need assurance about their psychological safety. To allay concerns about privacy and confidentiality, participants were assured that none of their survey replies would be attributable to them. This allows participants to give their views freely and comfortably without fear of victimization (Blanchard & Farber, 2020). In addition, participants can choose their level of participation and are at liberty to skip items they feel uncomfortable with. This improves the survey experience because it gives participants agency over their level of participation.

Other measures, such as access to supportive services, were put in place to support participants’ psychological well-being during the survey. At the end of the survey, respondents received information about counseling services and hotlines through which they could easily access emotional support. Providing these services, and ensuring that any psychological problems participants experience are dealt with properly, reflects the research team’s care for participants and its commitment to collecting data in a supportive environment.

2.7 Limitations

The major risk of this kind of data is that it is self-reported, which exposes the study to response bias and social desirability bias, among others, and thereby decreases its reliability. Participants may give the responses they believe are popular or socially acceptable rather than accurate ones. They may also answer according to how a question is worded rather than according to their true beliefs and experiences, which is a form of response bias. Ross and Bibler Zaidi (2019) further assert that such biases tend to undermine the reliability and accuracy of results, and hence the validity of a study’s conclusions.

Second, the cross-sectional nature of the study limits the inferences that can be drawn from the relationships observed, because the data were collected at a single point in time. Consequently, neither the temporal sequence of events nor the causal links between variables can be established. Future researchers could extend this work through longitudinal designs and further interpretation.

CHAPTER THREE

3.0 RESULTS AND FINDINGS

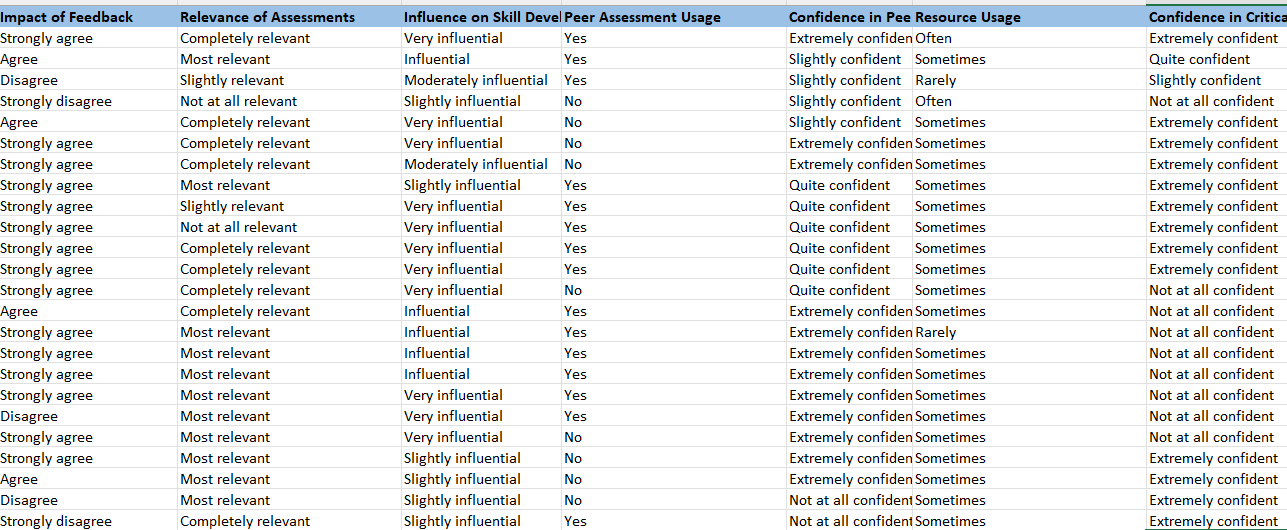

This section presents the results of the survey of undergraduates on the pharmacology, human bioscience, and biomedical science courses. The analysis examines the variables underlying assessment literacy among students in biomedical science. The major themes are students’ understanding of assessment criteria, use of feedback, confidence in applying what has been learned, and the relevance of assessment. Through this analysis, the research questions are addressed and the hypotheses presented in Chapter One are tested. Lastly, the chapter considers how the factors of assessment literacy bear on the teaching of biomedical science and the curriculum.

3.1 Demographic Effects

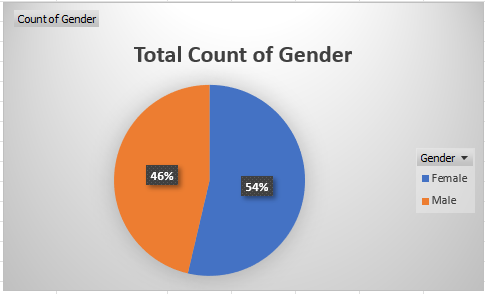

The pie chart shows the gender distribution among the students surveyed in the biomedical science cohort. Female students make up about 54% of respondents (22 people), while the remaining 46% (19 people) are male. The sample is therefore fairly balanced, with slightly more female than male students. Awareness of the gender composition of the student body should be accompanied by efforts to promote gender equity and inclusivity in biomedical science education, so that every student has an environment that supports their needs and perspectives equally.

3.2 Understanding Assessment Literacy

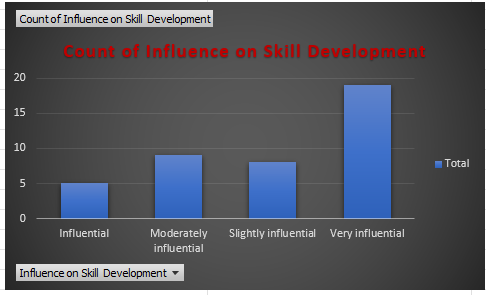

The bar plot shows how strongly respondents in the biomedical science group believe the factors assessed influence their skill development. Most students believe that these factors influence their skill development to some degree. Nineteen respondents (about 46% of the sample) rate them as very influential, which signifies a major influence of these factors on the development of skills relevant to the education and practice of biomedical science. Nine respondents (about 22%) see them as moderately influential, while 5 (about 12%) consider them significant. A further 8 respondents (about 20%) rate the influence as slight. The bar plot thus conveys the relative weight that biomedical science students assign to these factors in developing their skills, and these factors deserve careful attention in curricular and educational support efforts.
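The percentage shares implied by the reported counts can be checked with a few lines of arithmetic. The snippet below is a minimal sketch in Python; the category labels are taken from the wording of this section, and the variable names are illustrative rather than part of the study’s own analysis.

```python
# Counts reported in Section 3.2 for perceived influence on skill development.
counts = {
    "Very influential": 19,
    "Moderately influential": 9,
    "Significant": 5,
    "Slight": 8,
}

# The counts should add up to the sample size given in Section 2.2.
total = sum(counts.values())

# Percentage share of each category, rounded to one decimal place.
shares = {label: round(100 * n / total, 1) for label, n in counts.items()}

for label, share in shares.items():
    print(f"{label}: {counts[label]} of {total} ({share}%)")
```

Recomputing the shares from the raw counts in this way is a quick guard against rounding slips when percentages are transcribed by hand from a chart.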

3.3 Understanding of Assessment Criteria

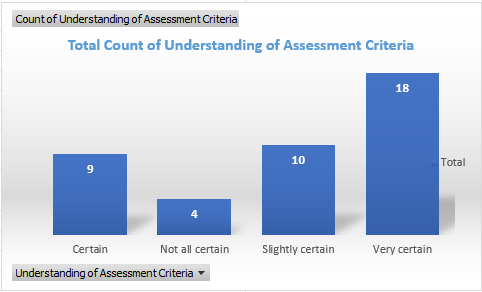

The bar plot shows the distribution of participants’ perceived understanding of the assessment criteria across four categories. The largest group of participants (18) reported high confidence, rating themselves “Very certain” of their understanding of the assessment criteria. A further 9 participants described themselves as certain, which reinforces the overall trend of confidence in understanding assessment criteria among those surveyed. On the other hand, fewer participants selected “Slightly certain” and “Not at all certain”, with counts of 10 and 4, respectively. Overall, the distribution points to a generally optimistic trend in perceived understanding of the assessment criteria among participants in biomedical science education, which is crucial for the development of assessment literacy among students.

3.4 T-Test on Confidence in Applying Knowledge and Understanding

The output of the paired t-test shows that two variables differ: confidence in critical assessment and perceived influence on skill development. The t statistic of -4.068 and the two-tailed p-value of 0.00021629 indicate that the difference is unlikely to have arisen by chance. This finding is pertinent to the study’s focus on assessment literacy in biomedical science education, as it suggests that students who report higher levels of confidence in their abilities for critical assessment may perceive a lesser influence on their skill development.
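For readers unfamiliar with the calculation, the following is a minimal sketch of a paired t-test using only the Python standard library. The ratings in the example are hypothetical and are not the survey data, and the helper names `paired_t`, `t_pdf`, and `two_tailed_p` are illustrative. The last line recomputes a p-value from the reported t statistic under the assumption that all 41 respondents answered both items, giving 40 degrees of freedom; the software actually used for the analysis is not stated in this study.

```python
import math
from statistics import mean, stdev


def paired_t(first, second):
    """Return (t statistic, degrees of freedom) for a paired t-test."""
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    # t is the mean paired difference divided by its standard error.
    t_stat = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t_stat, n - 1


def t_pdf(x, df):
    """Probability density of Student's t distribution."""
    coeff = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coeff * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t_stat, df, steps=2000):
    """Two-tailed p-value, integrating the density with Simpson's rule."""
    upper = abs(t_stat)
    if upper == 0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = total * h / 3  # P(0 <= T <= |t|)
    return 1 - 2 * central_area


# Hypothetical paired Likert ratings (1-4), for illustration only.
confidence = [3, 4, 2, 3, 4, 3, 2, 4]
influence = [4, 4, 3, 4, 4, 4, 3, 4]
t_stat, df = paired_t(confidence, influence)
print(f"illustrative data: t = {t_stat:.3f}, df = {df}, p = {two_tailed_p(t_stat, df):.4f}")

# Reported statistic, assuming df = 41 - 1 = 40 (an assumption, see above).
print(f"reported t = -4.068, df = 40: p = {two_tailed_p(-4.068, 40):.6f}")
```

The sign of the t statistic depends only on which variable is subtracted from which, so a negative value means that the mean of the first variable is lower than the mean of the second.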

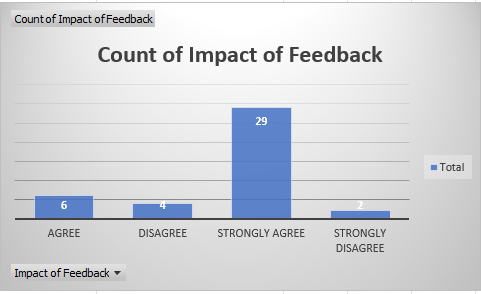

3.5 Impact of Feedback on Skill Development

The bar plot below presents participants’ impressions of the influence of feedback across four levels. Of the 41 respondents, 29 strongly agreed that feedback influenced their academic progress. A further 6 participants agreed, which supports the overall tendency towards a positive impact of feedback, although less emphatically than those who strongly agreed. In contrast, only four respondents disagreed with the statement about the influence of feedback, and two strongly disagreed. Although such dissenting opinions exist within the surveyed cohort, they remain a minority compared with the students who agreed or strongly agreed. The distribution in this bar plot indicates that feedback has a strong perceived impact on how students experience their learning and on the academic outcomes they achieve within biomedical science education.

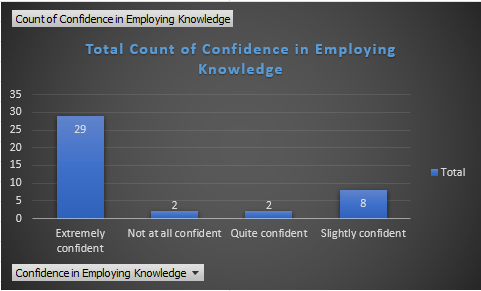

3.6 Confidence in Employing Knowledge

The graph below shows participants’ confidence in applying their knowledge in biomedical science. Most respondents (29) were very confident in applying their knowledge, suggesting that students feel able to apply coursework information well. Only two respondents were unsure, and only two reported being fairly confident, so very few responses fell in the lower confidence categories. Lower confidence levels are therefore rare in the cohort, and most students report very high confidence. Educators need to understand what influences the degree to which students can apply information in order to build self-efficacy and enhance the learning environment.

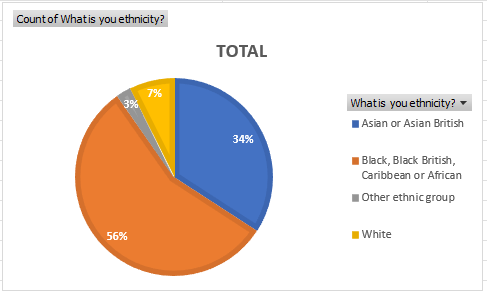

3.7 Ethnicity

The pie chart shows the ethnic composition of the biomedical science sample. Of the respondents in the returned sample, 23 identified as Black, Black British, Caribbean, or African. This was the largest group, representing 56% of the total, which indicates sizeable representation within the cohort. The data also indicate that three participants were from White ethnic backgrounds and one was from another ethnic group. Although these two groups make up small portions of the sample, their inclusion shows that there is diversity in the biomedical science field that should be recognized and honoured.

CHAPTER FOUR

4.0 DISCUSSION AND CONCLUSION

This study set out to present the assessment literacy levels of students in biomedical science and how demographics, assessment methods, and feedback influenced them. Students reported different levels of understanding of assessment criteria, and some were unsure of them. Similarly, confidence in applying knowledge during assessments varied between individuals. Assessment literacy appeared to vary with demographic variables such as gender, age, and ethnicity, with patterns differing between groups. Common forms of assessment emerged as an obstacle to completion, and assessment difficulty was a challenge for students who had trouble starting an assignment and understanding what was being asked. Feedback was seen to affect student learning, but students varied in how useful they perceived it to be. These results underscore the need for tailored interventions to enhance experiences and outcomes of assessments within biomedical science education.

4.1 Comparison with Existing Literature

The results of the current study are compared here with the existing literature on assessment literacy in the biomedical sciences and allied fields, and some similarities and differences are noted. Like earlier studies, this study emphasizes the importance of assessment literacy to successful learning and academic performance for students of the biomedical sciences (Morales‐Doyle, 2017; Öhrstedt & Lindfors, 2019). Although earlier studies indicated that students approached assessment work with some proficiency in their knowledge, this study paints a more nuanced picture in which students show varying degrees of confidence in using knowledge for assessments (Chen et al., 2018). The results on differences among gender, age, and ethnic groups also contribute to the wider discussion of how demographic factors affect assessment experiences and outcomes (Howard, 2019).

Recent trends in the literature increasingly favour a more comprehensive view of assessment literacy, one that may include broader competencies such as critical thinking and metacognition (Miles et al., 2020; Cotter & Reisdorf, 2020). In the present study, students’ understanding of assessment criteria affected their confidence in applying knowledge, and these effects were clearer within some demographic subgroups. Recognising and addressing these complexities would allow educators to give learners the support they need to develop assessment literacy, leading to a demanding and rewarding learning experience and to academic achievement in biomedical science education.

4.2 Understanding of Assessment Criteria

Our analysis of the conceptions reported by participants echoes the findings above and previous research on the multifaceted nature of assessment literacy in educational settings (Levi & Inbar-Lourie, 2020; Coombe et al., 2020). Saliu-Abdulahi et al. (2017) confirmed that clear communication and explicit explanation of criteria lead to better understanding. Scaffolding students’ comprehension of assessment is important and fosters self-regulatory skills (Andrade & Heritage, 2017), which relates to the challenges identified in the present research.

The study’s findings resonate with literature that finds an interrelationship between assessment literacy and student learning outcomes (Scherer & Siddiq, 2019). More particularly, disagreement about assessment criteria appeared to weaken this relationship, in line with the scholarly literature on the importance of aligning assessment criteria for student engagement and achievement. Strategies for broadening students’ knowledge of assessment criteria can draw on evidenced best practices (Faltis & Valdés, 2016).

4.3 Confidence in Employing Knowledge

Participants have varying levels of confidence in applying their knowledge to assessment tasks. While some are highly confident, others seemingly lack confidence. Self-efficacy strongly influences learning behaviours and outcomes such as achievement (Burić & Kim, 2020). Generally, a high level of confidence is associated with increased motivation and persistence, and learners with these qualities demonstrate improved academic performance (Tinto, 2017). Conversely, low self-confidence may generate excessive worry, a lack of trust in one’s abilities, and a tendency to avoid completing assessment tasks, thus disrupting learning and performance. Teachers can respond by providing clear guidance and feedback to students while maintaining a supportive learning atmosphere and promoting students’ self-regulatory abilities (Ambreen et al., 2016).

4.4 Demographic Effects

Demographic factors such as gender, age, and ethnicity merit close consideration as influences on assessment literacy among biomedical science students. Differences in how students from different demographic categories perceive and engage with assessments can be charted to bring out divergences or trends. For instance, gender may be implicated in levels of confidence and attitudes towards assessment tasks, while age may reflect maturity and self-regulatory capability. Ethnicity might interact with other attributes, such as cultural background and socio-economic determinants, to shape how students approach assessments and view their academic experiences (Kotok, 2017; Van Zyl, 2016; Kahu & Nelson, 2018). Such findings call for biomedical science programs to be designed with a focus on equity and inclusion to close gaps in assessment literacy. Holistic assessment practices that meet students’ varied needs and backgrounds should therefore be adopted if the goal is an environment that promotes respect for diversity and equal opportunities.

4.5 Assessment Methods as a Barrier

The barriers identified by students are common problems of procrastination, time management, and difficulty deciphering what an assessment requires; these can have an especially severe impact on learning outcomes. For example, procrastination may lead to hasty submissions and low scores, while how clearly a student understands the assessment criteria will affect the quality of the response. Steps to overcome these difficulties include providing appropriate assessment guidelines and support mechanisms and arranging workshops on study skills and time management. Where these measures are in place, they facilitate learning in biomedical science, thus contributing to success and well-being.

4.6 Role of Feedback

It was essential to identify respondents’ views on the feedback provided and how that feedback may improve student learning. Timely and constructive feedback is important because it supports student development by giving students detailed information about their strengths and areas for improvement. However, this study underscores the need to improve the provision of feedback so that it has the greatest effect on student learning. Improvements could include clearer, more specific, and actionable feedback; the use of technology to help learners assimilate feedback individually; or a culture of incremental improvement in feedback between students and teachers. Addressing these features would allow the role of feedback in biomedical science education to be optimized to attain better learning outputs and student success.

4.7 Strengths and Limitations

Reflecting on the strengths and limitations of the research methodology and findings is necessary for a full understanding of the research outcomes. A strength of the study is its specific focus on biomedical science education. With a survey-based approach, the findings could be generalizable to other, equivalent educational settings, provided the sample is large enough. A limitation is the reliance on self-reported information, which is subject to response biases and social desirability effects (Burke & Carman, 2017). The study is also cross-sectional by design, which limits generalization and prevents causal relationships between variables from being established.

One should also consider possible biases or confounding factors, such as participants’ prior experience with assessments and the feedback they have received, which might affect the results. Further analyses might consider whether differences in educational background or subject preference influenced participants’ perceptions of assessment literacy and feedback effectiveness. Future work may use a mixed-methods approach to help triangulate the findings, or longitudinal studies that track changes in assessment literacy over time. In addition, participants could be stratified by gender, age group, and ethnicity, which would identify subgroup differences and allow interventions to be tailored.

Furthermore, this research paves the way for future studies investigating the impact of specific interventions to enhance assessment literacy and feedback practices in biomedical science education. Further work on the effects of digital technologies, online assessment platforms, and automated feedback systems on student engagement and learning outcomes could yield additional insights. Longitudinal studies would also be valuable for tracking changes in assessment literacy over the course of a student’s development (Gotch & McLean, 2019).

4.8 Conclusion

The study contributes to the domain of assessment literacy in biomedical science education by reporting factors associated with students’ understanding, confidence, and experience regarding assessment methods and feedback. The results also indicate the benefits of a culture of assessment literacy, in which students can enhance their academic performance and better understand biomedically relevant topics. By examining the perceptions and experiences of the participants, the study revealed the complex nature of assessment literacy and the need for focused remedies for the problems and obstacles students face.

Assessment literacy is central to helping students navigate assessment tasks effectively, apply their knowledge confidently, and interpret constructive feedback. It is therefore best developed in biomedical science curricula through strategies, directed by educators and policymakers, that deepen understanding of assessment criteria, increase confidence in applying knowledge, and strengthen feedback mechanisms. Knowledge of the demographic factors that influence assessment literacy further emphasizes its importance for inclusion, assurance, and equity in assessments across diverse student populations.

4.9 Recommendations

With this evidence base, educators, policymakers, and stakeholders should take steps to ensure that students improve their assessment literacy in biomedical science education. For instance, assessment criteria should be explained through explicit instruction: teachers should eliminate vague and ambiguous wording and provide clear guidelines on how the criteria are interpreted and how to use them. To give students a clearer understanding of assessment requirements, aspects of assessment literacy could be reflected in course materials and across workshops and tutorials.

Furthermore, an effective feedback environment is needed so that students can improve their learning. Timely and actionable feedback from educators would help students identify gaps in their knowledge and the areas most in need of development. Technology platforms make it possible to deliver feedback more widely and efficiently, and they make feedback more accessible and interactive for students.

Organizational frameworks and accreditation standards can be revised to incorporate assessment literacy competencies. These competencies would then be embedded in program outcomes and learning objectives as requirements for every module, giving them greater weight in qualifications. This would support the establishment of assessment literacy standards, more preparation opportunities for educators, and significant funding for the assessment literacy agenda.

References

Academy of Science of South Africa. (2018). Reconceptualizing health professions education in South Africa.

Ambreen, M., Haqdad, A., & Saleem, W. A. (2016). Fostering self-regulated learning through distance education: A case study of M. Phil secondary teacher education program of Allama Iqbal Open University. Turkish Online Journal of Distance Education, 17(3).

Andrade, H. L., & Heritage, M. (2017). Using formative assessment to enhance learning, achievement, and academic self-regulation. Routledge.

Biwer, F. (2020). Fostering Effective Learning Strategies in Higher Education – A Mixed-Methods Study. Journal of Applied Research in Memory and Cognition, 9(2), 186–203. https://doi.org/10.1016/j.jarmac.2020.03.004

Blanchard, M., & Farber, B. A. (2020). “It is never okay to talk about suicide”: patients’ reasons for concealing suicidal ideation in psychotherapy. Psychotherapy Research, 30(1), 124-136.

Burić, I., & Kim, L. E. (2020). Teacher self-efficacy, instructional quality, and student motivational beliefs: An analysis using multilevel structural equation modeling. Learning and Instruction, 66, 101302.

Burke, M. A., & Carman, K. G. (2017). You can be too thin (but not too tall): Social desirability bias in self-reports of weight and height. Economics & Human Biology, 27, 198-222.

Campbell, S., Greenwood, M., Prior, S., Shearer, T., Walkem, K., Young, S., … & Walker, K. (2020). Purposive sampling: complex or simple? Research case examples. Journal of Research in Nursing, 25(8), 652-661.

Chen, K. S., Monrouxe, L., Lu, Y. H., Jenq, C. C., Chang, Y. J., Chang, Y. C., & Chai, P. Y. C. (2018). Academic outcomes of flipped classroom learning: a meta‐analysis. Medical Education, 52(9), 910-924.

Coombe, C., Vafadar, H., & Mohebbi, H. (2020). Language assessment literacy: What do we need to learn, unlearn, and relearn? Language Testing in Asia, 10, 1-16.

Cotter, K., & Reisdorf, B. C. (2020). Algorithmic knowledge gaps: A new horizon of (digital) inequality. International Journal of Communication, 14, 21.

Faltis, C. J., & Valdés, G. (2016). Preparing teachers for teaching in and advocating for linguistically diverse classrooms: A vade mecum for teacher educators. Handbook of Research on Teaching, 549-592.

Gotch, C. M., & McLean, C. (2019). Teacher outcomes from a statewide initiative to build assessment literacy. Studies in Educational Evaluation, 62, 30-36.

Hensel, N. H. (Ed.). (2023). Course-based undergraduate research: Educational equity and high-impact practice. Taylor & Francis.

Howard, T. C. (2019). Why race and culture matter in schools: Closing the achievement gap in America’s classrooms. Teachers College Press.

https://bmcmedethics.biomedcentral.com/articles/10.1186/s12910-020-00538-7

Joachim, C., Hammann, M., Carstensen, C. H., & Bögeholz, S. (2020). Modeling and Measuring Pre-Service Teachers’ Assessment Literacy Regarding Experimentation Competences in Biology. Education Sciences, 10(5), 140. https://doi.org/10.3390/educsci10050140

Johnson, J. L., Adkins, D., & Chauvin, S. (2020). A review of the quality indicators of rigor in qualitative research. American Journal of Pharmaceutical Education, 84(1), 7120.

Kahu, E. R., & Nelson, K. (2018). Student engagement in the educational interface: Understanding the mechanisms of student success. Higher Education Research & Development, 37(1), 58-71.

Kormos, J., & Smith, A. M. (2023). Teaching languages to students with specific learning differences (Vol. 18). Channel View Publications.

Kotok, S. (2017). Unfulfilled potential: High-achieving minority students and the high school achievement gap in math. High School Journal, 100(3), 183-202.

Lehane, E., Leahy-Warren, P., O’Riordan, C., Savage, E., Drennan, J., O’Tuathaigh, C., … & Hegarty, J. (2018). Evidence-based practice education for healthcare professions: an expert view. BMJ Evidence-Based Medicine.

Levi, T., & Inbar-Lourie, O. (2020). Assessment literacy or language assessment literacy: Learning from the teachers. Language Assessment Quarterly, 17(2), 168-182.

Mate, K., & Weidenhofer, J. (2022). Considerations and strategies for effective online assessment with a focus on the biomedical sciences. FASEB BioAdvances, 4(1), 9.

Miles, R., Rabin, L., Krishnan, A., Grandoit, E., & Kloskowski, K. (2020). Mental health literacy in a diverse sample of undergraduate students: demographic, psychological, and academic correlates. BMC Public Health, 20(1), 1-13.

Morales‐Doyle, D. (2017). Justice‐centered science pedagogy: A catalyst for academic achievement and social transformation. Science Education, 101(6), 1034-1060.

Öhrstedt, M., & Lindfors, P. (2019). First-semester students’ capacity to predict academic achievement as related to approaches to learning. Journal of Further and Higher Education, 43(10), 1420-1432.

Regmi, K., & Jones, L. (2020). A systematic review of the factors–enablers and barriers–affecting e-learning in health sciences education. BMC Medical Education, 20(1), 1-18.

Ross, P. T., & Bibler Zaidi, N. L. (2019). Limited by our limitations. Perspectives on Medical Education, 8, 261-264.

Saliu-Abdulahi, D., Hellekjær, G. O., & Hertzberg, F. (2017). Teachers’ (formative) feedback practices in EFL writing classes in Norway. Journal of Response to Writing, 3(1), 3.

Scherer, R., & Siddiq, F. (2019). The relation between students’ socioeconomic status and ICT literacy: Findings from a meta-analysis. Computers & Education, 138, 13-32.

Socha-Dietrich, K. (2021). Empowering the health workforce to make the most of the digital revolution.

Tanaka, J., Kuroda, H., Igawa, N., Sakurai, T., & Ohnishi, M. (2020). Perceived eHealth Literacy and Learning Experiences Among Japanese Undergraduate Nursing Students. CIN: Computers, Informatics, Nursing, 1. https://doi.org/10.1097/cin.0000000000000611

Tinto, V. (2017). Reflections on student persistence. Student Success, 8(2), 1-8.

Van Zyl, A. (2016). The contours of inequality: The links between socio-economic status of students and other variables at the University of Johannesburg. Journal of Student Affairs in Africa, 4(1).

Webb-Williams, J. (2018). Science self-efficacy in the primary classroom: Using mixed methods to investigate sources of self-efficacy. Research in Science Education, 48(5), 939-961.

Zhu, X., & Evans, C. (2022). Enhancing the development and understanding of assessment literacy in higher education. European Journal of Higher Education, 1–21. https://doi.org/10.1080/21568235.2022.2118149
