Introduction

In the film The Matrix, the Matrix is described as being everywhere, all around us (Wachowski & Wachowski, 1999). That description aptly captures virtual reality (VR), a constantly expanding field that increasingly surrounds us today. Once confined to science fiction, VR has come a long way, from rudimentary stereoscopic 3D viewers to fully immersive multi-sensory experiences. Its origins are often traced to the flight simulators of the mid-twentieth century and to Sutherland’s (1965) vision of an “ultimate display”. Significant advances since then include haptic feedback devices, head-mounted displays, and augmented reality systems, which superimpose digital data on the real world (Burdea & Coiffet, 2003). Consumer devices such as the Oculus Rift and HTC Vive have brought VR to a wide range of uses, including gaming, virtual tours, medical rehabilitation, and military training. However, motion sickness, poor user experience, and expensive equipment remain serious obstacles. This essay contends that virtual reality, despite its drawbacks, represents a revolutionary development in human-computer interaction and will grow over the next ten years in both the consumer and enterprise realms. As more of our life and work shifts to virtual spaces, the COVID-19 pandemic has only accelerated this trend.

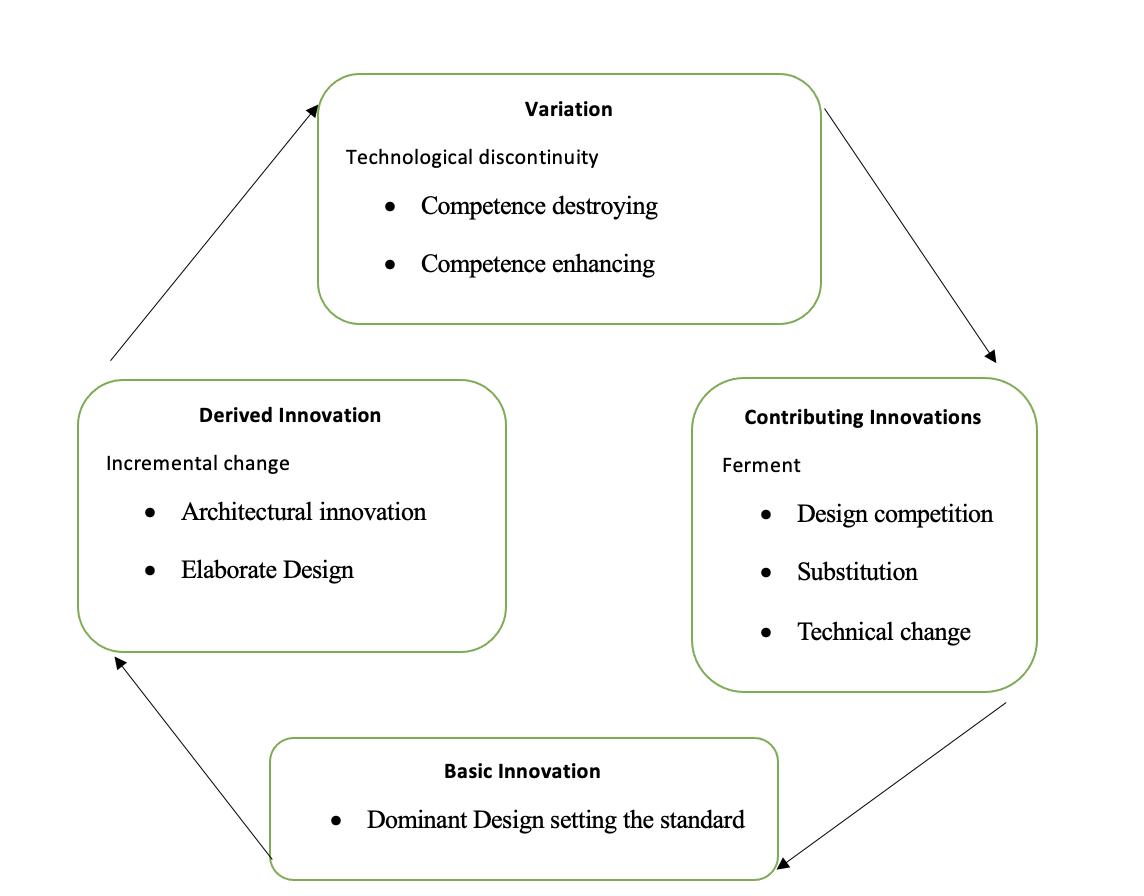

Anderson & Tushman’s technology cycle

Discontinuity

A technological discontinuity is an innovation or disruption that significantly alters the performance trajectory of a market or industry (Anderson & Tushman, 1990). It sets a new bar for product capabilities that renders earlier engineering methods and designs outdated. Rather than incremental gains, discontinuities deliver tenfold or greater improvements. Digital photography, for example, largely displaced film-based imaging. Digital sensors, memory cards, and software processing transformed basic product features such as capacity, flexibility, and sharing. This discontinuity made film cameras functionally obsolete and hastened the decline of businesses like Kodak, which relied on conventional film technology. In this sense, discontinuities reshape consumer demand and force businesses to rethink their entire product design to keep up with emerging technologies.

Period of ferment

A period of ferment is a time of intense experimentation and volatility in which new technologies have appeared but no prevailing design has yet stabilized (Suarez & Utterback, 1995). As developers and entrepreneurs explore the possibilities, many variations arise without established rules. Because uncertainty is high, organizations pursue a variety of strategies in an attempt to shape the eventual standard. The Cambrian explosion of smartphone operating systems circa 2009 exemplifies such ferment. The competition for supremacy among iOS, Android, WebOS, Windows Phone, and BlackBerry OS fragmented the technical landscape, and the absence of a dominant design left app developers with difficult strategic choices about which platforms to support. Eventually, Android and iOS became the dominant platforms, with Android offering an open-source alternative. Although an abundance of options delays standardization, periods of ferment allow for significant innovation. The shift towards incremental improvement begins when the volatility subsides and the industry consolidates around a dominant architecture.

Incremental change

Incremental changes are minor, cumulative innovations that progressively enhance a product’s features and performance without fundamentally changing its core design (Tushman & Anderson, 1986). Once a dominant design is established, businesses innovate incrementally while preserving the fundamental architecture and strengthening the competencies that align with that design. For instance, every new model year in the automotive sector brings minor styling adjustments, better fuel efficiency, and new features such as electronics or safety sensors, while the fundamental internal combustion engine and front-engine, rear-wheel-drive paradigms have remained largely the same. Incumbent enterprises excel at incremental innovation but frequently struggle with discontinuous change. Retaining leadership therefore demands balancing incremental gains with internal disruption and more exploratory innovation. Incremental change does improve current products, but over-reliance on it can create rigidities that leave businesses vulnerable to architectural innovation.

Dominant Design

Although the WIMP (windows, icons, menus, pointer) model has long been the dominant design in human-computer interaction, new technologies point to a coming paradigm change (Yoo et al., 2012). Disruptions causing ferment include distributed artificial intelligence, augmented reality, gestural and voice-based interfaces, and ultraportable and wearable technology (Lyytinen & Rose, 2003). These components might combine into a new dominant design: an ambient computing environment built on edge analytics, 5G connectivity, mobile AI assistants, and ubiquitous displays. Such a design would fundamentally change the desktop paradigm of the past, creating an experience in which interactions flow naturally across devices and interfaces. Nevertheless, moving away from the well-established WIMP architecture raises challenges of user uptake, solution integration, and legacy compatibility. To replace current standards, the next dominant design needs to provide significantly more utility.

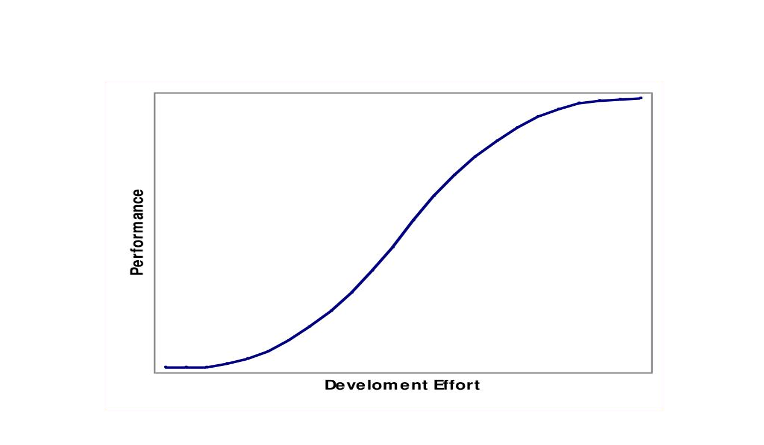

S-curve in Technological Improvement

Performance characteristics

The S-curve depicts how the performance of a new technology improves slowly at first, then rapidly, and finally levels off (Christensen, 1992). Early progress is slow because initial designs are crude and functionality is restricted. Performance then increases quickly as the underlying scientific principles become better understood and technical challenges are resolved, as Moore’s Law in semiconductors exemplifies (Henderson & Clark, 1990). New markets and applications can arise during this period of rapid advancement. Eventually, however, unavoidable financial and physical limitations slow the rate of progress as the practical limits of material miniaturization and production capability are reached. According to Modis and Debecker (1992), performance then improves only gradually along an aging S-curve unless a disruptive new technology emerges. The cycle then repeats, with discontinuities launching new S-curves that outperform earlier ones to satisfy the demand for better price performance.
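The three phases described above can be illustrated with a logistic function, one common way of modelling an S-curve. The following is a minimal sketch rather than a model taken from the cited literature; the function name `s_curve` and the parameter values (`limit`, `rate`, `midpoint`) are assumptions chosen purely for illustration.

```python
import math


def s_curve(effort: float, limit: float = 100.0, rate: float = 1.0,
            midpoint: float = 5.0) -> float:
    """Logistic performance curve (illustrative assumption).

    limit    -- performance ceiling set by physical or financial constraints
    rate     -- how steeply performance rises in the middle phase
    midpoint -- cumulative effort at which half the ceiling is reached
    """
    return limit / (1.0 + math.exp(-rate * (effort - midpoint)))


# Performance gained from one unit of effort in each phase of the curve.
early_gain = s_curve(1.0) - s_curve(0.0)    # slow start
middle_gain = s_curve(5.5) - s_curve(4.5)   # rapid improvement
late_gain = s_curve(10.0) - s_curve(9.0)    # saturation near the limit

for effort in range(0, 11, 2):
    print(f"effort={effort:2d}  performance={s_curve(effort):6.2f}")
```

With these assumed parameters, one unit of effort yields far more improvement in the middle of the curve than at either end, which is the pattern the S-curve is meant to capture.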

Diverse performance measures

Technology progresses along S-curves, although the precise performance metrics vary with the area of innovation (Sahal, 1985). Metrics for CPUs include miniaturization, processing speed, and power efficiency. For aircraft, range, fuel efficiency, payload capacity, and speed indicate progress across successive designs. Other quantitative metrics that apply across technologies include output, bandwidth, cost, accuracy, storage, and dependability. Qualitative attributes such as feature sets, adaptability, and usability can also improve along S-curves. Performance dimensions do not, however, advance equally, because improving one can compromise another (Mishina, 1999). For instance, turbofan engines boosted fuel efficiency at the expense of top speed. Because S-curve limits prevent all measures from being maximised at once, firms must decide strategically which performance aspects to prioritise for innovation. This narrow focus affects competitiveness, since rivals target different aspects to gain a short-term advantage before performance converges as the S-curve matures.

Discontinuities and their impact on the S-curve

According to Anderson and Tushman (1990), discontinuities occur when significant innovations cause an abrupt shift in the performance trajectory, upending long-standing S-curve assumptions. When progress is bounded by the limits of current technology, radical new architectures emerge and allow a tenfold leap rather than incremental advances (Henderson & Clark, 1990). For instance, digital photography using CCD sensors surpassed the analog S-curve of film. This discontinuity changed basic features, including cost per image, shareability, and instant viewing. Incumbents clinging to the old S-curve find it hard to change while startups forge new paths. A discontinuity sets off a fresh learning cycle and a steeper ascent along a new S-curve, which continues until intrinsic limitations return. Once a dominant design emerges, the cycle repeats. Discontinuities are therefore essential breaks that keep an S-curve from becoming permanently locked in. They upend the market and technological forces that entrench the status quo and make room for new breakthroughs that spur advancement.
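The way a discontinuity replaces one S-curve with another can be sketched numerically. The example below is only an illustration under stated assumptions: both technologies are modelled as logistic curves, and the ceilings, midpoints, and the names `old_tech`, `new_tech`, and `crossover` are hypothetical values and helpers, not figures from the sources cited above.

```python
import math


def s_curve(t: float, limit: float, rate: float, midpoint: float) -> float:
    """Logistic curve used here as a stand-in for a technology S-curve."""
    return limit / (1.0 + math.exp(-rate * (t - midpoint)))


def old_tech(t: float) -> float:
    # Hypothetical incumbent: matures early and saturates at a low ceiling.
    return s_curve(t, limit=100.0, rate=1.0, midpoint=5.0)


def new_tech(t: float) -> float:
    # Hypothetical successor: starts later but has a tenfold higher ceiling.
    return s_curve(t, limit=1000.0, rate=1.0, midpoint=15.0)


def crossover(start: int = 0, end: int = 30):
    """Return the first integer time step at which the new technology
    outperforms the old one, or None if that never happens in the range."""
    for t in range(start, end + 1):
        if new_tech(t) > old_tech(t):
            return t
    return None


print("new technology overtakes the old at t =", crossover())
```

In this sketch the new technology performs worse than the incumbent for many periods before overtaking it, which is one way to see why established firms may be slow to abandon a mature S-curve.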

Adopter categories

Innovators

Innovators are technology enthusiasts who willingly take chances on novel but unproven ideas. Research laboratories are at the forefront of this technology, inventing new methods, while startups build products and business plans around it. Despite developers’ enthusiasm, the general public has not yet adopted the technology widely. It may not have crossed the chasm into general use because early adopters and the early majority are not fully on board (Moore, 1999). Worries about cost, learning curve, compatibility, and reliability hamper broader adoption. Unless usability and integration difficulties are addressed with complete solutions that resonate with pragmatists, the innovation spreads slowly and stays within the innovator community (Rogers, 2003). Although the earliest adopters embrace the technology quickly, mainstream adoption will not occur until these uncertainties and constraints are resolved and the ecosystem and complete solutions that more cautious adopters require are in place. Reaching critical mass will take more effort.

Early adopters

Early adopters are visionaries who prioritize strategic impact over short-term financial gain. Some forward-thinking business leaders are among the early users of this technology, testing its application to gain a competitive advantage. Most businesses, however, remain cautious and are holding off until the technology is more turnkey. Because testing by early adopters is still limited, the technology has probably not crossed the chasm (Moore, 1999). According to Rogers (2003), for the technology to become widely used it must be easier to integrate into current workflows and systems, offer complete solutions rather than individual components, and have ecosystem support. These gaps mean that adoption remains confined to innovative organizations and has yet to spread to pragmatists. To bridge the gap, the technology must be adapted to the early majority’s demands for convenience, usefulness, and dependability, which has yet to happen.

Early majority

The early majority looks for complete, well-proven solutions that leave little room for doubt. It has yet to adopt this technology widely because of issues with integration, usability, and migration from legacy systems (Rogers, 2003). While some progressive corporations are testing it, most pragmatic businesses are holding off on a full commitment until the ecosystem has had time to grow and problems have been ironed out. The technology has not yet been translated into solutions fit for large-scale implementation and so far supports only experimental pilots (Moore, 1999). Because the early majority prefers gradual advancement over radical innovation, the technology has probably not yet crossed from visionaries to pragmatists. Further gradual improvements in scalability, reliability, and backward compatibility are required before the early majority embraces the technology rather than merely experimenting with it.

Late majority

The late majority are skeptics who adopt innovations only after they have become widespread. Their adoption is still hampered by concerns about this technology’s dependability, learning curve, and possible obsolescence. The technology has probably not advanced to the point where the late majority, who value tried-and-true standards above novel ideas, would embrace it (Moore, 1999). According to Rogers (2003), the late majority adopts a technology only when complementary products and supporting infrastructure are fully developed and the technology has become essential for sustained competitiveness. With the technology still developing and becoming more specialized, the late majority finds it difficult to adapt without a strong need or a sufficient support network for cautious users. Moving beyond innovators and bridging the gap requires providing the dependability, compatibility, and ecosystem that the late majority demands.

Laggards

Laggards are extremely cautious adopters who accept a new technology only once it has become the norm. They view this innovation as highly uncertain and lack the means to adopt it quickly (Rogers, 2003). Significant defects, costs, and compatibility problems still exist, particularly in the underdeveloped markets that are typical of laggards. Because of these obstacles, it is highly unlikely that the technology has reached laggard segments (Moore, 1999). Laggards adopt only when systems are turnkey, costs are low, and defects have been eliminated, and they require support services that are simple and easily accessible. Given its ongoing limitations, the technology has not yet closed even the gap between innovators and early adopters, so considerable effort will be needed before laggards adopt it.
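The five adopter categories discussed in this section are conventionally defined in Rogers' diffusion model by how far an adopter's time of adoption lies from the mean, measured in standard deviations of an assumed normal distribution. The sketch below illustrates that partition; the function names `adopter_category` and `category_shares` are hypothetical, and the normal-distribution assumption is a simplification rather than a claim about actual VR adoption data.

```python
from statistics import NormalDist

CATEGORIES = ["innovator", "early adopter", "early majority",
              "late majority", "laggard"]


def adopter_category(z: float) -> str:
    """Classify an adopter by z, the adoption time expressed in standard
    deviations from the mean (negative means earlier than average)."""
    if z < -2.0:
        return "innovator"
    if z < -1.0:
        return "early adopter"
    if z < 0.0:
        return "early majority"
    if z < 1.0:
        return "late majority"
    return "laggard"


def category_shares() -> dict:
    """Share of the population in each category under a standard normal
    distribution of adoption times (an idealising assumption)."""
    cdf = NormalDist().cdf
    cumulative = [0.0, cdf(-2.0), cdf(-1.0), cdf(0.0), cdf(1.0), 1.0]
    return {name: cumulative[i + 1] - cumulative[i]
            for i, name in enumerate(CATEGORIES)}


for name, share in category_shares().items():
    print(f"{name:15s} {share:6.1%}")
```

The computed shares are close to the rounded figures usually quoted for the model: 2.5% innovators, 13.5% early adopters, 34% early majority, 34% late majority, and 16% laggards.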

Different innovations, first movers, and market leaders in Virtual Reality

Innovations

A recent wave of hardware and software advancements in virtual reality (VR) aims to make headsets less cumbersome while increasing the realism of the experience. The major advancements include accurate head and motion tracking for realistic perspective and interaction, higher-resolution displays and optics for visual quality, and faster processors for rendering complex worlds (Steuer, 1992). Multi-user VR platforms such as those from Meta use avatars and virtual environments to provide cooperative experiences. Burdea and Coiffet (2003) state that haptics and controllers provide natural input techniques and tactile feedback. Users can experience real-world scenarios, such as concerts or tourist attractions, in photorealistic 3D-captured settings. AI-driven interactive characters make experiences social and adaptive. While advancements in VR strive to produce credible sensory illusions that are indistinguishable from reality, they must also balance realism, accessibility, and playability. As the underlying technology advances, more sophisticated physics simulations, networked virtual environments, and mobile device accessibility may become the main areas of innovation. Creating immersive virtual experiences that are both captivating and challenging remains the fundamental obstacle.

First movers

In the 1960s and 1980s respectively, pioneers such as Ivan Sutherland and Jaron Lanier conducted the early research that advanced virtual reality (Sutherland, 1965; Lanier, 2001). VPL Research and Autodesk were among the first businesses to commercialize basic VR systems. In the 1990s, organizations such as NASA and the University of North Carolina at Chapel Hill expanded the field by developing VR applications and publishing their advances (Blascovich et al., 2002). More recently, Palmer Luckey’s Oculus Rift prototype and its 2012 Kickstarter campaign ignited the current consumer VR boom. Inventive companies such as Magic Leap, HTC (whose Vive was developed in collaboration with Valve), and Oculus (bought by Facebook) want to bring VR to the masses. Other companies that make cutting-edge VR gear and content include VirZoom and Survios. Often led by visionary founders, these first movers take chances on novel technologies. Even though some fade quickly, larger corporations such as Sony, Microsoft, and Samsung incorporate their innovations into their own platforms to create VR that is widely available and improves over time. VR advances are fueled by the interaction between innovative startups and established companies eager to acquire and scale cutting-edge technologies.

Market leaders

Although small startups first drove VR innovation, large technology corporations have taken the lead in the market by purchasing these startups and using their resources to grow. In 2014, Facebook paid $2 billion to acquire Oculus, and it has since reduced consumer prices while investing in hardware and content (Boland et al., 2022). Sony enhanced the gaming experience by bringing virtual reality to its PlayStation platform. Microsoft, together with hardware partners, introduced Windows Mixed Reality headsets for PCs. Samsung and Oculus collaborated on the Gear VR smartphone headset before the market moved toward standalone models from vendors such as Oculus and HTC. Apple entered the VR market later than other companies, but its ecosystem and user base could give it broad reach (Liu, 2022). Over the next ten years, these large technology companies hope to achieve widespread adoption of VR headsets through content partnerships, subsidized pricing, and continual improvement. Chinese companies such as Pico and DPVR aim for lower-cost mass adoption in their home market. The industry leaders’ financial resources, complementary assets, and strategic vision may ultimately lead to the general acceptance of virtual reality.

The future of Virtual Reality

Over the next five to ten years, virtual reality is expected to become a widely used consumer technology as costs fall and content libraries grow (Rauschnabel et al., 2022). Dedicated VR headsets may come to rival smartphones for gaming, watching media, interacting with others, and having immersive experiences. Lightweight mobile VR devices will become possible as cloud computing offloads computation and storage, and 5G networks will facilitate cross-device interoperability and real-time rendering (Boland et al., 2022). Speech, eye tracking, and gesture-based natural interfaces will drive the evolution of input techniques. AI will personalise experiences, and predictive analytics will make environments more responsive. As the technology continues to shrink, AR integration will allow digital information to be superimposed on real-world views through ordinary glasses and lenses. However, many issues remain to be resolved, including content creation, nausea and vertigo, perceptual mismatches, the hygiene of shared devices, privacy, and the possible psychological effects of extended immersion.

Over time, virtual and augmented reality will transform fields such as education, healthcare, entertainment, design, and workplace collaboration (Bower et al., 2022). Virtual telepresence will make realistic meetings possible without business travel. Doctors will be able to rehearse complicated surgeries and access patients’ anatomical data during treatment. Classes will explore time and space through virtual field trips. Should augmented and virtual realities come to supplant actual reality, however, society could become even more divided and isolated (Madary & Metzinger, 2016). As the technology advances, this possible alienation from our natural surroundings and communities will raise significant ethical and philosophical issues. Extended reality has many appealing features, such as improved connectivity across geographical boundaries, operational efficiencies, and experiential opportunities. However, its deeper sociological and psychological implications should be carefully considered as the technology is developed.

Conclusion

The first mass-market consumer virtual reality headsets appeared in the 2010s, marking a significant advance from the rudimentary experiments of the 1960s. VR has progressed through several waves of innovation, with significant contributions from corporations, startups, and pioneering research institutes. Progress in haptics, visual fidelity, tracking, and interactive content has made VR increasingly immersive. Although its initial niche use cases were limited to industry, gaming, and research, reduced costs and increased realism have put the technology on track for wide use. Market leaders such as Facebook and Sony, with their ability to scale VR technically and commercially, are essential to this widespread adoption. Future advances are expected to draw on cloud computing, 5G connectivity, augmented and mixed reality capabilities, wearable and mobile form factors, and AI personalization to make VR ubiquitous for work, education, communication, and media consumption. Areas of concern remain, including a societal shift towards virtual worlds at the expense of genuine relationships, physical side effects, psychological repercussions, and superficial content. As technology expands the potential of virtual reality, responsible innovation demands attention to these ethical concerns. Ultimately, extended reality has the potential to significantly affect many aspects of society, including entertainment, productivity, human-computer interaction, and even social structures.

References

Boland, D. et al., 2022. Twelve years of consumer virtual reality: A review of consumer head-mounted displays’ adoption, use and impact. New Media & Society, 24(9), pp. 2992-3009.

Rauschnabel, P.A. et al., 2022. An adoption framework for mobile augmented reality games: The case of Pokémon Go. Computers in Human Behavior, 123, p.106751.

Burdea, G.C. and Coiffet, P., 2003. Virtual reality technology. Presence: Teleoperators & virtual environments, 12(6), pp.663-664.

Sutherland, I.E., 1965, December. The ultimate display. In Proceedings of IFIP Congress (Vol. 2, pp. 506-508).

Anderson, P. and Tushman, M.L., 1990. Technological discontinuities and dominant designs: A cyclical model of technological change. Administrative Science Quarterly, pp.604-633.

Tushman, M.L. and Anderson, P., 1986. Technological discontinuities and organizational environments. Administrative Science Quarterly, pp.439-465.

Lyytinen, K. and Rose, G.M., 2003. Disruptive information system innovation: the case of internet computing. Information Systems Journal, 13(4), pp.301-330.

Yoo, Y., Boland Jr, R.J., Lyytinen, K. and Majchrzak, A., 2012. Organizing for innovation in the digitized world. Organization Science, 23(5), pp.1398-1408.

Christensen, C.M., 1992. Exploring the limits of the technology S‐curve. Part I: component technologies. Production and operations management, 1(4), pp.334-357.

Henderson, R.M. and Clark, K.B., 1990. Architectural innovation: The reconfiguration of existing product technologies and the failure of established firms. Administrative Science Quarterly, pp.9-30.

Steuer, J., 1992. Defining virtual reality: Dimensions determining telepresence. Journal of communication, 42(4), pp.73-93.

Mishina, K., 1999. Learning by new experiences: revisiting the flying fortress learning curve. In Learning by doing (pp. 145-164). Routledge.

Sahal, D., 1985. Technological guideposts and innovation avenues. Research policy, 14(2), pp.61-82.

Moore, G.A., 1999. Crossing the chasm: Marketing and selling disruptive products to mainstream customers. HarperCollins.

Rogers, E.M., 2003. Diffusion of innovations. Simon and Schuster.

Blascovich, J. et al., 2002. Immersive virtual environment technology as a methodological tool for social psychology. Psychological Inquiry, 13(2), pp.103-124.

Lanier, J., 2001. Virtually there. Scientific American, 284(4), pp.66-75.

Liu, S., 2022. What happened to Apple’s VR/AR glasses? Harvard Business Review, 3.

Bower, M. et al., 2022. Applications of virtual reality in the corporate sector: A systematic literature review incorporating citation network and content analyses. Frontiers in Virtual Reality, 3.

Madary, M. and Metzinger, T.K., 2016. Real virtuality: a code of ethical conduct. Recommendations for good scientific practice and the consumers of VR-technology. Frontiers in Robotics and AI, 3, p.3.
