Introduction

Facial recognition technology has become increasingly prevalent in various domains, from law enforcement to consumer applications. While this technology provides efficiency and convenience, there are concerns about its impact on marginalized communities, especially minorities (Gong et al. 2020, 255). Research has indicated that facial recognition algorithms frequently display biases and inaccuracies when identifying individuals from racial and ethnic minority groups. Scholars emphasize that more diverse and comprehensive datasets are needed to improve the accuracy and equity of these algorithms. Advocacy efforts have emerged to address these issues and to encourage equality in the use of facial recognition technology (Lee et al. 2019, 3). This essay examines advocacy efforts to address the biases and privacy risks that facial recognition technology poses for minorities, highlighting the significance of dataset diversification, policy interventions, and algorithmic improvement. By examining the insights and solutions provided by different scholars, this essay advocates for a holistic and multi-faceted approach to ensure a more equitable future for minorities.

Algorithmic improvement

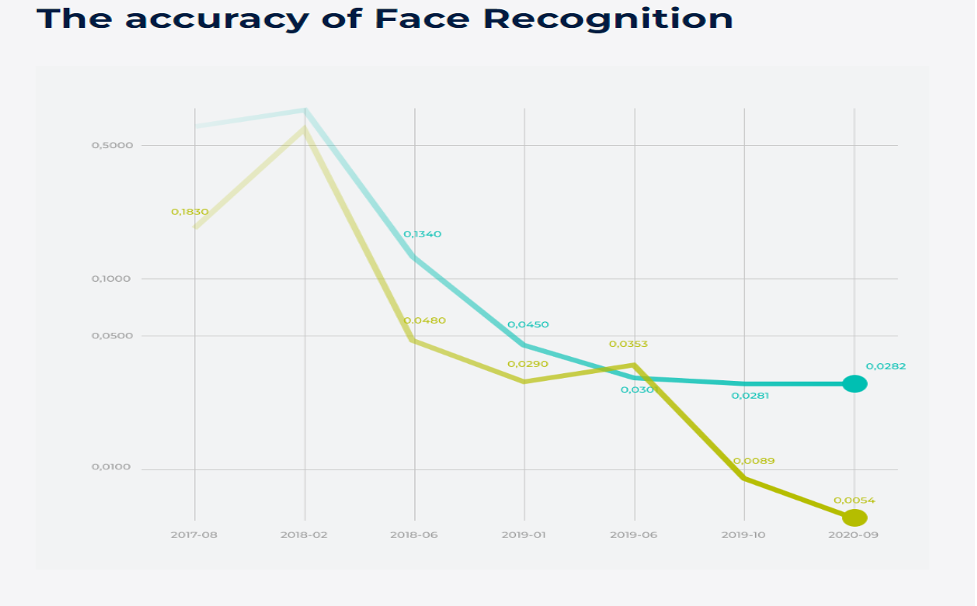

Algorithmic improvement is a significant aspect of addressing the effects of facial recognition technology on minorities (Lee et al. 3). According to Innovatrics’ Trust Report, the accuracy of face recognition technology has improved significantly: error rates have fallen and identification has become more reliable. The accompanying figure visually represents the improvement in the accuracy of face recognition technology over time. The x-axis shows the years in which each new algorithm was measured, and each vertical line in the graph represents the latest algorithm developed at that particular time. The y-axis represents the accuracy ratio of the False Reject Rate (FRR) and the False Accept Rate (FAR). This ratio is used to assess the accuracy of face recognition systems, with a lower ratio indicating higher accuracy (Rusnáková 2021).
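To make the two error rates concrete, the following minimal Python sketch shows how FAR and FRR could be computed from comparison scores at a chosen decision threshold. It is an illustration under stated assumptions rather than the method used in the Innovatrics report: the function name `far_frr`, the score lists, and the threshold are all hypothetical.

```python
def far_frr(genuine_scores, impostor_scores, threshold):
    """Return (FAR, FRR) for similarity scores at a decision threshold.

    Assumption: a comparison is accepted when its score is >= threshold.
    genuine_scores  -- scores for pairs of images of the same person
    impostor_scores -- scores for pairs of images of different people
    """
    if not genuine_scores or not impostor_scores:
        raise ValueError("both score lists must be non-empty")
    # A false reject is a genuine pair that falls below the threshold.
    false_rejects = sum(1 for s in genuine_scores if s < threshold)
    # A false accept is an impostor pair that reaches the threshold.
    false_accepts = sum(1 for s in impostor_scores if s >= threshold)
    frr = false_rejects / len(genuine_scores)
    far = false_accepts / len(impostor_scores)
    return far, frr


# Hypothetical similarity scores in [0, 1]; not taken from any real system.
genuine = [0.91, 0.85, 0.40, 0.77, 0.95]
impostor = [0.10, 0.30, 0.65, 0.20, 0.05, 0.15, 0.45, 0.25, 0.35, 0.55]
far, frr = far_frr(genuine, impostor, threshold=0.6)
print(f"FAR = {far:.2f}, FRR = {frr:.2f}")
```

Raising the threshold lowers FAR at the cost of a higher FRR, which is why the two rates are usually reported together.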

Scholars from various fields have conducted comprehensive studies detailing strategies to address this bias, and their perspectives merit the attention of governments and all other parties involved. Among the suggested methods is the information maximization adaptation network of Wang et al. (2019), which is geared towards reducing racial bias in face recognition. Their approach aligns the distributions of facial images from different racial groups and shows promising results in reducing bias and improving accuracy. Another study, by Wang and Deng (2020), proposes a skewness-aware reinforcement learning method for mitigating bias in face recognition. By accounting for class imbalance during the learning process, their approach aims to reduce bias and enhance performance, particularly for underrepresented groups (Gong et al. 2020, 255).

Dataset Diversification

Dataset diversification is another pivotal approach to tackling the adverse effects of facial recognition technology on minorities (Kärkkäinen and Joo 2021). Scholars from multiple academic fields, including the social sciences and engineering, have studied the issue extensively and underscored its importance. Significantly, Buolamwini and Gebru’s (2018) groundbreaking research highlights the critical need for dataset diversification as a means of addressing the bias associated with these technologies. Their study, “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification,” reveals discrepancies in gender classification accuracy across different demographic groups. They contend that incorporating a wider variety of facial images representative of marginalized communities would help mitigate algorithmic bias and improve the performance of facial recognition systems.
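The kind of disparity described above can be measured by computing accuracy separately for each demographic group and comparing the results. The Python sketch below is a simplified illustration, not the evaluation code from the Gender Shades study; the function names, group labels, and records are hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return classification accuracy for each demographic group.

    records -- iterable of (group, true_label, predicted_label) tuples
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        if truth == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(per_group):
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical audit records; groups and labels are placeholders.
records = [
    ("group_a", "F", "F"), ("group_a", "M", "M"),
    ("group_a", "F", "F"), ("group_a", "M", "M"),
    ("group_b", "F", "M"), ("group_b", "M", "M"),
    ("group_b", "F", "F"), ("group_b", "F", "M"),
]
per_group = accuracy_by_group(records)
gap = max_accuracy_gap(per_group)
print(per_group, gap)
```

A single overall accuracy figure would hide the gap that this breakdown exposes, which is the reason audits report results per group.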

Additionally, there have been attempts to compile datasets that cover a range of races and ethnicities in order to address under-representation in facial recognition technology. One example is the Pilot Parliaments Benchmark (PPB) dataset, introduced by Buolamwini and Gebru (2018), which contains pictures of parliamentarians from numerous backgrounds (Raji et al. 2020). With such varied datasets, machines could learn to distinguish faces from communities often left out by classification methods. Nevertheless, diversifying data collection involves difficulties and expenses. Including individuals’ faces raises privacy concerns about their consent, and collecting representative datasets can be laborious and costly. It is therefore essential to follow an ethical methodology when obtaining such data and to document the labeling process for all images used. Protecting individual privacy rights also goes hand in hand with responsible use of the technology in surveillance (Raji et al. 2020).

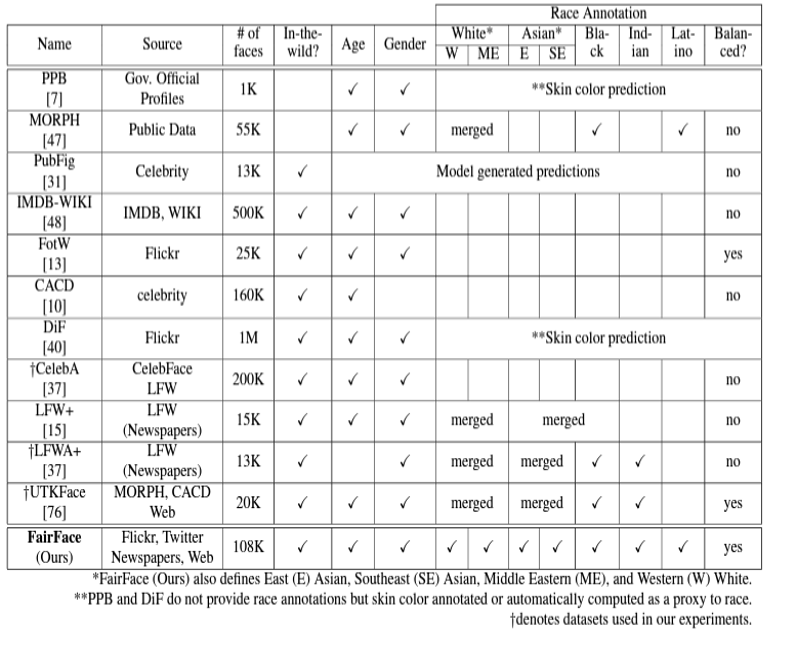

Other scholars have also emphasized the need to diversify training datasets, echoing Buolamwini and Gebru’s study (“Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification,” 2018). Diversifying datasets with representative images can help mitigate biases and improve performance for marginalized communities. Such improvements have short-term benefits but require ongoing refinement and adaptation for long-term effectiveness. The accompanying table by Kimmo Kärkkäinen and Jungseock Joo summarises the FairFace dataset. It includes the number of images in the dataset, their distribution, and the annotations for each image, including race, gender, and age.

The FairFace dataset is an extensive and diverse collection well suited to training and evaluating face recognition algorithms (Kärkkäinen and Joo 2021). Notably, the dataset has been designed to be balanced across race, gender, and age groups, which reduces bias against any particular group of people in algorithm outcomes. The annotations for each image are also of high quality, which helps to ensure that the algorithms are trained on accurate data.
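One simple way to check how balanced a dataset is across groups is to compare each group’s share of the images. The Python sketch below illustrates the idea; it is not the procedure used to build FairFace, and the function names and label counts are hypothetical.

```python
from collections import Counter


def group_shares(labels):
    """Return each group's share of the dataset as a fraction of all items."""
    counts = Counter(labels)
    if not counts:
        raise ValueError("labels must be non-empty")
    n = sum(counts.values())
    return {group: count / n for group, count in counts.items()}


def imbalance_ratio(labels):
    """Size of the largest group divided by the size of the smallest.

    A value of 1.0 means every group present is equally represented.
    """
    counts = Counter(labels)
    if not counts:
        raise ValueError("labels must be non-empty")
    return max(counts.values()) / min(counts.values())


# Hypothetical group annotations; the counts are invented for illustration.
skewed = ["group_a"] * 6 + ["group_b"] * 3 + ["group_c"] * 1
balanced = ["group_a"] * 4 + ["group_b"] * 4 + ["group_c"] * 4

shares = group_shares(skewed)
print(shares, imbalance_ratio(skewed), imbalance_ratio(balanced))
```

This check only covers groups that appear in the annotations, so a group that is missing entirely has to be detected by comparing against a list of expected groups.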

Policy Implementation

Crafting well-informed policies requires understanding the potential dangers of facial recognition technology for disadvantaged communities. Experts across the sciences and social sciences argue that policymakers should remain cautious. Caution allows authorities to ensure that marginalized voices are heard while developing solutions that serve all groups affected by these technologies. For example, Introna and Nissenbaum (2010) claim that policies should prioritize privacy, consent, and accountability when protecting the rights and interests of individuals belonging to underrepresented communities.

With regard to privacy, the European Union’s General Data Protection Regulation (GDPR) is another notable policy intervention. The GDPR sets rules for collecting, storing, and processing personal data, including the biometric information used in facial recognition systems. Its provisions require explicit consent, purpose limitation, and transparency, aiming to protect individuals’ privacy and prevent the discriminatory impact of facial recognition technology (Ferguson 2020). In the United States, the Algorithmic Accountability Act (AAA) has been proposed to regulate the use of facial recognition technology. The AAA calls for companies to assess their algorithms’ fairness, accuracy, and potential biases and to disclose their findings (Ferguson 2020). This policy intervention addresses the disproportionate impact on minority communities by holding companies accountable for developing and deploying facial recognition systems.

Different studies have also highlighted the importance of community involvement in policymaking processes. Incorporating community perspectives into policymaking, as advocated by Ferguson (2020), affirms the pressing need to recognize affected populations’ needs and values. By working hand in hand with communities through robust engagement practices, policymakers can gain greater insight into the issues facing marginalized groups while forging equitable solutions.

Alongside this strategy, reducing surveillance is another evidence-based measure that confronts bias and safeguards the privacy of minorities subjected to facial recognition technology (FRT). Studies have found clear evidence of strong racial and gender bias in current facial recognition technology, resulting in the unfair targeting or profiling of minority groups (Bacchini and Lorusso 2019). Curbing such discriminatory outcomes requires measures that reduce the indiscriminate deployment of FRT systems in public spaces. Reducing ubiquitous surveillance must be considered because such surveillance poses severe ethical dilemmas for minorities’ rights and interests. The increasing use of FRT without appropriate consent raises serious questions about ethics and privacy (Wang et al., 2020). Therefore, policies and regulations that restrict intrusive surveillance and provide comprehensive privacy protections are essential to safeguard the civil liberties and privacy rights of minority communities.

Policy Interventions

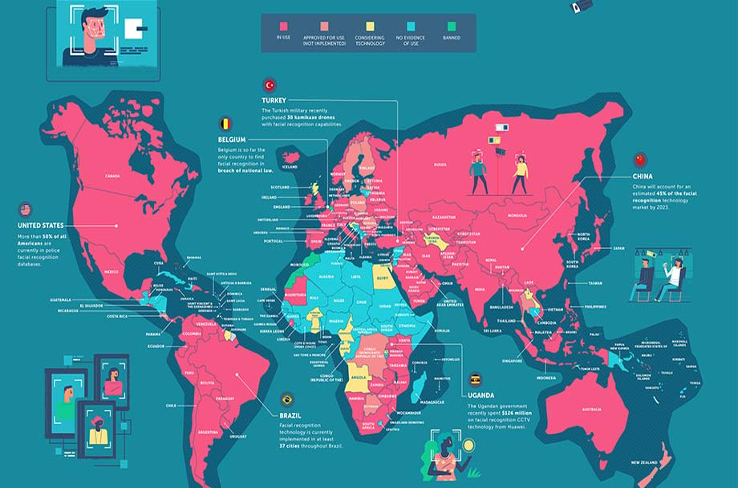

This map shows that many countries are enacting laws or regulations governing the use of facial recognition technology. These laws and regulations can help to protect privacy and civil liberties, and they can also help to ensure that facial recognition technology is used fairly and equitably (Anderson 2023).

Policy interventions commonly yield positive short-term outcomes because they raise awareness and establish legal standards. However, their effectiveness over time depends mainly on successful implementation and enforcement in practice. Despite their potential advantages, these interventions face various challenges, among them resistance from industry stakeholders and the need for ongoing adaptation to rapidly advancing technologies.

Implementing policy interventions therefore involves investing resources in crafting policies that consider stakeholder input and in monitoring compliance. Despite these costs, policy interventions bring numerous benefits, such as improving transparency and accountability and protecting individual rights. By regulating facial recognition technology, policies promote fairness by reducing the prejudices that significantly affect minorities. Addressing the effects of facial recognition technology on minority groups requires policy measures that are informed by quality research, ethically grounded, and receptive to input from different participants. Academics bring vital perspectives that enhance the formulation and execution of policies so that they reasonably accommodate all members of society.

Recommendation and Review

While significant efforts have been made to address the effects of facial recognition technology on minorities through dataset diversification, policy interventions, and algorithmic improvement, it can be argued that none of these efforts alone is fully effective in solving the problem. This viewpoint arises due to several challenges and limitations associated with each approach. Recognizing these shortcomings and exploring alternative and complementary next steps to advance toward a more equitable and fair landscape becomes crucial.

Although promising, dataset diversification encounters challenges of representativeness, scalability, and ongoing maintenance. Despite efforts to include a broader range of facial images, it remains challenging to capture the full diversity of racial and ethnic groups. Additionally, as demographics evolve, datasets need continuous updates to remain current and relevant. To overcome these limitations, future steps could involve expanding collaborations and partnerships with communities, research institutions, and advocacy groups to ensure ongoing collection and annotation of diverse datasets. Engaging individuals from marginalized communities in the dataset creation process can help address privacy and consent concerns and ensure ethical practices (Van Noorden 356). Policy interventions also face difficulties with implementation, enforcement, and keeping pace with technological advancements. Complex legal and political landscapes often slow the development and implementation of robust policies.

Finally, algorithmic improvement is the most promising solution, but it can only partially solve the problem due to inherent limitations and potential unintended consequences. Although advances in machine learning and reinforcement learning can reduce biases in some cases, biases may be embedded in the data itself, which poses a challenge (Lee et al. 3). Moreover, improving algorithms frequently requires expertise, substantial computing power, and continued modification. Mitigating these challenges requires ongoing research into alternative approaches, such as interpretable and explainable models, that could help reduce bias while promoting transparency. Fostering collaboration between researchers, ethicists, and industry experts can also lead to the development of comprehensive guidelines for responsible algorithmic deployment (Lee et al. 3). In light of the limitations of individual efforts, a holistic and multi-faceted approach is necessary.

Conclusion

In conclusion, addressing the impact of facial recognition technology on minorities requires a multi-faceted and holistic approach that combines dataset diversification, policy interventions, and algorithmic improvement. Each of these efforts has its strengths and limitations, emphasizing the need for collaboration, ongoing refinement, and adaptation. Dataset diversification aims to mitigate biases by including a more comprehensive range of facial images, but challenges such as representativeness and scalability must be addressed. Policy interventions provide legal frameworks and accountability, but implementation and enforcement can be challenging in a rapidly evolving technological landscape. Algorithmic improvement can reduce bias, but inherent limitations and unintended consequences necessitate ongoing research and responsible deployment.

Furthermore, alternative and complementary next steps are needed to advance toward a more equitable and fair landscape. Expanding collaborations and partnerships with communities and research institutions can ensure ongoing collection and annotation of diverse datasets, addressing privacy and consent concerns. An interdisciplinary collaboration involving policymakers, legal experts, technologists, and community representatives can develop comprehensive and adaptive policies. Continued research into alternative algorithmic approaches and collaboration between researchers, ethicists, and industry experts can lead to responsible and transparent algorithmic deployment.

Therefore, it is essential to recognize that none of these efforts alone can fully solve the problem. A comprehensive approach that integrates these efforts, fosters collaboration, and prioritizes inclusivity, transparency, and accountability is necessary. Ongoing monitoring, evaluation, and audits of facial recognition systems, with a focus on minimizing harm, protecting privacy, and promoting social justice, are crucial. By embracing this comprehensive approach and considering the needs and aspirations of affected communities, significant progress can be made in mitigating the effects of facial recognition technology on minorities and promoting a more equitable future.

Works Cited

Anderson, Larry. “Map Illustrates Usage of Facial Recognition around the World.” Sourcesecurity.com, 2023, www.sourcesecurity.com/insights/map-illustrates-usage-facial-recognition-world-sb.1591782979.html?utm_source=SIc&utm_medium=Redirect&utm_campaign=Int%20Redirect%20Popup. Accessed 24 May 2023.

Bacchini, Fabio, and Ludovica Lorusso. “Race, again: How face recognition technology reinforces racial discrimination.” Journal of Information, Communication and Ethics in Society (2019).

Buolamwini, Joy, and Timnit Gebru. “Gender shades: Intersectional accuracy disparities in commercial gender classification.” Conference on fairness, accountability, and transparency. PMLR, 2018.

Ferguson, Andrew Guthrie. “Facial recognition and the fourth amendment.” Minn. L. Rev. 105 (2020): 1105.

Gong, Sixue, Xiaoming Liu, and Anil K. Jain. “Jointly de-biasing face recognition and demographic attribute estimation.” Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXIX 16. Springer International Publishing, 2020.

Introna, Lucas, and Helen Nissenbaum. “Facial recognition technology: A survey of policy and implementation issues.” (2010).

Kärkkäinen, Kimmo, and Jungseock Joo. “FairFace: Face attribute dataset for balanced race, gender, and age for bias measurement and mitigation.” Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. 2021, https://doi.org/10.1109/wacv48630.2021.00159. Accessed 24 May 2023.

Kortli, Yassin, et al. “Face recognition systems: A survey.” Sensors 20.2 (2020): 342. https://doi.org/10.3390/s20020342

Lee, Nicol Turner, Paul Resnick, and Genie Barton. “Algorithmic bias detection and mitigation: Best practices and policies to reduce consumer harms.” Brookings Institution, Washington, DC, 2019.

Raji, Inioluwa Deborah, and Genevieve Fried. “About face: A survey of facial recognition evaluation.” arXiv preprint arXiv:2102.00813 (2021).

Raji, Inioluwa Deborah, et al. “Saving face: Investigating the ethical concerns of facial recognition auditing.” Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society. 2020.

Rusnáková, Barbara. “How the Accuracy of Facial Recognition Technology Has Improved over Time.” Innovatrics, 20 Mar. 2021, https://innovatrics.com/trustreport/how-the-accuracy-of-face-recognition-technology-has-improved-over-time/

Van Noorden, Richard. “The ethical questions that haunt facial-recognition research.” Nature 587.7834 (2020): 354-359.

Wang, Mei, and Weihong Deng. “Mitigating bias in face recognition using skewness-aware reinforcement learning.” Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2020.

Wang, Mei, et al. “Racial faces in the wild: Reducing racial bias by information maximization adaptation network.” Proceedings of the IEEE/CVF International Conference on Computer Vision. 2019.
