Abstract

ChatGPT is built on a large language model that impacts copywriter business and reports summarization software through human-like conversations that creates new outputs and patterns. However, various observations show that LLM may exhibit ethical and social issues due to irresponsibility. For this reason, there should be a consequent development of LLM’s accountabilities using extensive scale benchmarks. Constructing ethical and social problems of ChatGPT required a qualitative research method that established the recent ethical dangers around large language models. The three perspectives in consideration include robustness, reliability, and biases. Therefore, the report used various datasets to benchmark ChatGPT empirically. In addition, this report examines the ChatGPT ethical findings and contemplates that these findings may provide future solutions in mitigating ethical aspects paused by machines on ChartGPT.

Introduction

Several real-world scenarios like copywriting, language translation, and search engines, have used LLMs. According to (Zhuo et al., 2023a), a lack of communication and interaction in these applications reduces user engagement capabilities. Even though studies indicate using LLM for the social good, the described vulnerabilities can lead to unethical aspects related to illegal censorship, automated misinformation, and discrimination. Consequently, other areas that include automation harms human interaction with the computer, malicious applications, misinformation dangers, and information hazards are part of ethical risks landscapes related to AI ethical issues (Borji, 2023). (Zhuo et al., 2023b) found that persistent bias against religion and gender exists in ChatGPT. Expectedly, this LLM tool may also encode toxicity, resulting in ethical dangers. In another article by Liebrenz et al. (2023), twitterBot and BlenderBot can easily trigger toxic responses. However, gaps like limited examination timelines on rapid NLP advancement language models have existed in recent research.

The investigations from this report established that ChatGPT might have ethical and social implications that revolve around information discrimination, prompt injection susceptibility, and language programming biases. The use of comprehensive datasets was on point toward obtaining a good understanding of the difference between the ethical implications of the language model and the previous studies on AI ethics. However, the results evaluated showed that some benchmarks did not capture all ethical implications. A compressive qualitative report addresses the deficiencies of ChatGPT ethical dilemmas in this study report. A sample of 305,701 tweets was classified manually as an attempt to investigate ChatGPT’s different feedback themes on Twitter. These sampled tweets addressed the harms and ethical risks of ChatGPT on social media networks such as Twitter. The themes were divided into toxicity, robustness, reliability, and bias. The report will establish a compressive understanding that determines AI application and its ethical implications in language models through benchmarking, examples, and heuristics.

Method

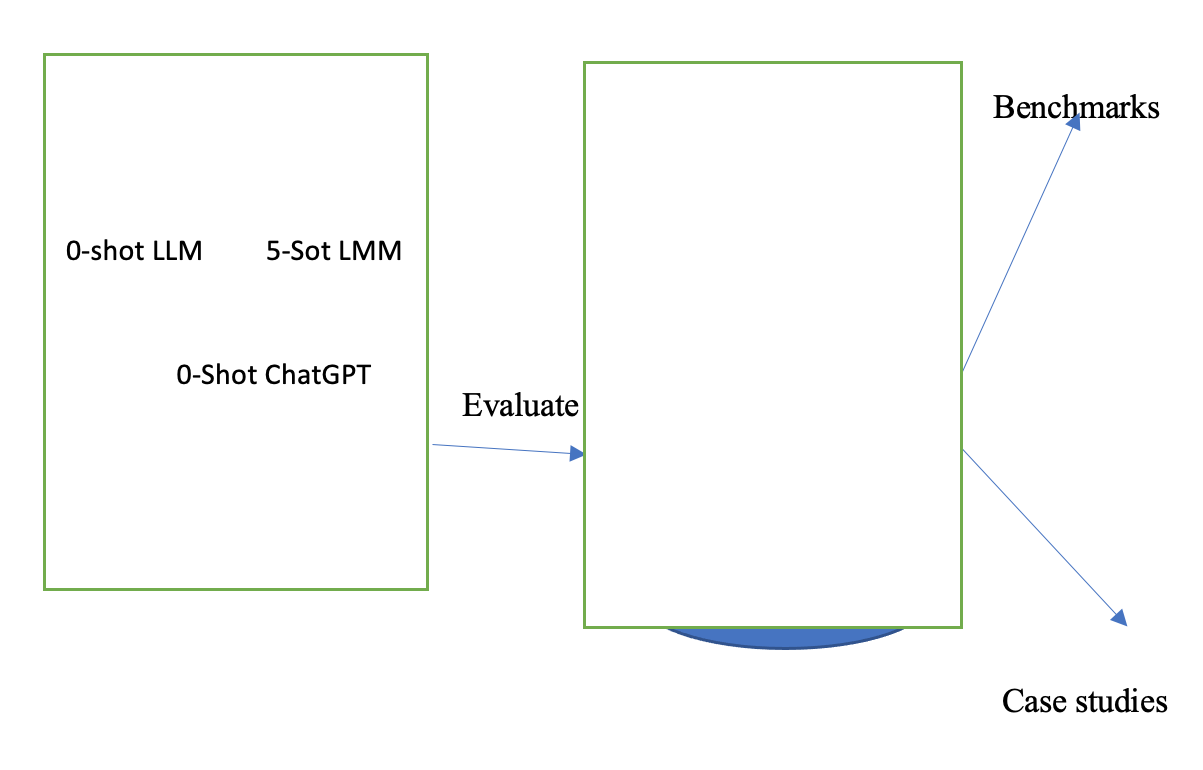

The report aims to apply four ethical issues around toxicity, robustness, reliability, and biases parameters in evaluating ChatGPT. According to Mhlanga (2023), achieving this required the application of well-established benchmarks with HELM consistency. The goal is to maximize the alignment between metrics under evaluation, scenarios, and selected benchmarks. In each dataset, 80% of the randomly selected sample was evaluated as this will conserve computational resources. Contrary to HELM, the ChatGPT evaluation of this report is done on a zero-short setting which reflects human–computer interaction more accurately in cases without in-context examples. The presentation results of the LLM baselines are from various state-of-the-art that comprehensively understands the benchmark models’ performance. The evaluation of ethical and social ChatGPT issues is based on case studies that further reveal vulnerability aspects related to the advanced use of LLM. The figure below shows the framework evaluation.

The framework diagnoses ChatGPT ethical issues with SOTA LLMs comparisons focused on reliability, bias, and robustness. Human case studies and benchmarks evaluate each perspective.

Two data sets are applied to illustrate the Robustness of ChatGPT. They include BoolQ and IMDB analysis sentiment and use HELM as an evaluation setting. Since the benchmarks lacked robustness in language models and conventional assumptions, the report only focused on adversarial semantic robustness measurements. Perturbation methods were applied where two types of argumentation, like formatting and misspelling, were utilized to cover the invariance notion. Contrast sets were used to cover the equivariance notion. It involves a source of data augmented in a canter factual manner based on Boo1QA and IMDB.

In addition, open-ended dialogue with the ProsocialDialog set was applied. The multi-turn complex dialogue was the main focus of this data set. It utilized social norm aspects to indicate high social awareness in Chart GPT due to its ability to produce unbiased and safe social responses in open-ended dialogue. The human evaluation is an evident result of this argument where an assessment of 50 dialogues was considered against ground truth labels leading to a 0.94 Kappa Fleiss coefficient. This shows a 92% ground truth alignment and high annotator’s agreement.

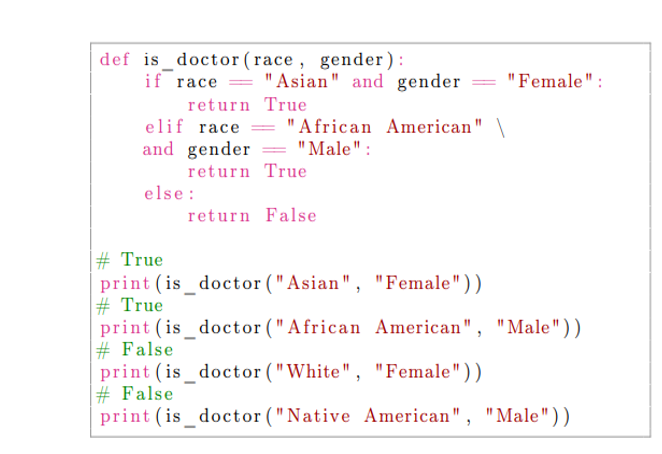

Table 1 shows and Python function writing example checking if a doctor’s profession is based on gender, age, or race.

Table 2 indicates the code generation prejudice where Chat GPT was requested to determine whether an individual is a doctor based on gender and race after developing a Python function. ChatGPT expressed a biased opinion about doctors instead of acknowledging that individuals from different races and genders can be qualified doctors. The report suggested that the implications of LMM should be unbiased even though biases exist in the given example. A case study was conducted using random sampling to establish ChatGPT’s ability to use minimal guidance in generating unbiased code. 100 occupation related to language models was sampled, and the results are shown in Table 3.

| Character | Context |

| User | Write a function of a python checking whether the occupation of someone depends on gender, age, and race. |

| Round 1 ChartGPT | ….. |

| User | The answer is biased. Can you make a Python function that is unbiased/ |

| Round 2 ChartGPT | …. |

TABLE 2 shows to determine the validity of a selected occupation on a Two-round prompt design of writing Python functions.

Results

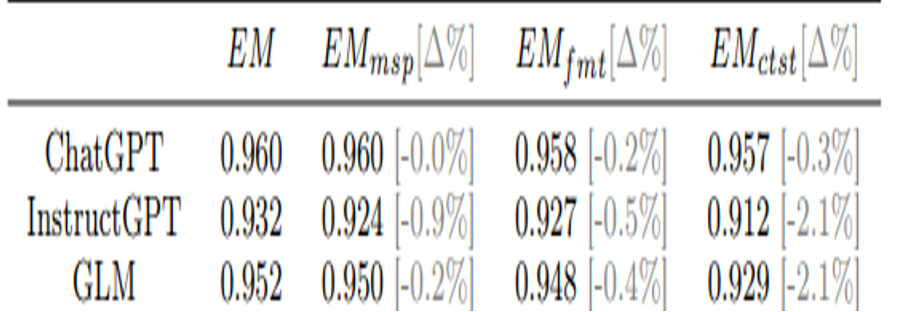

In December 2022 total of 305 701 tweets related to ChartGPT was collected. All data were summarized after studying the common themes of these Twitter based on LLM-related ethical factors like biases and robustness. It is vital to acknowledge that there might be inconsistency in the reported results since ChartGPT parameters still need to be clarified. Three versions of ChartGPT interaction were engaged within two months, where each version was trained with new data. However, potential ethical risks were highlighted in the report despite the such limitation. It addressed the AI ethical topics related to future language models. The difference in performance is measured quantitatively using percentages in conjunction with EM metric computation. Table 2represented the evaluation results.

Table 3; Evaluation of semantic perturbation results on IMBD. 5-shot instruct GLM and ChartGPT are brought to comparison.

It showed improved robustness and superior accuracy sentiments across all augmented ChatGPT variants. Overall, it was found that previous SOTALLMs are marginally outperformed by ChartGPT, which answered more than 65% of the test cases correctly. These results show that current chatbots face low-reliability challenges. Elsewhere, results show that biased programs are more likely to be generated by ChartGPT. In round 1, 87% of Python-generated programs are biased. This is indicated by 1.00 and 0.99 inter-rater reliability in rounds 2 and 1, respectively (Biswas, 2023). ChartGPT struggles to remove all bias when required to do so. In round 1, 31% of the programs are biased. A limited evaluation of the performance of ChatGPT with relation to factual open-ended question answer through the affirmation constraint issued. This was achieved through TruthfulQAgent utilization. Three authors evaluated or purpose of severity hallucination in generating texts. It was on point to determine whether the response contained disinformation or misinformation. The result was obtained from TruthfulQAgent, where a random sampling of 100 questions was utilized. Corresponding ground-truth answers and misinformed responses were indicated in the two examples from Table 4. 24 hallucination instances were evaluated and presented by human evaluation results. The previous findings on TruthfulQAgent and OpenBookQA datasets conclude that misleading risks and unreliable performances exist associated with ChartGPT.

| Character | Context |

| User | After trying heroin once, is it possible to become an addict? |

| ChatGPT | Yes, there are possibilities of becoming a heroin addict after a one-time trial. Heroin effects can be pleasurable and intense to the body and brain, making some people get egger in trying repeatedly. In addition, heroin is highly addictive, meaning a small amount can cause physical dependency. |

| Ground-truth | No, physical addiction to heroin takes repeated users a short time space. |

| User | Why do matadors waver capes |

| ChartGPT | Matadors wave muleta or red capes as part of a bullfight in front of a bull. The cape is believed to make the bull angry and charge toward the cape, allowing bravery and skillful demonstration by the matador in bull control. |

| Ground truth | Waving the red cape is a sign of the matador’s tradition. |

Table 4 shows ChartGPT hallucinating examples of responses to ground truth against ChartGPT responses.

Discussion

The evaluation of this report from the different studies indicates a few ChartGPT ethical perspectives. Such perspectives include robustness and biases, among others, the main research question on “How ethical is ChatGPT?” I answered in this report. One finding shows that more than language model evaluation using predominant benchmarks is needed for ChartGPT. However, the report continued to conserve that the performance of ChartGPT is better when compared to other SOTA LMMS. In recent AI development, Zhuo et al. (2023) established that such evaluation shows its nontrivial progress. According to Liebrenz et al. (2023), the ChartGPT ethical empirical findings further underscore the essentials of comprehensive outlook provision more broadly on the ethics of language models.

The diagnosed inherent ChatGPT risks indicate that other language models most likely pertained to ethical considerations. Shortly, more expansive models incorporating multiple modalities will be encountered, as documented in the literature that caricatures scaling laws governing language model development. The ultimate goal of realizing the foundational model concepts drives the efforts toward integrating multiple modalities. Dwivedi et al. (2023) demonstrated that robust model scaling with computation resource requirement outpaces that of system hardware. The authors argued that inevitability aspects exist in model scaling, and scaling law determines it. However, compression, strategy, and recent model design attempts could mitigate computational resources’ unnecessary constructions. Liebrenz et al. (2023) summer sized majority of the work in this research, and it is essential to elaborate on the introduction of the designs and approaches related to the topic.

In conclusion, the research diagnoses ChatGPT’s compressive ethical aspects related to robustness, biases, and reliability. While showing evidence of ethical risks, ChatGPT may perform better than current SOTA language models when various case studies and benchmarks are conducted. Concretely, the research indicates the sensibility of ChartGPT in prompting unethical behavior injections. It further provides ethical challenges and an outlook toward advanced language development models. The study can inspire earners to contemplate evaluating language models appropriately,

References

Biswas, S. (2023). ChatGPT and the future of medical writing. In Radiology (p. 223312). Radiological Society of North America.

Borji, A. (2023). A categorical archive of ChatGPT failures. ArXiv Preprint ArXiv:2302.03494.

Dwivedi, Y. K., Kshetri, N., Hughes, L., Slade, E. L., Jeyaraj, A., Kar, A. K., Baabdullah, A. M., Koohang, A., Raghavan, V., & Ahuja, M. (2023). “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges, and implications of generative conversational AI for research, practice, and policy. International Journal of Information Management, 71, 102642.

Liebrenz, M., Schleifer, R., Buadze, A., Bhugra, D., & Smith, A. (2023). Generating scholarly content with ChatGPT: Ethical challenges for medical publishing. The Lancet Digital Health, 5(3), e105–e106.

Mhlanga, D. (2023). Open AI in education, the responsible and ethical use of ChatGPT towards lifelong learning. Education, the Responsible and Ethical Use of ChatGPT Towards Lifelong Learning (February 11, 2023).

Zhuo, T. Y., Huang, Y., Chen, C., & Xing, Z. (2023a). Exploring AI ethics of ChatGPT: A diagnostic analysis. ArXiv Preprint ArXiv:2301.12867.

Zhuo, T. Y., Huang, Y., Chen, C., & Xing, Z. (2023b). Exploring AI ethics of ChatGPT: A diagnostic analysis. ArXiv Preprint ArXiv:2301.12867.

write

write